Introduction

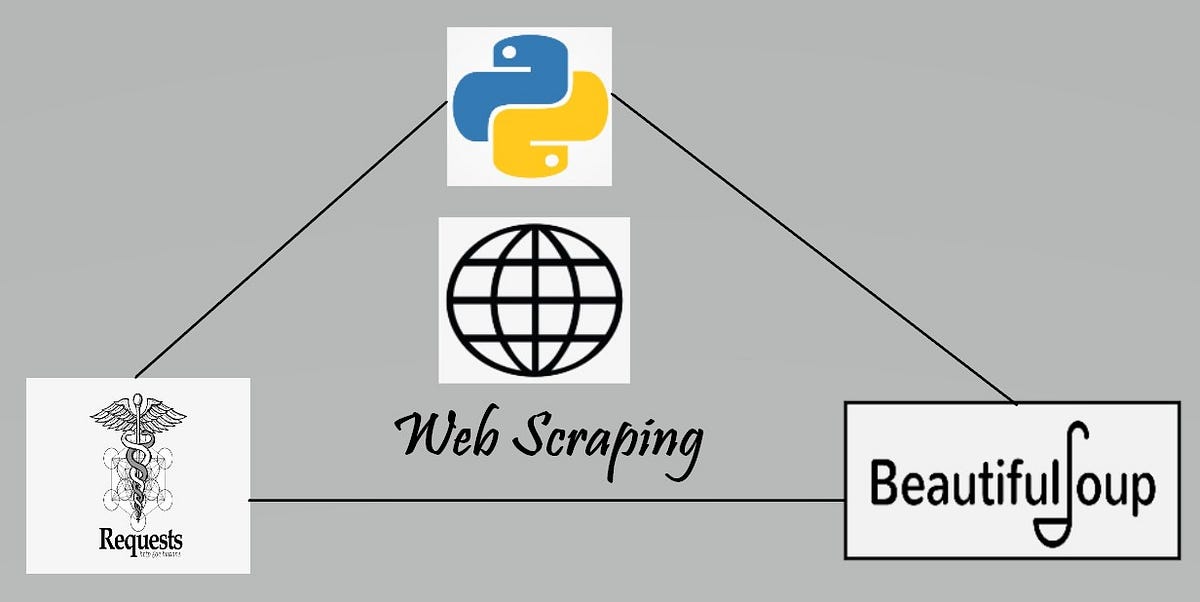

Do you know what the greatest advantage of the World Wide Web is? Many would say the ability to communicate, but I would say it is the massive amount of data available to everyone. So what if someone like you or me needs to collect this data for a study or an analysis? Is that possible? It surely is, with the modern (and not so new) technique of “Web Scraping”.

Web scraping is used to extract data from websites that allow their users to scrape information within certain boundaries. We can think of this technique as a grey area: extracting data is generally treated as legitimate as long as the extraction follows all the rules the website states in its **“/robots.txt”** file. It is also of great importance that the extracted data is never used for any illegal activities. Web scraping can be done with several available APIs, open-source tools, and languages such as Python and R, along with Selenium. In this article, we use the Python libraries Requests, BeautifulSoup, and Pandas to extract the data and build data frames for analysis.

Speaking of the “/robots.txt” file, it is always advisable to look at a website's robots file to identify the scope of scraping. This means distinguishing the paths the website permits crawlers to visit from the ones it prohibits. We can access the file by simply appending “/robots.txt” to the website's root URL.

Example:

http://www.flipkart.com/robots.txt
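Python's standard library can also read these rules for us. The sketch below uses `urllib.robotparser.RobotFileParser` to check whether a given URL may be fetched. The rules in `sample_rules` are hypothetical placeholders, not Flipkart's actual robots.txt, and `is_allowed` is a helper name of my own; against the real site you would give the parser the robots.txt URL and call `read()` instead of `parse(...)`.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_lines, url, user_agent="*"):
    """Return True if the given robots.txt lines permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_lines)  # parse rules from a list of lines instead of downloading them
    return parser.can_fetch(user_agent, url)


# Hypothetical rules for illustration only; these are NOT Flipkart's real robots.txt.
sample_rules = """
User-agent: *
Disallow: /account/
Disallow: /checkout/
""".splitlines()

print(is_allowed(sample_rules, "https://www.flipkart.com/mobiles/pr?sid=tyy,4io"))  # True
print(is_allowed(sample_rules, "https://www.flipkart.com/account/orders"))          # False

# Against the live site (requires network access):
# parser = RobotFileParser("http://www.flipkart.com/robots.txt")
# parser.read()
# parser.can_fetch("*", "https://www.flipkart.com/mobiles/pr?sid=tyy,4io&otracker=categorytree")
```

Because `can_fetch` only does prefix matching on the rules it was given, the result is only as good as the robots.txt you feed it, so read the live file before scraping.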

As you may have guessed, in this article we will be scraping data from the famous Indian e-commerce website “Flipkart”. We will focus on a single product, the “Mobile Phone”, and the following attributes of the product are our key interest for extraction:

⦁ Mobile Name

⦁ RAM and ROM Specification

⦁ Ratings and Reviews

⦁ Stars

⦁ List and Sales Price
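Before scraping, it can help to pin down what one scraped record looks like. The sketch below models the attributes above as a Python dataclass; the field names (`name`, `ram_rom`, `ratings_reviews`, `stars`, `list_price`, `sales_price`) and the choice to keep every value as the raw scraped string are my own assumptions, not part of the original walkthrough. A list of such records converts directly into rows for a Pandas data frame.

```python
from dataclasses import dataclass, asdict


@dataclass
class PhoneListing:
    """One mobile phone listing; values are kept as raw text exactly as scraped (an assumption)."""
    name: str             # Mobile Name
    ram_rom: str          # RAM and ROM Specification
    ratings_reviews: str  # Ratings and Reviews
    stars: str            # Stars
    list_price: str       # List Price
    sales_price: str      # Sales Price


def to_rows(listings):
    """Convert PhoneListing objects into plain dicts, one dict per data frame row."""
    return [asdict(listing) for listing in listings]


# Usage (hypothetical variable name): df = pd.DataFrame(to_rows(scraped_listings))
```

Keeping the values as strings defers cleaning (stripping currency symbols, splitting "RAM | ROM") to a later step, where Pandas is better suited for it.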

If you wish to dive directly into the code, visit my GitHub repository, which contains a summary of the web scraping process.

_Hop, Skip and GitHub_

So let’s get coding!

Step 1: Imports.

Import the basic libraries used for web scraping:

- Requests: a Python library used to send HTTP requests to a website and store the response object in a variable.

- BeautifulSoup: a Python library used to extract data from HTML or XML documents.

- Pandas: a Python library for data analysis, which this article uses to create a data frame.

```python
from bs4 import BeautifulSoup
import requests
import pandas as pd
```

Step 2.1: Request code.

This step is the main responsibility of the Requests library. The “requests.get()” method sends an HTTP GET request to the specified URL and returns a Response object, which we store in a variable.

```python
url1 = 'https://www.flipkart.com/mobiles/pr?sid=tyy,4io&otracker=categorytree'

# Send a GET request and keep the Response object in r
r = requests.get(url1)
```
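In practice it can be worth giving `requests` a little more to work with. The sketch below passes the query string of `url1` through `params=` instead of hand-writing it, sends a `User-Agent` header (some sites may respond differently to the default `python-requests` agent; the header value here is a made-up placeholder), and sets a `timeout` so a slow server cannot hang the script. `build_request`, `fetch`, `HEADERS`, and `PARAMS` are hypothetical names; `requests.Request(...).prepare()` lets us inspect the final URL without touching the network.

```python
import requests

BASE_URL = "https://www.flipkart.com/mobiles/pr"
# Same query parameters as url1, passed as a dict so requests URL-encodes them.
PARAMS = {"sid": "tyy,4io", "otracker": "categorytree"}
# Placeholder User-Agent string (an assumption; pick one that honestly identifies your scraper).
HEADERS = {"User-Agent": "Mozilla/5.0 (compatible; study-scraper/0.1)"}


def build_request(base_url=BASE_URL, params=PARAMS, headers=HEADERS):
    """Prepare, but do not send, a GET request so its URL and headers can be inspected."""
    return requests.Request("GET", base_url, params=params, headers=headers).prepare()


def fetch(prepared, timeout=10):
    """Send a prepared request, giving up after `timeout` seconds."""
    with requests.Session() as session:
        return session.send(prepared, timeout=timeout)


prepared = build_request()
print(prepared.url)  # the encoded URL that would be requested
# r = fetch(prepared)  # uncomment to actually hit the site (needs network access)
```

Separating preparation from sending is a design choice for testability: you can check the exact URL and headers before making any request to the live site.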

Step 2.2: Check Status.

Is it possible to check whether we have received a successful response? It surely is. The response's **“status_code”** attribute (here, **“r.status_code”**) tells us whether the HTTP communication between our code and the website succeeded or failed. Some of the status codes we may receive are:

- 200 — “OK”

- 404 — “NOT FOUND”

- 403 — “FORBIDDEN”

- 500 — “INTERNAL SERVER ERROR”

```python
r.status_code  # displays the status code in a notebook; 200 means success
```
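Rather than memorising every code, Python's standard `http.HTTPStatus` enum can translate a status code into its reason phrase. The helper `describe_status` below is a hypothetical name, and treating every 2xx code as success is my own simplification. With `requests` you can also call `r.raise_for_status()`, which raises an `HTTPError` for 4xx and 5xx responses.

```python
from http import HTTPStatus


def describe_status(status_code):
    """Return (succeeded, reason_phrase) for a standard HTTP status code.

    Raises ValueError for codes that HTTPStatus does not define.
    """
    phrase = HTTPStatus(status_code).phrase
    succeeded = 200 <= status_code < 300  # treat any 2xx code as success
    return succeeded, phrase


for code in (200, 404, 403, 500):
    print(code, describe_status(code))

# With a real response: ok, reason = describe_status(r.status_code)
```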

Step 3: Use BeautifulSoup to parse the HTML code.

Another feature of the requests library is the **“Response.content”** attribute, which holds the raw body of the HTTP response as bytes. BeautifulSoup parses this content into a navigable tree of HTML elements, which we store in the variable s.

```python
# Parse the raw response bytes with Python's built-in HTML parser
s = BeautifulSoup(r.content, 'html.parser')
```
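Once the page is parsed, values are pulled out of the soup with methods such as `find_all()` and `get_text()`. The sketch below wraps that in a hypothetical helper, `extract_texts`, and runs it on a tiny made-up HTML snippet. The tag and class names are placeholders: Flipkart's real class names must be found with your browser's developer tools and may change over time.

```python
from bs4 import BeautifulSoup


def extract_texts(content, tag, css_class, parser="html.parser"):
    """Return the stripped text of every <tag> element whose class list contains css_class."""
    soup = BeautifulSoup(content, parser)
    return [element.get_text(strip=True) for element in soup.find_all(tag, class_=css_class)]


# Made-up markup standing in for r.content; it is NOT Flipkart's real HTML.
sample_html = b"""
<div class="product"><div class="name"> Phone A </div><div class="other">ignored</div></div>
<div class="product"><div class="name">Phone B</div></div>
"""

print(extract_texts(sample_html, "div", "name"))
# On the real page: extract_texts(r.content, "div", "<class name found via the inspector>")
```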
