Today we are going to learn how to work with images to detect faces and extract facial features such as the eyes, nose, and mouth. There are a number of incredible things we can do with this information as a pre-processing step: capture faces for tagging people in photos (manually or through machine learning), create effects to “enhance” our images (similar to those in apps like Snapchat), run sentiment analysis on faces, and much more.

We have previously covered how to work with OpenCV to detect shapes in images, but today we will take it to a new level by introducing Dlib and extracting facial features from an image.

Dlib is an advanced machine learning library that was created to solve complex real-world problems. It is written in C++ and can also be used from Python through its official bindings. It is worth noting that this tutorial assumes some prior familiarity with the OpenCV library, such as how to load images, open the camera, and apply basic image processing techniques.

How does it work?

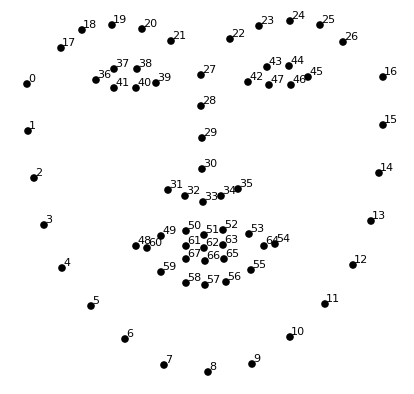

Our face has several features that can be identified, like our eyes, mouth, and nose. When we use Dlib algorithms to detect these features, we actually get a map of points that surround each feature. This map, composed of 68 points (called landmark points, indexed 0–67), can identify the following features:

Point Map

- Jaw Points = 0–16
- Right Brow Points = 17–21
- Left Brow Points = 22–26
- Nose Points = 27–35
- Right Eye Points = 36–41
- Left Eye Points = 42–47
- Mouth Points = 48–60
- Lips Points = 61–67
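To make the point map concrete, here is a minimal sketch of how those index ranges could be used to split a flat list of landmark points into named features. The names `FEATURE_RANGES` and `group_landmarks` are hypothetical helpers invented for this illustration, not part of Dlib or OpenCV, and the coordinates are placeholders so the snippet runs without a camera or a model file.

```python
# Inclusive index ranges, copied from the point map above.
# FEATURE_RANGES and group_landmarks are illustrative names, not a library API.
FEATURE_RANGES = {
    "jaw": (0, 16),
    "right_brow": (17, 21),
    "left_brow": (22, 26),
    "nose": (27, 35),
    "right_eye": (36, 41),
    "left_eye": (42, 47),
    "mouth": (48, 60),
    "lips": (61, 67),
}


def group_landmarks(points):
    """Split a flat list of 68 (x, y) points into a dict of named features."""
    if len(points) != 68:
        raise ValueError(f"expected 68 landmark points, got {len(points)}")
    return {
        name: points[start:end + 1]  # end is inclusive, so slice to end + 1
        for name, (start, end) in FEATURE_RANGES.items()
    }


# Placeholder coordinates standing in for real detector output.
dummy_points = [(i, i * 2) for i in range(68)]
features = group_landmarks(dummy_points)

for name, pts in features.items():
    print(f"{name}: {len(pts)} points")
```

With real data, the list of points would typically be built from the object returned by Dlib's shape predictor, for example `[(shape.part(i).x, shape.part(i).y) for i in range(shape.num_parts)]`, assuming `shape` is that returned object.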

Now that we know a bit about how we plan to extract the features, let’s start coding.
