Models these days are very big, and most of us don’t have the resources to train them from scratch. Luckily, HuggingFace has generously provided pretrained models in PyTorch, and Google Colab lets us use its GPUs (for a limited time). Otherwise, even fine-tuning a model on a small dataset would take a significant amount of time on my local machine, which has no NVIDIA GPU. While this tutorial uses GPT2, the same steps work for any of the pretrained models provided by HuggingFace, and for any size too.

Setting Up Colab to use GPU… for free

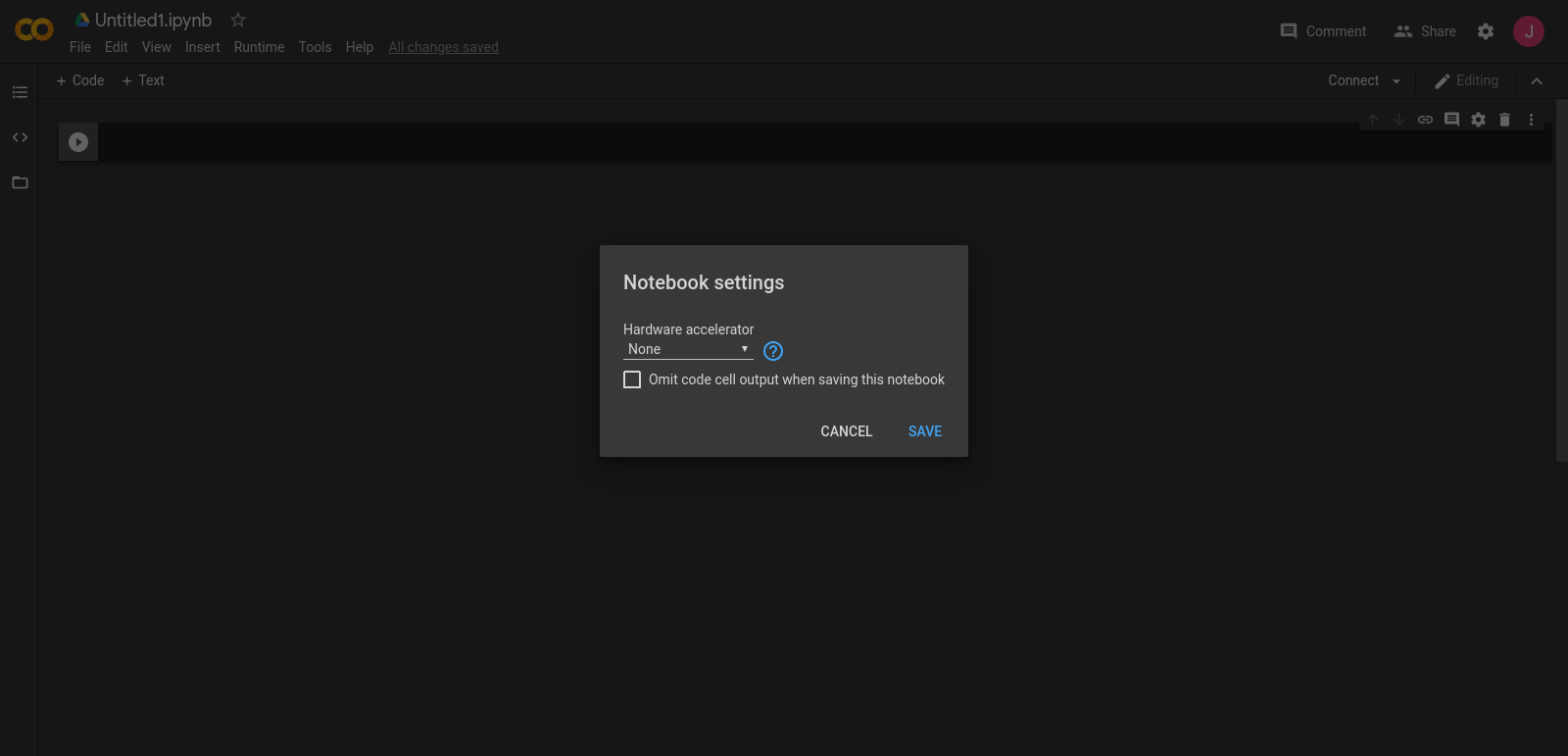

Go to Google Colab and create a new notebook. It should look something like this.

Enable the GPU by clicking Runtime > Change runtime type and selecting GPU as the hardware accelerator.

Then click Save.
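To confirm the runtime change took effect, a quick check like the one below can help. This is a minimal sketch, assuming the `nvidia-smi` tool is on the PATH whenever a GPU runtime is attached; the helper name `gpu_visible` is made up for illustration.

```python
import shutil
import subprocess


def gpu_visible() -> bool:
    """Return True if nvidia-smi exists and lists at least one GPU."""
    if shutil.which("nvidia-smi") is None:
        return False  # no NVIDIA driver tools found on this machine
    try:
        result = subprocess.run(
            ["nvidia-smi", "-L"],  # -L prints one line per detected GPU
            capture_output=True,
            text=True,
            timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0 and "GPU" in result.stdout


print("GPU available:", gpu_visible())
```

If this prints False, the notebook is probably still on a CPU runtime, so it is worth revisiting the runtime settings before going further.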

Installing Dependencies

Normally we would run pip3 install transformers in Bash, but because this is a Colab notebook, we have to prefix the command with !

!pip3 install transformers
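After the install finishes, it can be useful to check that the package is actually visible to the notebook's Python. The snippet below is a small sketch using only the standard library (Python 3.8 or newer); `installed_version` is a hypothetical helper name, not part of transformers.

```python
from importlib import metadata


def installed_version(package: str):
    """Return the installed version string of `package`, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# Prints the version if the pip install succeeded, otherwise None.
print("transformers:", installed_version("transformers"))
```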

Getting WikiText Data

You can read more about WikiText data here. There are two versions, WikiText-2 and WikiText-103. We’re going to use WikiText-2 because it’s smaller, and Colab limits both how long we can run on a GPU and how much data we can load into memory. To download and unzip the data, run the following in a cell:

%%bash

wget https://s3.amazonaws.com/research.metamind.io/wikitext/wikitext-2-raw-v1.zip

unzip wikitext-2-raw-v1.zip
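Once the archive is unzipped, a quick look at the files helps confirm the download worked. The sketch below assumes the archive extracts into a directory named `wikitext-2-raw` (an assumption based on the zip name, so adjust it if your output differs); the helper names are made up for illustration.

```python
from pathlib import Path


def summarize_split(path):
    """Return (line_count, word_count) for one text file."""
    lines = words = 0
    with open(path, encoding="utf-8") as f:
        for line in f:
            lines += 1
            words += len(line.split())  # whitespace-separated tokens
    return lines, words


def summarize_dataset(data_dir="wikitext-2-raw"):
    """Map each file name in data_dir to its (line_count, word_count)."""
    root = Path(data_dir)
    if not root.is_dir():
        return {}  # nothing extracted here (assumed directory name may differ)
    return {
        p.name: summarize_split(p)
        for p in sorted(root.iterdir())
        if p.is_file()
    }


for name, (n_lines, n_words) in summarize_dataset().items():
    print(f"{name}: {n_lines} lines, {n_words} whitespace-separated tokens")
```

If nothing is printed, the directory was not found, which usually means the download or unzip step failed or extracted to a different path.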
