What if we designed machines to be more considerate social actors?

Our devices are socially awkward by design. Still, little has had a bigger effect on society over the last decade than smartphones and the second-order effects they caused. Over the next decade, as technology becomes even more intimate and, at the same time, more situationally aware through advances in sensors and artificial intelligence, we as designers and technologists have a unique opportunity to shape technology’s role as a social actor with intent.

Dieter Rams is often quoted as saying that he strives to design products to behave like ‘a good English butler’. This is a good metaphor for Rams’ fifth principle, ‘good design is unobtrusive’. In the world of physical products this was clearly distinguishable from his second principle, ‘good design makes a product useful’. But as products become more connected and, at the same time, more ingrained into our lives, they could easily be rendered unusable if they are not fundamentally designed for unobtrusiveness.

One can already feel this in today’s smartphone usage, in how overwhelmed people are by the number of notifications they receive. Now just imagine a set of augmented reality goggles that do not know when it is appropriate to surface information and when they have to clear the field of view.

In a bigger sense, the idea of a product being an ‘English butler’, and the requirement of products being ‘there for you when you need them, but in the background at all times’, has in the physical world typically been translated mostly into aesthetic qualities. Digital products, though, were unable to deliver on this expectation for a long time. Limited sensing capabilities, and even more importantly shortcomings in battery life and computational capacity, led to a ‘pull’-based model of interaction design that mostly required the user to request every action manually. With the inception of the smartphone and the emergence of ubiquitous connectivity, ‘push notifications’ became an ingrained part of our daily lives, and with them the constant struggle to keep up with this new ‘push’-based approach to interaction. For a long time the personified digital butler (software at large) could never anticipate our needs and always had to be asked to perform an action for us. Recently it has pivoted to constantly demanding our attention and imposing any trivial distraction upon us, serving not only its employer (‘us’, the user) but also any company whose app somehow found its way onto our home screens.

In the paper ‘Magic Ink’ from 2006, the year before the smartphone revolution was kicked off by the launch of the iPhone, Bret Victor already concluded that when it comes to human interface design for information software, ‘interaction’ is to be ‘considered harmful’ — or in a more ‘Ramsian’ way of phrasing it — software designers should strive for ‘as little interaction as possible’.

Victor proposes a strategy to reduce the need for interaction with a system by suggesting ‘that the design of information software should be approached initially and primarily as a graphic design project’, treating any interface design project as an exercise in graphic design first and using manipulation only as a last resort. While this is an effective approach for ‘pull’-based information software, it is not sufficient for communication software (which Victor describes as ‘manipulation software and information software glued together’), which today forces information upon us, mostly without much of a graphic interface at all¹.

Another strategy is to infer context through environment, history and user interaction. Victor writes that software can:

> 1. infer the context in which its data is needed,
> 2. winnow the data to exclude the irrelevant and
> 3. generate a graphic which directly addresses the present needs

Twelve years later, technology, and especially the integration of hardware and software, has progressed significantly. Its ability to infer context and winnow data has become much greater, and can therefore be used to generate ‘graphics’ (or any other output) that address the present user needs even better and, even more importantly, do so at a time, in a place and in a manner that is appropriate for the user and their environment.
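To make Victor's three steps a little more tangible, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `Item` type, the sensor fields and the functions `infer_context`, `winnow` and `generate` are hypothetical names, not part of ‘Magic Ink’ or of any existing system.

```python
from dataclasses import dataclass


@dataclass
class Item:
    title: str
    place: str  # where the item is relevant (hypothetical field)
    hour: int   # hour of day at which it becomes relevant (hypothetical field)


def infer_context(sensor_readings: dict) -> dict:
    """Step 1: reduce raw readings to the context in which data is needed."""
    return {"place": sensor_readings["place"], "hour": sensor_readings["hour"]}


def winnow(items: list, context: dict) -> list:
    """Step 2: exclude everything irrelevant to the inferred context."""
    return [
        item for item in items
        if item.place == context["place"] and item.hour >= context["hour"]
    ]


def generate(items: list) -> str:
    """Step 3: produce output that directly addresses the present need."""
    if not items:
        return ""  # nothing relevant, so the system stays silent
    soonest = min(items, key=lambda item: item.hour)
    return f"Next: {soonest.title} at {soonest.hour}:00"


items = [
    Item("Stand-up", "office", 10),
    Item("Dentist", "town", 15),
    Item("Review", "office", 14),
]
context = infer_context({"place": "office", "hour": 11, "noise_db": 40})
print(generate(winnow(items, context)))  # prints "Next: Review at 14:00"
```

The user never asks for anything here; the only ‘interaction’ is being in a place at a time.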

But what could appropriateness mean for (inter-)action? Merriam-Webster defines appropriateness as ‘the quality or state of being especially suitable or fitting’. Here, I am going to deem any action or interaction appropriate if it cannot be avoided through automation or anticipation and fits a given society’s model of ethics². That model varies vastly between societies, but it also depends on one’s very personal relationship with a given group of people, be it a whole culture or a group of friends.

With the widespread adoption of mobile phones and the advent of ringtones, movie theatres decided to come up with a contract of what is appropriate usage of this technology in the environment they provide. To this day every movie starts with an announcement asking people to put their phones into ‘silent mode’. Similar measures have been taken on public transportation. The emergence of the smartphone even led to a cottage industry of devices trying to make it harder for people to use their smartphones and be distracted during shows.

Modelling appropriateness computationally, though, is no easy task. Attempts at this problem in our current devices are all very tactical. The whole area of human-computer etiquette is still neglected in most software today; sometimes it is only considered because of legal requirements.

But even more general approaches to modelling appropriateness, like the ‘Do not Disturb’ feature in Apple iOS, require the user to be very deliberate in their setup, and lack smartness and fluidity, since they are all modelled after the design-blueprint of their ancestor, ‘airplane mode’.

The exploration of machines as social actors in interaction design or human-computer interaction research is not new. Advances in sensors and computation, combined with more capable machine learning, naturally lead towards a movement to make computers more situationally or socially aware.

This piece builds on a lot of the great work being done in the field of affective computing at large, and in human-computer etiquette and CASA (computers are social actors) more specifically.

I started my design process by exploring the future of work. The office has always been fertile ground for interaction design. In the ‘mother of all demos’, Douglas Engelbart starts by explaining what the audience is about to see, describing a scenario that takes place in the office of a knowledge (or, back then, “intellectual”) worker:

“… if in your office, you, as an intellectual worker, were supplied with a computer display backed up by a computer that was alive for you all day and was instantly (…) responsive to every action you have, how much value could you derive from that?”

The office has changed a lot since then, to a great extent thanks to Engelbart’s and Licklider’s contributions through SRI. A lot of people do not work in an office at all anymore. And all the technology that eventually turned into tools for thought and bicycles for the mind, and that truly helped augment our mind’s capability, productivity and creativity, ended up at the same time minimising our space for thought: the capacity of our mind.

In a human-centred design process, I started off by interviewing people about what today’s knowledge work looks like, and then ideated concepts that go beyond the knowledge worker’s office, be it a cubicle, an open-plan space or anywhere else.

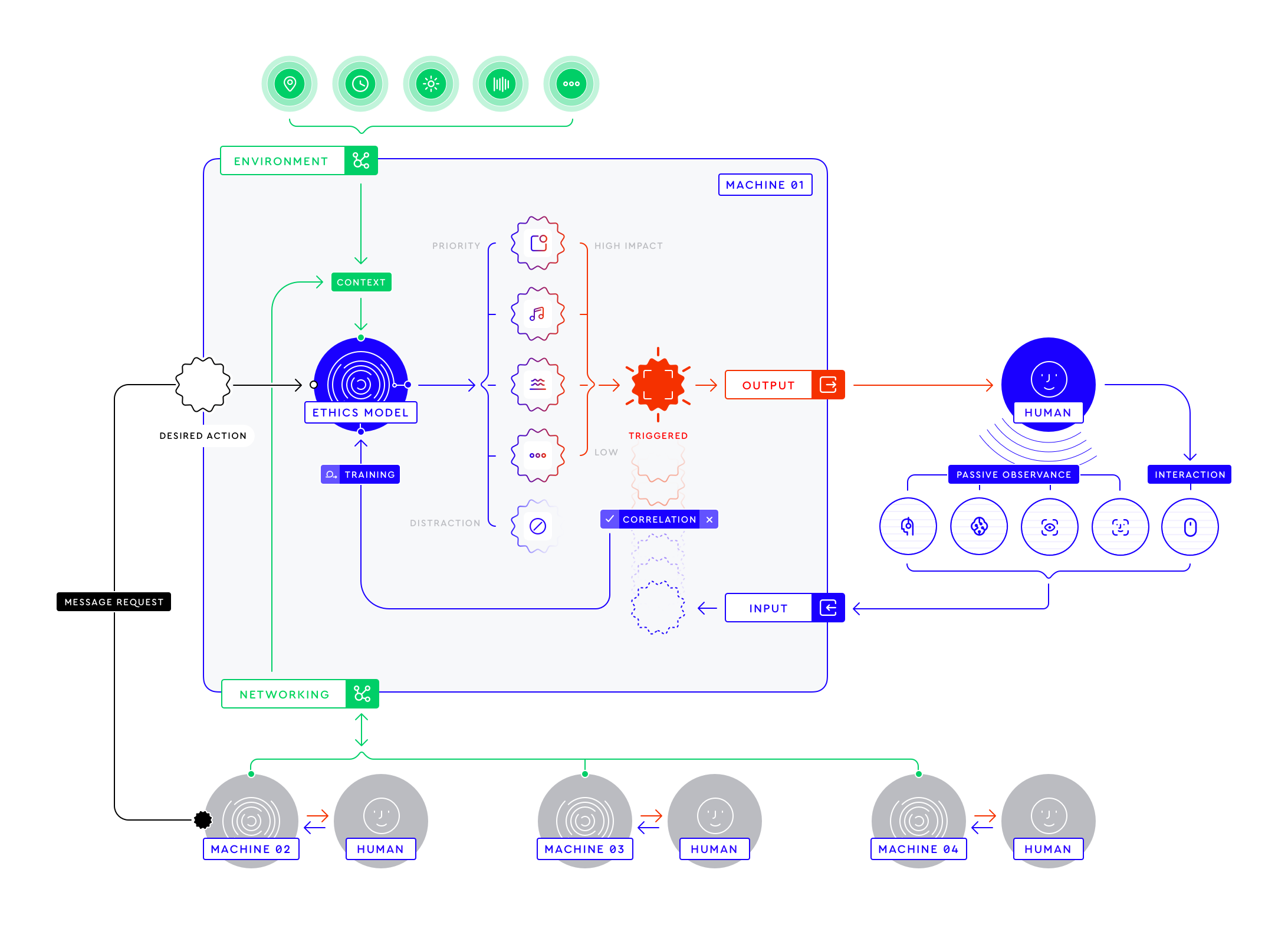

A framework eventually emerged that forms the foundation (the “API”³) for the individual applications. It builds on a system of sensors and intelligence that can infer context and winnow data, in order to build a model of minimal interaction that occurs only when appropriate. What counts as appropriate is based on the user’s environment, their state of mind and a model of ethics, which is trained over time on feedback from previous interactions between the system and the user in a given context.

Conceptual ‘API’ graph for appropriate machine behaviour
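As a rough illustration of the conceptual ‘API’, the gate below combines the two conditions used in this piece: an interaction happens only if it cannot be avoided through automation, and only if it fits the ethics model for the current context. This is a sketch under my own assumptions; `Context`, `EthicsModel`, `should_interact`, the 0 to 1 score range and the neutral value of 0.5 are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Context:
    environment: str    # e.g. "car", "cinema", "open office"
    state_of_mind: str  # e.g. "focused", "idle"


@dataclass
class EthicsModel:
    # Learned acceptance score per (context, action), from 0.0 to 1.0.
    scores: dict = field(default_factory=dict)
    neutral: float = 0.5  # assumed score for situations never seen before

    def fits(self, context: Context, action: str) -> bool:
        # Only clearly accepted behaviour passes; unknown situations do not.
        return self.scores.get((context, action), self.neutral) > self.neutral


def should_interact(action: str, context: Context,
                    model: EthicsModel, can_automate: bool) -> bool:
    """Interact only when unavoidable and appropriate in this context."""
    if can_automate:
        return False  # avoidable through automation or anticipation
    return model.fits(context, action)


driving_focused = Context("car", "focused")
driving_idle = Context("car", "idle")
model = EthicsModel({
    (driving_focused, "speak"): 0.2,
    (driving_idle, "speak"): 0.9,
})

print(should_interact("speak", driving_idle, model, can_automate=False))     # True
print(should_interact("speak", driving_focused, model, can_automate=False))  # False
```

Defaulting unknown situations to ‘stay in the background’ is a design choice in this sketch, not something the framework prescribes.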

Now we will apply this system to scenarios that emerged from the research and that currently involve ‘inappropriate’ behaviour, and try to envision the machines involved as more considerate social actors.

Every prototype is accompanied by a broad ‘how might we?’ question that is intended to act as a loose principle, so that other designers and technologists can ask themselves the same questions when they design their product or service — looking at the prototype as one of many possible implementation strategies.

1 → Human Machine Etiquette

How might we design machines that can act appropriately towards their user?

What if a navigation system could be respectful of a conversation?

Imagine driving your car while having an in-depth conversation with your co-driver. Your co-driver listens to you when you’re talking and vice versa. It is an engaging discussion, and everyone who understands natural language can tell.

Prototype → a simple iOS app that doesn’t interrupt the user when it hears them speak, but uses short audio signals to make itself heard.

So can the navigation system, which uses speech recognition and natural language processing to inform its ‘ethics model’. It therefore only speaks out the next direction when there is a natural break in the conversation. As the information gets more urgent, it makes use of more intrusive signals. Only when the urgency has reached a maximum will the system resort to a signal as intrusive as speaking out loud. With the help of eye-tracking and other sensing devices, it could even tell whether you have already noticed the instruction.
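The escalation logic of such a navigation system could look roughly like the sketch below. The signal names, the urgency thresholds (0.4, 0.7, 1.0) and the 500 m lookahead are assumptions I picked for illustration; a real system would learn them from feedback rather than hard-code them.

```python
from enum import IntEnum


class Signal(IntEnum):
    SILENT = 0
    SOFT_CHIME = 1
    LOUD_CHIME = 2
    SPEAK = 3


def urgency_from_distance(distance_m: float, lookahead_m: float = 500.0) -> float:
    """Map the distance to the next turn onto an urgency between 0.0 and 1.0."""
    return max(0.0, min(1.0, 1.0 - distance_m / lookahead_m))


def choose_signal(urgency: float, someone_speaking: bool,
                  acknowledged: bool = False) -> Signal:
    """Pick the least intrusive signal that still gets the direction across."""
    if acknowledged:
        return Signal.SILENT      # e.g. eye-tracking saw the driver check the map
    if not someone_speaking:
        return Signal.SPEAK       # natural break in the conversation
    if urgency >= 1.0:
        return Signal.SPEAK       # last resort: talk over the conversation
    if urgency >= 0.7:
        return Signal.LOUD_CHIME
    if urgency >= 0.4:
        return Signal.SOFT_CHIME
    return Signal.SILENT          # not urgent yet, keep waiting for a pause


for distance in (450, 250, 100, 0):
    urgency = urgency_from_distance(distance)
    print(distance, choose_signal(urgency, someone_speaking=True).name)
```

With an ongoing conversation, the loop moves from silence through the two chimes to speech as the turn gets closer.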

The same system, using the ‘API’, could even be integrated with apps like Apple Music or Spotify, perfectly timing interruptions for a natural break in the conversation in your favourite podcast, or for just after your favourite part of the song you’re listening to.

2 → Negotiable Environment

How might we design machines that are aware and attentive of their environment?

In general, ‘negotiable environments’ are those in which interactions between a machine and its owner have an impact on the people surrounding them. Often this is relevant in public spaces, for example on a train or in a park, but even more so in a more intimate space that is shared by the same people, like an open office, a cinema or even an airplane.

What if machines could behave politely towards everyone they affect?

Prototype → A new take on the brightness slider that contains a subtle gradient to visualize how others set brightness around you to nudge you to set it similarly.

There are only a few people left using their devices. As the first ones turn down the brightness of their laptops to get into sleep mode, the average brightness of the devices in the vicinity starts to drop, and the other devices, whose brightness hasn’t been adjusted, automatically adapt accordingly. If their users don’t reset it (a reset would apply negative reinforcement), their respective ‘ethics models’ get trained on this behaviour as being appropriate and accepted by their user.
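A minimal sketch of this behaviour, assuming devices can somehow share their brightness with neighbours: the `Device` class, the 0 to 1 brightness scale and the adaptation `rate` are hypothetical, and how devices would discover each other is left out entirely.

```python
from statistics import mean


class Device:
    def __init__(self, brightness: float, user_adjusted: bool = False):
        self.brightness = brightness        # 0.0 (dark) to 1.0 (full)
        self.user_adjusted = user_adjusted  # True if the owner set it by hand
        self.feedback = []                  # (proposed level, accepted?) pairs

    def adapt_to(self, neighbours: list, rate: float = 0.5) -> float:
        """Move part of the way towards the average brightness nearby."""
        if self.user_adjusted or not neighbours:
            return self.brightness  # never override a deliberate choice
        target = mean(device.brightness for device in neighbours)
        self.brightness += rate * (target - self.brightness)
        return self.brightness

    def record_feedback(self, user_reset: bool) -> None:
        """A reset counts as negative feedback, no reset as acceptance."""
        self.feedback.append((self.brightness, not user_reset))


dimmed_a = Device(0.25, user_adjusted=True)
dimmed_b = Device(0.75, user_adjusted=True)
untouched = Device(1.0)

print(untouched.adapt_to([dimmed_a, dimmed_b]))  # 0.75
untouched.record_feedback(user_reset=False)      # the user let it be
print(untouched.feedback)                        # [(0.75, True)]
```

The `feedback` list is the training signal an ‘ethics model’ could later learn from.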

3 → Conformity

How might we design machines that adopt the appropriate behaviour in new and unknown environments and situations?

The last story brings up an interesting question: Should the settings automatically adapt to the environment or not? When people come to an unfamiliar place they look at how others behave, to figure out ‘how this place works’. Only when they are confident in the environment do they start to make adjustments or feel confident to behave outside the rules.

What if machines could understand and adapt dynamically to different customs and new environments?

Prototype → A settings app in which the settings change based on the user’s location or context (represented through proximity to different iBeacons).

Imagine a family in Japan enjoying a meal at a local restaurant. A businessman (it seems to be his first time in Japan) is loudly recording voice messages to send to a colleague as he enters the restaurant. His smartphone recognises the new environment, looks at the other phones (which are all switched to ‘Do not Disturb’) and copies their setting, since he has set his level of ‘conformity’ to ‘high’ for environments he is unfamiliar with. He will receive his colleague’s reply once he leaves the restaurant and can therefore fully enjoy his meal.
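The restaurant story can be sketched as a phone that copies the most common setting around it when the place is unfamiliar and its conformity is ‘high’, and that holds messages back until it leaves. The `Phone` class, the setting strings and the two-level conformity value are assumptions made for this example only.

```python
from collections import Counter


def setting_for_place(own_setting: str, nearby_settings: list,
                      familiar: bool, conformity: str) -> str:
    """Copy the majority setting only in unfamiliar places with high conformity."""
    if familiar or conformity != "high" or not nearby_settings:
        return own_setting
    most_common, _count = Counter(nearby_settings).most_common(1)[0]
    return most_common


class Phone:
    def __init__(self, setting: str = "ring", conformity: str = "high"):
        self.own_setting = setting
        self.active_setting = setting
        self.conformity = conformity
        self.held_messages = []

    def enter(self, nearby_settings: list, familiar: bool) -> None:
        self.active_setting = setting_for_place(
            self.own_setting, nearby_settings, familiar, self.conformity)

    def receive(self, message: str) -> bool:
        """Return True if the message is delivered right away."""
        if self.active_setting == "do_not_disturb":
            self.held_messages.append(message)
            return False
        return True

    def leave(self) -> list:
        """Restore the owner's setting and release everything held back."""
        self.active_setting = self.own_setting
        released, self.held_messages = self.held_messages, []
        return released


phone = Phone()
phone.enter(["do_not_disturb"] * 3 + ["ring"], familiar=False)
print(phone.active_setting)          # do_not_disturb
print(phone.receive("Re: meeting"))  # False, held until he leaves
print(phone.leave())                 # ['Re: meeting']
```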

4 → Augmentation

How might we leverage machines to become better social actors when we communicate through digital means?

Now that we’ve looked at multiple examples of how the system could make machines better social actors, we might ask ourselves how it could help us become better social actors too, especially in digital communication, where even humans often lack the usual social cues we use to figure out whether an interruption will be welcome or disruptive.

What if we always knew if it’s okay to ask our colleague a ‘quick question’?

Prototype → iOS App that uses Face ID eye-tracking and networks with another iPhone running the same app detecting when both people pay attention (or not) and then signaling between them.

Imagine being in an open plan office. It is 10am, usually the busiest hour of the day. Mark has a pressing question, but his colleague Laura seems busy. Mark knows that Laura has a deadline to meet sometime today. He is not sure whether to ask her right away, or to wait until lunch or even until late afternoon just to be safe.

Mark opens the ‘My Colleagues’ app, which Laura uses to signal whether she is deeply concentrated or happy for a little distraction. The app uses eye-tracking and other parameters to tell the difference. Laura indeed seems to be concentrated, so Mark requests a notification for when she is no longer concentrating and is happy to chat. When the system detects that Laura might need a little break, it asks her if she wants to chat to Mark. Laura agrees that she would appreciate a little break and accepts the invite. Mark gets a notification and they have a little chat.
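The flow between Mark and Laura can be sketched as a small state machine. The `Availability` class and its method names are hypothetical, and the sensing itself (eye-tracking and other parameters) is reduced to a single boolean that some other layer would have to provide.

```python
class Availability:
    """Tracks whether a colleague is concentrated and who is waiting for them."""

    def __init__(self, owner: str):
        self.owner = owner
        self.concentrated = False
        self.waiting = []

    def request(self, requester: str) -> str:
        """A colleague asks for a chat; queue them if the owner is busy."""
        if self.concentrated:
            self.waiting.append(requester)
            return "queued"
        return "available"

    def update(self, concentrated: bool) -> list:
        """Called by the sensing layer; returns prompts to show the owner."""
        self.concentrated = concentrated
        if concentrated:
            return []
        return [f"{name} would like to chat. Accept?" for name in self.waiting]

    def accept(self, requester: str) -> str:
        """The owner agrees, and only then is the requester notified."""
        self.waiting.remove(requester)
        return f"{self.owner} is free to chat now"


laura = Availability("Laura")
laura.update(concentrated=True)
print(laura.request("Mark"))             # queued
print(laura.update(concentrated=False))  # ['Mark would like to chat. Accept?']
print(laura.accept("Mark"))              # Laura is free to chat now
```

Note that Laura is asked before Mark hears anything, so the system never exposes her state without her consent.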

A significant element of this system is its ability to learn from scratch. Every system equipped with this framework could start out with a very basic ‘ethics model’, a ‘tabula rasa’. As it learns from its environment, and primarily from its user, it will develop a unique perspective on what is appropriate behaviour based on its experience: the feedback it received on actions it carried out in the real world. A system that was ‘socialised’ by, say, a fashion blogger in France will therefore have a slightly different idea of what is appropriate than a system that was trained through usage by an elderly woman in Japan who just got her first smartphone. Only once those devices are in the same vicinity will they start to influence each other, and if the blogger ever travels to Japan they might even raise their device’s ‘conformity settings’ out of respect for a culture that is unfamiliar to them, both person-to-person and device-to-device.
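One very simple way to picture learning from a ‘tabula rasa’ is a table of scores that all start at a neutral value and are nudged by every piece of feedback. The update rule below (an exponential moving average), the learning rate and the `blended_score` helper for device-to-device influence are my own assumptions, chosen for brevity rather than realism.

```python
from collections import defaultdict
from statistics import mean


class LearnedEthics:
    def __init__(self, learning_rate: float = 0.3):
        # Tabula rasa: every (context, action) starts at a neutral 0.5.
        self.scores = defaultdict(lambda: 0.5)
        self.learning_rate = learning_rate

    def feedback(self, context: str, action: str, accepted: bool) -> None:
        """Nudge the score towards 1.0 if accepted, towards 0.0 if rejected."""
        key = (context, action)
        target = 1.0 if accepted else 0.0
        self.scores[key] += self.learning_rate * (target - self.scores[key])

    def score(self, context: str, action: str) -> float:
        return self.scores[(context, action)]


def blended_score(own: float, peer_scores: list, conformity: float) -> float:
    """Mix the device's own view with nearby devices; conformity is 0.0 to 1.0."""
    if not peer_scores:
        return own
    return (1.0 - conformity) * own + conformity * mean(peer_scores)


blogger, newcomer = LearnedEthics(), LearnedEthics()
for _ in range(3):
    blogger.feedback("restaurant", "ring", accepted=True)
    newcomer.feedback("restaurant", "ring", accepted=False)

# The two devices were 'socialised' differently and now disagree.
print(blogger.score("restaurant", "ring") > 0.5)   # True
print(newcomer.score("restaurant", "ring") < 0.5)  # True

# With full conformity the blogger's device defers to the local device.
local = newcomer.score("restaurant", "ring")
print(blended_score(blogger.score("restaurant", "ring"), [local], 1.0) == local)  # True
```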

This ‘computational take on situational ethics’ is very different from the more ‘biblical law’-type approaches technology companies take today when deciding what is right and wrong. A lot of internet usage today is controlled by Facebook, which takes a stereotypically Silicon Valley approach to what content is allowed on the platform. Engineers, designers and product managers in Silicon Valley define what is moral for the whole rest of the world using their product. As Facebook (or its subsidiaries) keeps growing into new markets and beyond its 2 billion users, or as networks from other cultures gain a dominant position in the world of technology, the tensions that are already building up today are only going to grow, and the development of a more scalable, dynamic and inclusive solution has to start.

I would love to hear your thoughts on how we might make machines more considerate social actors. Let me know here or on Twitter @sarahmautsch.

Annotations

[1] Just think of notifications (the design of which is mostly prescribed by the creator of the operating system) or voice UIs like Siri or Alexa.

[2] In this piece the word ethics is used in its narrow sense, based on its literal original meaning, from the Greek ethos /έθος/, defined in the Oxford Dictionary as ‘the characteristic spirit of a culture, era, or community as manifested in its attitudes and aspirations’. Evaluating an act ethically by taking its context into account is called situational ethics. By contrast, a ‘biblical law’-type approach judges based on absolute moral doctrines. As this project is concerned with making machines behave appropriately based on what they learn from (inter-)actions within their environment, this piece fosters the idea of situational ethics.

[3] API stands for Application Programming Interface and is ‘a set of rules that allows programmers to develop software for a particular operating system without having to be completely familiar with that operating system’. Here we will treat it as a concept of the system (with all of its inputs, outputs and core functions) on the basis of which we can start to design.
