Hello everyone,

It has been a while since I last posted a tutorial, or anything at all. Life happened, and I chose not to post rather than share low-quality content. Today I'll walk you through a computer vision project that takes your live video input and translates your blinks into Morse code, so you can write messages by blinking short and long.
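Before getting into the camera side, here is a minimal sketch of the translation idea on its own: each blink becomes a dot or a dash depending on how long the eyes stay closed, and each group of blinks is looked up in the Morse table. The 0.4-second cut-off (`DOT_MAX_SECONDS`) and the assumption that letters arrive already grouped (for example, split by a pause with the eyes open) are hypothetical choices for illustration, not necessarily the rules the project uses.

```python
from typing import Dict, List, Sequence

# Standard International Morse code for the letters A-Z.
CHAR_TO_MORSE: Dict[str, str] = {
    "A": ".-", "B": "-...", "C": "-.-.", "D": "-..", "E": ".",
    "F": "..-.", "G": "--.", "H": "....", "I": "..", "J": ".---",
    "K": "-.-", "L": ".-..", "M": "--", "N": "-.", "O": "---",
    "P": ".--.", "Q": "--.-", "R": ".-.", "S": "...", "T": "-",
    "U": "..-", "V": "...-", "W": ".--", "X": "-..-", "Y": "-.--",
    "Z": "--..",
}
# Reverse lookup: Morse pattern -> letter.
MORSE_TO_CHAR: Dict[str, str] = {code: ch for ch, code in CHAR_TO_MORSE.items()}

# Hypothetical cut-off: blinks shorter than this count as a dot, the rest as a dash.
DOT_MAX_SECONDS = 0.4


def classify_blink(duration: float) -> str:
    """Map one blink duration (seconds the eyes stayed closed) to '.' or '-'."""
    return "." if duration < DOT_MAX_SECONDS else "-"


def blinks_to_text(letters: Sequence[Sequence[float]]) -> str:
    """Decode letters, each given as the list of its blink durations.

    Unknown patterns are rendered as '?'.
    """
    out: List[str] = []
    for blink_durations in letters:
        code = "".join(classify_blink(d) for d in blink_durations)
        out.append(MORSE_TO_CHAR.get(code, "?"))
    return "".join(out)


if __name__ == "__main__":
    # Three short blinks, three long blinks, three short blinks.
    print(blinks_to_text([[0.2, 0.2, 0.2], [0.8, 0.8, 0.8], [0.2, 0.2, 0.2]]))
```

In a live loop, a blink's duration could be measured by counting consecutive "eye closed" frames and dividing by the frame rate, or by taking `time.monotonic()` timestamps when the eyes close and reopen.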

The source code for the project is here. I also used this awesome tutorial as a boilerplate to start with; if you want to learn more about computer vision applications, check out its author's channel from the link I posted. So without further ado, let's dive right into it.

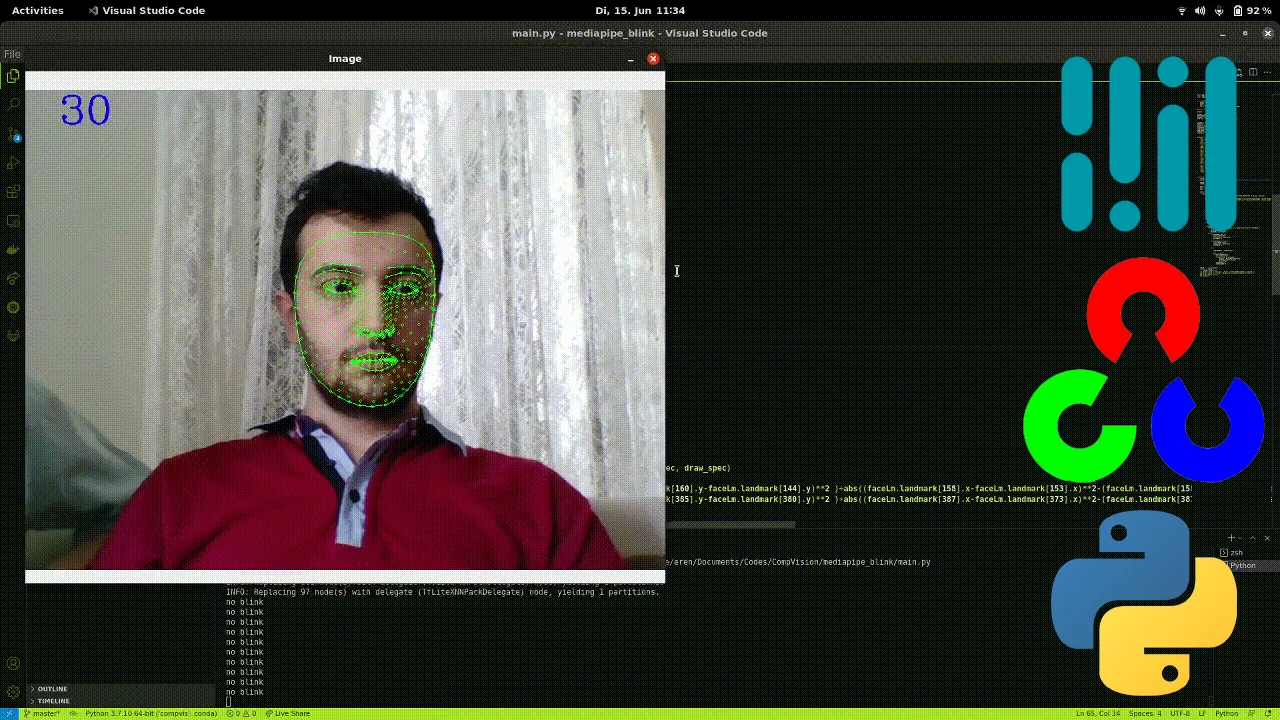

To begin, I want to explain the MediaPipe library a little. “MediaPipe offers open source cross-platform, customizable ML solutions for live and streaming media.” This definition comes from their own website and sums up what the library does concisely. MediaPipe offers several other solutions that run on different platforms, and I'll cover them in a future post. The solution we'll use today is called “Face Mesh”: it gives us a map of the 468 most important landmarks on a human face. Using that map, we'll calculate the ratio between the distances of particular points around the eyes, and from that ratio we'll detect whether the person on camera blinked.
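One common way to turn Face Mesh landmarks into a blink signal is the eye aspect ratio (EAR): the eye's height divided by its width, which drops sharply when the eyelids close. The sketch below uses the standard EAR formula, which may differ from the exact ratio this project computes. The landmark indices in `RIGHT_EYE_IDX` and `LEFT_EYE_IDX` are commonly cited values for the 468-point mesh and the 0.2 threshold is a hypothetical starting point; treat both as assumptions to verify against the landmark map and tune for your camera.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

# Assumed Face Mesh indices, ordered as the EAR formula expects:
# p1/p4 are the eye corners, p2/p3 sit on the upper lid,
# p6/p5 sit on the lower lid directly beneath p2/p3.
RIGHT_EYE_IDX = (33, 160, 158, 133, 153, 144)
LEFT_EYE_IDX = (362, 385, 387, 263, 373, 380)

# Hypothetical threshold: below this ratio the eye is treated as closed.
EAR_THRESHOLD = 0.2


def eye_points(landmarks, indices: Sequence[int], width: int, height: int) -> List[Point]:
    """Convert normalized landmarks (objects with .x/.y in [0, 1]) to pixel coordinates.

    Scaling to pixels matters because x and y are normalized by different
    dimensions, which would distort distances on non-square frames.
    """
    return [(landmarks[i].x * width, landmarks[i].y * height) for i in indices]


def eye_aspect_ratio(p: Sequence[Point]) -> float:
    """EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|)."""
    p1, p2, p3, p4, p5, p6 = p
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def is_eye_closed(p: Sequence[Point], threshold: float = EAR_THRESHOLD) -> bool:
    """True when the eye aspect ratio falls below the threshold."""
    return eye_aspect_ratio(p) < threshold
```

With MediaPipe's Python solutions API, the `landmarks` argument would be `results.multi_face_landmarks[0].landmark`, where `results` is returned by `mp.solutions.face_mesh.FaceMesh(...).process(rgb_frame)`. Averaging the ratio of both eyes is a common way to make the closed/open decision less noisy.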
