Learn how to solve common issues when working with the Edge TPU USB Accelerator

In this article, I share some common errors I ran into while getting a deep learning model to run on the Edge TPU USB Accelerator, along with the solutions that worked for me.

A Coral USB Accelerator is an application-specific integrated circuit (ASIC) that adds TPU computation power to your machine. The additional TPU compute makes deep learning inference at the Edge faster and easier.

Plug the USB Accelerator into a USB 3.0 port, copy over the Edge TPU TFLite model along with the inference script, and you are ready to go. It runs on Mac, Windows, and Linux.

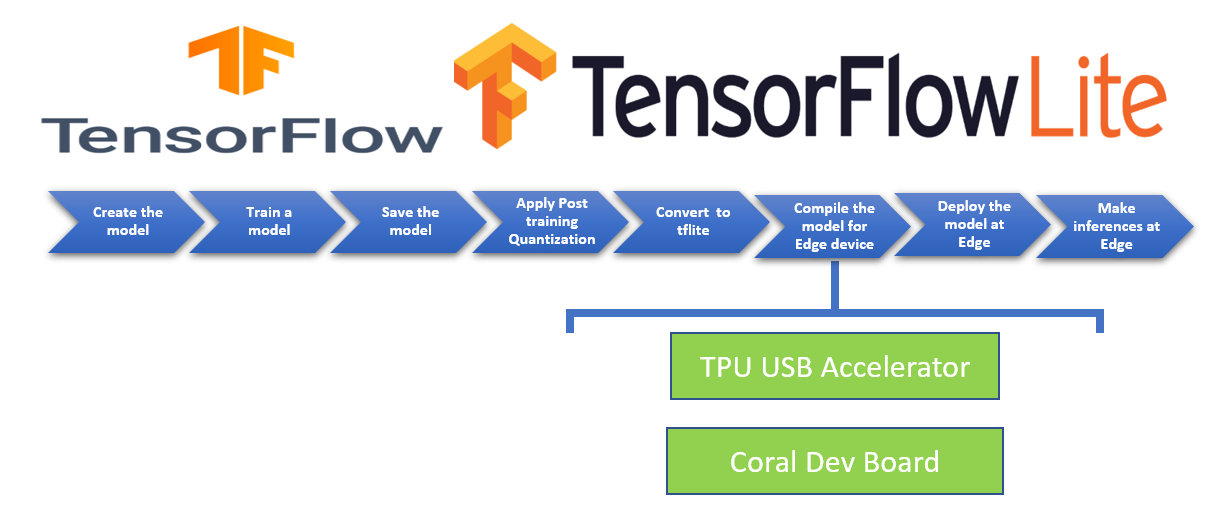

To create the Edge TPU TFLite model and make inferences at the Edge with the USB Accelerator, follow these steps:

- Create the model

- Train the model

- Save the model

- Apply post-training quantization

- Convert the model to the TensorFlow Lite format

- Compile the TFLite model with the Edge TPU compiler for Edge TPU devices such as the Coral Dev Board or the USB Accelerator

- Deploy the model at Edge and make inferences
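The compile step in the list above is done with the `edgetpu_compiler` command-line tool, which takes the quantized `.tflite` file and writes a new file with an `_edgetpu.tflite` suffix. Below is a minimal sketch of how that call could be wrapped from Python. It assumes the compiler is installed and on your `PATH` (the compiler itself is distributed for Debian-based Linux). The helper names `build_compile_command`, `compiled_model_path`, and `compile_for_edgetpu` are hypothetical and only used for illustration.

```python
import shutil
import subprocess
from pathlib import Path


def build_compile_command(model_path, out_dir="."):
    """Build the edgetpu_compiler command line for one model (hypothetical helper)."""
    # -s prints which operations were mapped to the Edge TPU,
    # -o sets the folder where the compiled model is written.
    return ["edgetpu_compiler", "-s", "-o", str(out_dir), str(model_path)]


def compiled_model_path(model_path, out_dir="."):
    """Return the path the compiler writes to: <name>_edgetpu.tflite."""
    return Path(out_dir) / (Path(model_path).stem + "_edgetpu.tflite")


def compile_for_edgetpu(model_path, out_dir="."):
    """Run the compiler if it is available; return the output path or None."""
    if shutil.which("edgetpu_compiler") is None:
        print("edgetpu_compiler not found on PATH; skipping compile")
        return None
    # check=True raises CalledProcessError if the compiler exits with an error
    subprocess.run(build_compile_command(model_path, out_dir), check=True)
    return compiled_model_path(model_path, out_dir)
```

Calling `compile_for_edgetpu("Intel_1.tflite")` would then either return the path of the compiled model or raise if the compiler aborts, which makes compiler failures easy to spot in a script.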

Issue 1: When compiling the TFLite model with edgetpu_compiler, I get the error “Internal compiler error. Aborting!”

The code that was erroring out:

    import tensorflow as tf

    test_dir = 'dataset'

    def representative_data_gen():
        # List every file in the test folder (Windows-style path separator)
        dataset_list = tf.data.Dataset.list_files(test_dir + '\\*')
        for i in range(100):
            # list_files shuffles by default, so this picks a random file each time
            image = next(iter(dataset_list))
            image = tf.io.read_file(image)
            image = tf.io.decode_jpeg(image, channels=3)
            image = tf.image.resize(image, (500, 500))
            image = tf.cast(image / 255., tf.float32)
            image = tf.expand_dims(image, 0)
            # Model has only one input so each data point has one element
            yield [image]

    keras_model = 'Intel_1.h5'

    # Load the saved model; tf.compat.v1 is for compatibility with TF 1.15
    converter = tf.compat.v1.lite.TFLiteConverter.from_keras_model_file(keras_model)

    # This enables quantization
    converter.optimizations = [tf.lite.Optimize.DEFAULT]

    # This ensures that if any ops can't be quantized, the converter throws an error
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

    # Set the input and output tensors to uint8
    converter.inference_input_type = tf.uint8
    converter.inference_output_type = tf.uint8

    # Set the representative dataset for the converter so we can quantize the activations
    converter.representative_dataset = representative_data_gen

    tflite_model = converter.convert()

    # Write the quantized TFLite model to a file
    with open('Intel_1.tflite', 'wb') as f:
        f.write(tflite_model)

    print("TFLite conversion complete")
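Because the converter sets the input and output tensors to `uint8`, the deployed model expects quantized values rather than floats. The arithmetic behind that mapping is `q = round(x / scale) + zero_point`, with the inverse `x = scale * (q - zero_point)`. The sketch below illustrates it in plain Python. The `scale` and `zero_point` values are hypothetical; for a real model they would be read from the interpreter's input and output details (the `quantization` entry of `get_input_details()`), and the function names are made up for this example.

```python
def quantize_to_uint8(values, scale, zero_point):
    """Map float values to uint8 using q = round(x / scale) + zero_point."""
    quantized = []
    for x in values:
        q = int(round(x / scale)) + zero_point
        quantized.append(max(0, min(255, q)))  # clamp to the uint8 range
    return quantized


def dequantize_from_uint8(values, scale, zero_point):
    """Map uint8 values back to floats using x = scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in values]


# Hypothetical parameters for an input that was normalized to [0, 1]
scale, zero_point = 1.0 / 255.0, 0

pixels = [0.0, 0.2, 1.0]
quantized_pixels = quantize_to_uint8(pixels, scale, zero_point)
restored_pixels = dequantize_from_uint8(quantized_pixels, scale, zero_point)
print(quantized_pixels, restored_pixels)
```

The same two formulas apply to the model output: the `uint8` scores returned by the interpreter only become probabilities or logits again after dequantizing them with the output tensor's own scale and zero point.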
