Today I’m going to show you how to set up a webpage that does real-time face tracking in any browser, on mobile or desktop. I’m doing everything in HTML and JavaScript so the result is portable across devices. I’ll also show some working, easily extended demos for face tracking, a basic Snapchat filter, and scientific applications.

All demos can be found here: facemeshmedium.netlify.app/

About the Model

With Google’s rapid development of TensorFlow.js, machine learning on the web has never been easier. We’re going to be using Google MediaPipe’s Face Mesh model for all of our face-tracking needs.

Google Research has a subdivision called MediaPipe which focuses on making machine learning models run cross-device. This lets them run predictions on anything from a big, chunky desktop to a smartphone with no GPU (main site here). Face Mesh is their face tracking model, which takes in a camera frame and outputs 468 labeled landmarks for each detected face. It also groups the landmarks by facial region (“Upper Lip,” “Left Eye,” etc.) and includes a bounding box for the face in the output.
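To make that output concrete, here is a minimal sketch of reading a single face prediction. The field names (`boundingBox`, `scaledMesh`, `annotations`) and the region key `lipsUpperOuter` are modeled on the TensorFlow.js Face Mesh output as I understand it, so treat them as assumptions and check the object your version actually returns. The example prediction is made up and only has three landmarks instead of 468.

```javascript
// Hypothetical prediction, shaped like the output described above.
// Field names and values are assumptions for illustration only.
const examplePrediction = {
  boundingBox: { topLeft: [100, 50], bottomRight: [300, 290] },
  // Each landmark is [x, y, z]; a real face would have 468 of these.
  scaledMesh: [[120, 80, -4], [200, 150, -12], [280, 260, 2]],
  // Landmarks grouped by facial region.
  annotations: { lipsUpperOuter: [[180, 220, -6], [220, 220, -10]] },
};

// Width and height of the face's bounding box, in pixels.
function boundingBoxSize(prediction) {
  const [x1, y1] = prediction.boundingBox.topLeft;
  const [x2, y2] = prediction.boundingBox.bottomRight;
  return { width: x2 - x1, height: y2 - y1 };
}

// Average [x, y, z] position of all landmarks in one named region,
// or null if the region is missing or empty.
function regionCentroid(prediction, regionName) {
  const points = prediction.annotations[regionName];
  if (!points || points.length === 0) return null;
  const sum = [0, 0, 0];
  for (const [x, y, z] of points) {
    sum[0] += x;
    sum[1] += y;
    sum[2] += z;
  }
  return sum.map((v) => v / points.length);
}

const faceSize = boundingBoxSize(examplePrediction);
const lipCenter = regionCentroid(examplePrediction, 'lipsUpperOuter');
```

A region centroid like `lipCenter` is a handy anchor point if you want to pin an image (say, a mustache) to a part of the face.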

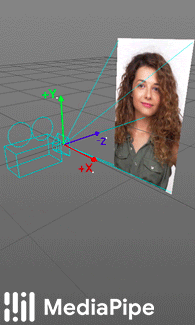

Oh, and did I mention that the landmarks it gives you are in 3D coordinates — with depth for the face?! How dope is that?

Face Mesh also outputs the relative depth of each region of your face (link)
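Because the depth is relative rather than a real-world distance, it is usually easiest to rescale it before using it. Here is a small sketch, assuming each landmark is an `[x, y, z]` array, that maps the z values of one face onto a 0 to 1 range you could use for shading. The helper name `depthToShade` is mine, not part of any library, and which end of the range is nearer the camera depends on the model's sign convention.

```javascript
// Rescale the z (depth) value of every landmark to the range [0, 1].
// 0 = smallest z in this face, 1 = largest z in this face.
// Assumes landmarks is a non-empty array of [x, y, z] arrays.
function depthToShade(landmarks) {
  const depths = landmarks.map(([, , z]) => z);
  const min = Math.min(...depths);
  const max = Math.max(...depths);
  const range = max - min;
  // If every landmark has the same depth, return a flat mid-gray.
  return depths.map((z) => (range === 0 ? 0.5 : (z - min) / range));
}

// Made-up landmarks for illustration.
const shades = depthToShade([
  [10, 10, -10],
  [20, 20, 0],
  [30, 30, 10],
]);
// shades is [0, 0.5, 1]
```

You could multiply each shade by 255 and use it as a gray level when drawing the landmark on a canvas.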

If you scroll down on Face Mesh’s page, you may be wondering why I’m writing this guide; MediaPipe already provides HTML examples using Face Mesh. There are two reasons for this:

- Their examples don’t work on mobile browsers due to a difference in the way video streams are handled on mobile and desktop JavaScript.

- The drawing and editing libraries are in this
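On the first point, here is a minimal sketch of requesting a camera stream in a way that tends to behave on mobile browsers as well as desktop. It is not necessarily the whole story behind the problem described above. It covers one well-known mobile quirk: iOS Safari expects the `playsinline` attribute (and a muted video) before it will play a stream inline instead of going fullscreen or refusing to autoplay. The helper names `buildCameraConstraints` and `startCamera` are hypothetical.

```javascript
// Build getUserMedia constraints. 'user' asks for the front (selfie) camera,
// 'environment' for the rear camera; desktops typically ignore the hint.
function buildCameraConstraints(preferFrontCamera = true) {
  return {
    audio: false,
    video: { facingMode: preferFrontCamera ? 'user' : 'environment' },
  };
}

// Attach the camera stream to a <video> element and start playback.
// Must run in a browser, on a page served over HTTPS or localhost.
async function startCamera(videoElement) {
  // Without these, iOS Safari may open the video fullscreen or block autoplay.
  videoElement.setAttribute('playsinline', '');
  videoElement.muted = true;

  const stream = await navigator.mediaDevices.getUserMedia(
    buildCameraConstraints()
  );
  videoElement.srcObject = stream;
  await videoElement.play();
  return stream;
}
```

In a page you would call something like `startCamera(document.querySelector('video'))` from a click or page-load handler, then hand the video element to the model once it is playing.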
