The success of AlexNet on a popular image classification benchmark brought Deep Learning into the spotlight in 2012. Since then, it has achieved extraordinary results in numerous domains.

Most notably, deep learning has had an exceptional impact on computer vision, speech recognition, and natural language processing (NLP), single-handedly bringing AI back to life.

On the surface, one could assign credit to large datasets and the wide availability of computing resources. But from a fundamental standpoint, these only provide the fuel that lets neural networks go the extra mile.

What helped neural networks make sense of huge datasets?

What exactly are the few but extraordinary developments in the last decade that made neural networks the ultimate learning machines?

Back in 1998, Yann LeCun et al. published a chapter titled “Efficient BackProp”. To train neural networks efficiently, the authors proposed a few practical guidelines.

These guidelines emphasize stochastic learning, shuffling of examples, input normalization, the choice of activation functions, network initialization, and adaptive learning rates.
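Two of these guidelines, shuffling examples and normalizing inputs, are easy to illustrate. The following is a minimal sketch in plain Python, not the chapter's own code; the helper names `standardize` and `shuffled_epochs` are hypothetical and chosen only for illustration.

```python
import random
import statistics


def standardize(column):
    """Rescale one input feature to zero mean and unit variance.

    Assumes the feature is not constant (otherwise the standard
    deviation is zero and the division fails).
    """
    mean = statistics.fmean(column)
    std = statistics.pstdev(column)
    return [(value - mean) / std for value in column]


def shuffled_epochs(examples, epochs, seed=0):
    """Yield the training examples in a fresh random order for each epoch."""
    rng = random.Random(seed)
    for _ in range(epochs):
        order = list(examples)  # copy, so the caller's list is untouched
        rng.shuffle(order)
        yield order


# Hypothetical toy data: one feature and five example ids.
feature = [1.0, 2.0, 3.0, 4.0, 5.0]
normalized = standardize(feature)

example_ids = [0, 1, 2, 3, 4]
for epoch, order in enumerate(shuffled_epochs(example_ids, epochs=2)):
    print(f"epoch {epoch}: {order}")
```

In a real pipeline the mean and standard deviation would typically be computed on the training set only and then reused for validation and test data.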

Unfortunately, there were no standardized tools to apply these ideas out of the box. It was extremely difficult to get a deep network to converge, let alone generalize well to unseen data.

Fortunately, these guidelines paved the way for more formal tools in the years that followed.

What is it that neural networks were struggling with?

Many of the difficulties in training deep neural networks stem from poor network initialization and from too little gradient signal reaching the lower layers.

Hinton et al. and Bengio et al. explored the idea of greedy layer-wise training. Two remarkable papers that stand out in this paradigm are “A Fast Learning Algorithm for Deep Belief Nets” and “Greedy Layer-Wise Training of Deep Networks”. Both papers managed to train much deeper networks for the first time.

Unfortunately, these approaches still require the extra effort of training the network layer by layer, followed by fine-tuning of the entire network.

To attain the magnitude of success we see today with Deep Learning, there was a need to develop formal techniques and not just hacks.

We needed tools that work across domains and in a wide range of settings. We needed an ecosystem to train neural networks end-to-end without bells and whistles.

Fortunately, with all the guiding principles and the extraordinary efforts of the elite few, we have moved past the tipping point where deep learning can show its true potential.

The following are a few but vital developments in the Deep Learning ecosystem that made it a staple of every engineer’s toolkit.

Activation Function

Non-linearity is the powerhouse of neural networks. Without it, neural networks lack expressiveness and simply boil down to a linear transformation of input data.
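To make the "boils down to a linear transformation" point concrete, here is a small sketch in plain Python. The weights and inputs are hypothetical values picked for illustration, and biases are left out for brevity. It shows that two stacked linear layers give the same output as one layer with merged weights, while putting a ReLU between them breaks that equivalence.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def relu(matrix):
    """Apply max(0, v) to every entry."""
    return [[max(0.0, v) for v in row] for row in matrix]


def close(a, b, tol=1e-9):
    """Entry-wise comparison of two matrices of the same shape."""
    return all(abs(x - y) < tol
               for row_a, row_b in zip(a, b)
               for x, y in zip(row_a, row_b))


# Hypothetical weights and a batch of two inputs (one per row).
W1 = [[1.0, -2.0], [0.5, 1.0]]
W2 = [[2.0, 1.0], [-1.0, 3.0]]
X = [[1.0, 2.0], [-3.0, 0.5]]

# Two linear layers with no activation in between ...
stacked = matmul(matmul(X, W1), W2)
# ... match a single linear layer whose weights are W1 @ W2.
merged = matmul(X, matmul(W1, W2))

# With a ReLU in between, the network is no longer a single linear map.
with_relu = matmul(relu(matmul(X, W1)), W2)

print("linear stack equals merged layer:", close(stacked, merged))
print("ReLU stack equals merged layer:  ", close(with_relu, merged))
```

No matter how many purely linear layers are stacked, the same merging argument applies, which is why depth only adds expressiveness once a non-linearity sits between the layers.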

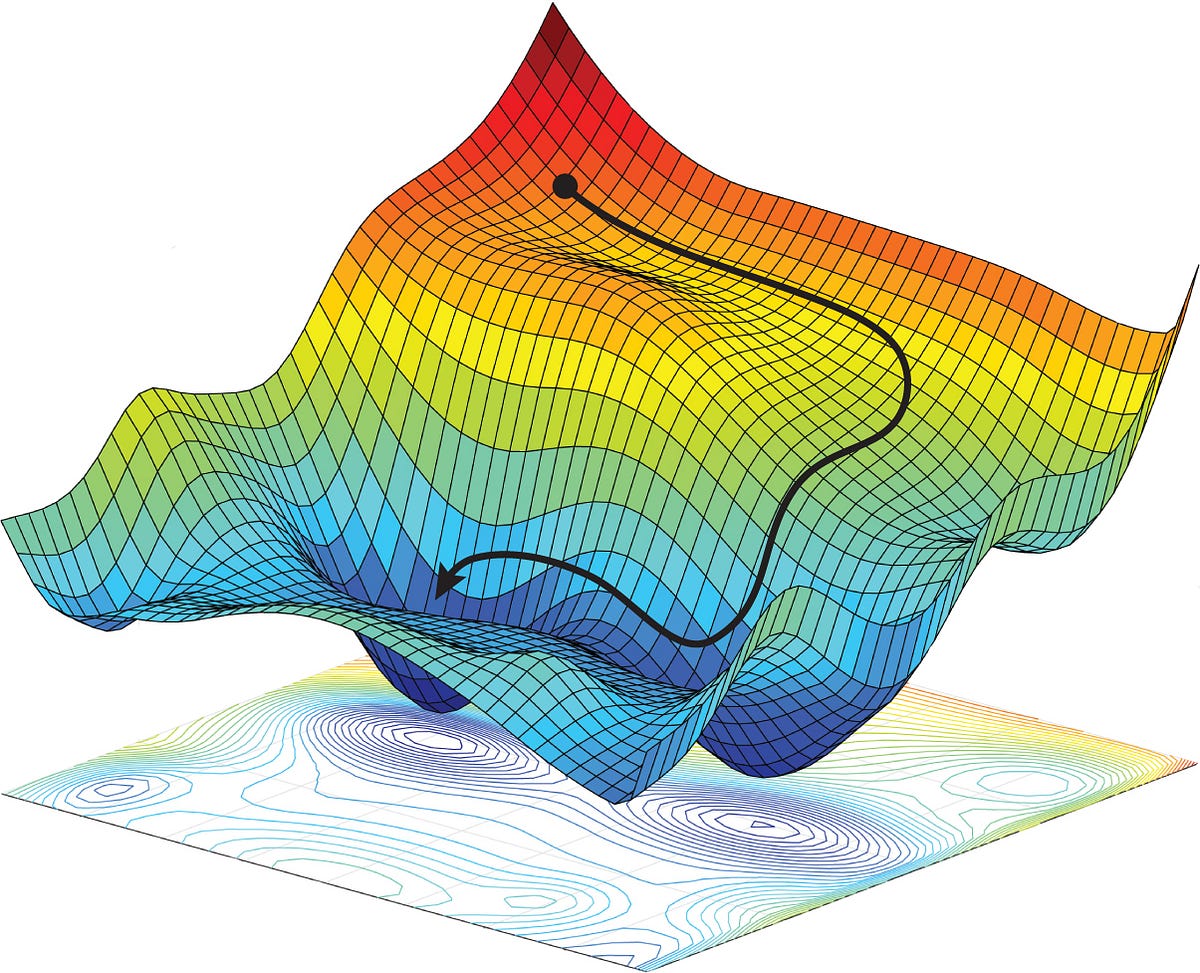

Unfortunately, the activations involving exponentials (sigmoid, hyperbolic tangent, etc.) that were used for so long to provide non-linearity have their issues. Once they reach their saturating zones, their gradients become vanishingly small, which severely inhibits the network’s learning potential.
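Saturation is easy to see numerically. The sketch below, in plain Python with illustrative function names, evaluates the derivatives of sigmoid and tanh at a few input values. The sigmoid derivative peaks at 0.25 for an input of zero and shrinks rapidly as the input moves away from it.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of sigmoid: s(x) * (1 - s(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2."""
    return 1.0 - math.tanh(x) ** 2


# The further the input moves from zero, the closer both
# derivatives get to zero: the unit is "saturated".
for x in (0.0, 2.0, 5.0, 10.0):
    print(f"x={x:5.1f}  sigmoid'={sigmoid_grad(x):.2e}  tanh'={tanh_grad(x):.2e}")
```

A saturated unit passes almost no gradient back to the weights that feed it, so those weights barely change during training.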

Fortunately, AlexNet came to the rescue and demonstrated, on a large-scale benchmark, the largely untapped potential of rectified linear units (ReLU) as activation functions.

ReLU helps to train the network much faster (due to its non-saturating nature) and makes it possible to train much deeper networks in an end-to-end fashion.

ReLU became the default choice of activation in the Deep Learning toolkit. From an optimization viewpoint, it largely mitigates the long-standing vanishing gradient problem, because its gradient stays at 1 for every positive input instead of shrinking.
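A rough way to see the effect on depth is to multiply the local activation derivatives along a chain of layers, as backpropagation does. The sketch below is a deliberate simplification: it ignores the weight matrices entirely and assumes every layer sees the same pre-activation value, and the function names are illustrative only.

```python
import math


def sigmoid_grad(x):
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def chained_gradient(local_grad, pre_activation, depth):
    """Product of `depth` identical local derivatives (weights ignored)."""
    return local_grad(pre_activation) ** depth


depth = 20
# Best case for sigmoid: every unit sits at x = 0, where the derivative is 0.25.
sigmoid_chain = chained_gradient(sigmoid_grad, 0.0, depth)
# Active ReLU units pass the gradient through unchanged.
relu_chain = chained_gradient(relu_grad, 1.0, depth)

print(f"sigmoid, {depth} layers: {sigmoid_chain:.2e}")
print(f"ReLU,    {depth} layers: {relu_chain:.2e}")
```

Even in its best case the sigmoid chain shrinks by a factor of four per layer, while active ReLU units leave the gradient intact. The flip side is that a ReLU unit with a negative input has a derivative of exactly zero and passes no gradient at all.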
