In this post, we will show you how to train Detectron2 on Gradient to detect custom objects, in this case flowers. We will show you how to label a custom dataset and how to retrain your model. After training, we will try to launch an inference server with an API on Gradient.

Reference repo: https://github.com/Paperspace/object-detection-segmentation

Article Outline

- Overview of Detectron2

- Overview of building your custom dataset

- How to label your custom dataset

- Register custom Detectron2 object detection data

- Run Detectron2 training on Gradient

- Run Detectron2 inference on Gradient

Overview of Detectron2

Detectron2 is a popular PyTorch-based modular computer vision model library. It is the second iteration of Detectron, which was originally written in Caffe2. Detectron2 lets you plug custom state-of-the-art computer vision technologies into your workflow. Quoting the Detectron2 release blog:

"Detectron2 includes all the models that were available in the original Detectron, such as Faster R-CNN, Mask R-CNN, RetinaNet, and DensePose. It also features several new models, including Cascade R-CNN, Panoptic FPN, and TensorMask, and we will continue to add more algorithms. We’ve also added features such as synchronous Batch Norm and support for new datasets like LVIS."

Overview of building your custom dataset

Download Dataset

https://public.roboflow.com/classification/flowers_classification

Roboflow: Computer Vision dataset management tool

We will be training our custom Detectron2 detector on public flower detection data hosted for free at Roboflow.

The Flowers dataset contains images of various flower species, such as dandelions and daisies. Notably, flower detection is not a capability available in Detectron2 out of the box; we need to train the underlying networks to fit our custom task.

Label your Dataset with LabelImg

https://github.com/tzutalin/labelImg

Accurately labeled data is essential to successful machine learning, and computer vision is no exception.

In this section, we’ll demonstrate how you can use LabelImg to get started with labeling your own data for object detection models.

About LabelImg

LabelImg is a free, open source tool for graphically labeling images. It’s written in Python and uses Qt for its graphical interface. It’s an easy, free way to label a few hundred images to try out your next project.

Installing LabelImg

sudo apt-get install pyqt5-dev-tools

sudo pip3 install -r requirements/requirements-linux-python3.txt

make qt5py3

python3 labelImg.py

python3 labelImg.py [IMAGE_PATH] [PRE-DEFINED CLASS FILE]

Labeling Images with LabelImg

LabelImg supports labeling in VOC XML or YOLO text file format. At Paperspace, we strongly recommend you use the default VOC XML format for creating labels. Thanks to ImageNet, VOC XML is a more universal standard for object detection, whereas various YOLO implementations have slightly different text file formats.

Open your desired set of images by selecting “Open Dir” on the left-hand side of LabelImg.

To start a label, press w and draw the bounding box. Then press Ctrl+S (or Command+S) to save the label. Press d to go to the next image and a to go back to the previous one.

Convert VOC XML into a COCO dataset JSON

In the datasets directory of https://github.com/Paperspace/object-detection-segmentation you will find a coco_labelme.py script that creates a COCO dataset JSON file from the XML files we generated earlier.

To run it:

python3 datasets/coco_labelme.py /path_to_your_images/ --output="trainval.json"
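To make the conversion less of a black box, here is a minimal sketch of what going from VOC XML to COCO JSON involves. It is not the repository's coco_labelme.py script. It assumes LabelImg-style XML with `filename`, `size`, and `object`/`bndbox` elements, and the function name `voc_to_coco` and the sample file name are illustrative. It emits bounding boxes only, so mask training would additionally need a `segmentation` field.

```python
import xml.etree.ElementTree as ET


def voc_to_coco(xml_documents):
    """Convert an iterable of VOC XML strings into one COCO-style dict.

    Hypothetical helper for illustration; category ids are assigned
    alphabetically by class name, starting at 1.
    """
    roots = [ET.fromstring(doc) for doc in xml_documents]
    names = sorted({obj.findtext("name") for r in roots for obj in r.iter("object")})
    cat_ids = {name: i for i, name in enumerate(names, start=1)}

    coco = {
        "images": [],
        "annotations": [],
        "categories": [{"id": i, "name": n} for n, i in cat_ids.items()],
    }
    for image_id, root in enumerate(roots, start=1):
        size = root.find("size")
        coco["images"].append({
            "id": image_id,
            "file_name": root.findtext("filename"),
            "width": int(size.findtext("width")),
            "height": int(size.findtext("height")),
        })
        for obj in root.iter("object"):
            box = obj.find("bndbox")
            xmin, ymin = int(box.findtext("xmin")), int(box.findtext("ymin"))
            xmax, ymax = int(box.findtext("xmax")), int(box.findtext("ymax"))
            w, h = xmax - xmin, ymax - ymin
            coco["annotations"].append({
                "id": len(coco["annotations"]) + 1,
                "image_id": image_id,
                "category_id": cat_ids[obj.findtext("name")],
                "bbox": [xmin, ymin, w, h],  # COCO boxes are [x, y, width, height]
                "area": w * h,
                "iscrowd": 0,
            })
    return coco


# Made-up sample annotation in the layout LabelImg writes.
SAMPLE_XML = """<annotation>
  <filename>daisy_001.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object><name>daisy</name>
    <bndbox><xmin>10</xmin><ymin>20</ymin><xmax>110</xmax><ymax>220</ymax></bndbox>
  </object>
</annotation>"""

coco = voc_to_coco([SAMPLE_XML])
```

The key step is the box conversion: VOC stores corner coordinates (xmin, ymin, xmax, ymax), while COCO stores the top-left corner plus width and height.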

Upload your custom dataset

Now we have to upload our custom dataset to an S3 bucket.

See the AWS documentation for instructions on how to use the AWS CLI or the web console to upload your data to S3.
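If you would rather script the upload from Python than use the AWS CLI or the web console, a sketch along these lines may help. It swaps in boto3, which this post does not otherwise use, and relies on the S3 client's `upload_file(Filename, Bucket, Key)` method. The helper name `upload_directory`, the bucket name, and the prefix are all hypothetical.

```python
from pathlib import Path


def upload_directory(s3_client, local_dir, bucket, prefix=""):
    """Upload every file under local_dir to the bucket, preserving relative paths.

    Hypothetical helper; s3_client is anything exposing
    upload_file(Filename, Bucket, Key), such as boto3.client("s3").
    Returns the list of object keys that were uploaded.
    """
    uploaded = []
    root = Path(local_dir)
    for path in sorted(root.rglob("*")):
        if path.is_file():
            key = f"{prefix}{path.relative_to(root).as_posix()}"
            s3_client.upload_file(str(path), bucket, key)
            uploaded.append(key)
    return uploaded


# Usage (assumes `pip install boto3` and configured AWS credentials;
# the bucket name and prefix are placeholders):
#   import boto3
#   upload_directory(boto3.client("s3"), "./data", "my-bucket", prefix="flowers/")
```

Passing the client in as an argument keeps the helper easy to try with a stand-in object before pointing it at a real bucket.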

Register a Dataset

To let Detectron2 know how to obtain a dataset named "my_dataset", you need to implement a function that returns the items in your dataset and then tell Detectron2 about this function:

from detectron2.data import DatasetCatalog

def my_dataset_function():
    dataset_dicts = []  # fill with one dict per image, in Detectron2's standard dataset format
    ...
    return dataset_dicts

DatasetCatalog.register("my_dataset", my_dataset_function)

# later, to access the data:
data = DatasetCatalog.get("my_dataset")  # List[Dict]

Here, the snippet associates a dataset named “my_dataset” with a function that returns the data. The function must return the same data if called multiple times. The registration stays effective until the process exits.
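To make the expected return value concrete, here is a sketch of a dataset function that returns a single hard-coded record with the fields commonly used for detection in Detectron2's standard dataset format. The file path, image size, and box are made up. In real code you would set `bbox_mode` with `BoxMode.XYXY_ABS` from `detectron2.structures`; the sketch uses that enum member's integer value so it runs without Detectron2 installed.

```python
def my_dataset_function():
    """Return one dict per image in Detectron2's standard dataset format."""
    # Hypothetical, hard-coded record; a real function would parse annotation files.
    return [
        {
            "file_name": "./data/images/daisy_001.jpg",
            "image_id": 0,
            "height": 480,
            "width": 640,
            "annotations": [
                {
                    "bbox": [10.0, 20.0, 110.0, 220.0],  # x0, y0, x1, y1 in pixels
                    "bbox_mode": 0,  # integer value of BoxMode.XYXY_ABS
                    "category_id": 0,  # contiguous, 0-based class index
                }
            ],
        }
    ]


records = my_dataset_function()
# Calling the function again must produce the same data.
assert records == my_dataset_function()
```

Because the records are built from fixed inputs, repeated calls return equal data, which is the property the registration relies on.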

Because our dataset is already in COCO format, we can register it with register_coco_instances, following the Detectron2 custom dataset tutorial:

from detectron2.data.datasets import register_coco_instances

register_coco_instances("my_dataset", {}, "./data/trainval.json", "./data/images")

Each dataset is associated with some metadata. In our case, you can access it by calling my_dataset_metadata = MetadataCatalog.get("my_dataset") (MetadataCatalog is imported from detectron2.data), which returns:

Metadata(evaluator_type='coco', image_root='./data/images', json_file='./data/trainval.json', name='my_dataset', thing_classes=['type1', 'type2', 'type3'], thing_dataset_id_to_contiguous_id={1: 0, 2: 1, 3: 2})
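The thing_dataset_id_to_contiguous_id entry exists because COCO category ids can start at 1 or have gaps, while the model's classifier works with labels 0 to N-1. A minimal sketch of the idea, assuming ids are assigned in sorted order (which matches the output shown above); `contiguous_id_map` is an illustrative name, not a Detectron2 function:

```python
def contiguous_id_map(coco_category_ids):
    """Map arbitrary COCO category ids to 0-based contiguous ids, in sorted order."""
    return {cat_id: i for i, cat_id in enumerate(sorted(coco_category_ids))}


# Ids as they might appear in trainval.json, in any order.
mapping = contiguous_id_map([3, 1, 2])
print(mapping)
```

The same function also closes gaps: ids 1, 5, and 9 would map to 0, 1, and 2.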

To get the catalog's internal representation of the dataset, call dataset_dicts = DatasetCatalog.get("my_dataset"). The internal format uses one dict to represent the annotations of one image.

To verify the data loading is correct, let’s visualize the annotations of randomly selected samples in the dataset:

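import random

import cv2
from detectron2.utils.visualizer import Visualizer
from google.colab.patches import cv2_imshow  # Colab notebook helper; use cv2.imshow or matplotlib elsewhere

for d in random.sample(dataset_dicts, 3):
    img = cv2.imread(d["file_name"])
    # OpenCV loads images as BGR; Visualizer expects RGB, so reverse the channel axis.
    visualizer = Visualizer(img[:, :, ::-1], metadata=my_dataset_metadata, scale=0.5)
    vis = visualizer.draw_dataset_dict(d)
    cv2_imshow(vis.get_image()[:, :, ::-1])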
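Alongside the visual check, a quick numeric check can catch missing or mislabeled classes. The sketch below counts annotations per class from a list of dataset dicts. The helper name `count_instances` and the toy records are hypothetical; in practice you would pass the `dataset_dicts` and `my_dataset_metadata.thing_classes` obtained above.

```python
from collections import Counter


def count_instances(dataset_dicts, thing_classes):
    """Count annotations per class name across all images."""
    counts = Counter(
        ann["category_id"]
        for record in dataset_dicts
        for ann in record.get("annotations", [])
    )
    # Report every class, including those with zero instances.
    return {name: counts.get(i, 0) for i, name in enumerate(thing_classes)}


# Toy records standing in for DatasetCatalog.get("my_dataset").
toy_dicts = [
    {"file_name": "a.jpg", "annotations": [{"category_id": 0}, {"category_id": 2}]},
    {"file_name": "b.jpg", "annotations": [{"category_id": 0}]},
    {"file_name": "c.jpg"},  # image without annotations
]
instance_counts = count_instances(toy_dicts, ["type1", "type2", "type3"])
print(instance_counts)
```

A class that shows zero instances usually points to a labeling or conversion problem worth fixing before training.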

Train the model

Now, let’s fine-tune a COCO-pretrained R50-FPN Mask R-CNN model on the my_dataset dataset. Depending on the complexity and size of your dataset, training can take anywhere from five minutes to several hours.

The pretrained model can be downloaded from:

detectron2://COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x/137849600/model_final_f10217.pkl

import os

from detectron2.config import get_cfg
from detectron2.engine import DefaultTrainer

cfg = get_cfg()
cfg.merge_from_file(
    "./detectron2_repo/configs/COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml"
)
cfg.DATASETS.TRAIN = ("my_dataset",)
cfg.DATASETS.TEST = ()  # no evaluation dataset for this run
cfg.DATALOADER.NUM_WORKERS = 2
# initialize from the model zoo
cfg.MODEL.WEIGHTS = "detectron2://COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x/137849600/model_final_f10217.pkl"
cfg.SOLVER.IMS_PER_BATCH = 2
cfg.SOLVER.BASE_LR = 0.02  # lower this if the loss diverges
cfg.SOLVER.MAX_ITER = 300  # 300 iterations seems good enough, but you can certainly train longer
cfg.MODEL.ROI_HEADS.BATCH_SIZE_PER_IMAGE = 128  # faster, and good enough for this toy dataset
cfg.MODEL.ROI_HEADS.NUM_CLASSES = 3  # number of classes in my_dataset

os.makedirs(cfg.OUTPUT_DIR, exist_ok=True)
trainer = DefaultTrainer(cfg)
trainer.resume_or_load(resume=False)  # start from MODEL.WEIGHTS rather than a saved checkpoint
trainer.train()
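When choosing SOLVER.BASE_LR and SOLVER.MAX_ITER for a small batch size, two back-of-the-envelope numbers can help. The sketch below applies the linear scaling heuristic, assuming the reference setting of a 0.02 learning rate at 16 images per batch used by Detectron2's base R-CNN configs, and estimates how many passes over the data a run makes. Both helper names and the 100-image dataset size are hypothetical, and the heuristic is only a starting point, not a guarantee of stable training.

```python
def scaled_lr(ims_per_batch, reference_lr=0.02, reference_batch=16):
    """Scale the learning rate linearly with the batch size (a heuristic)."""
    return reference_lr * ims_per_batch / reference_batch


def approx_epochs(max_iter, ims_per_batch, num_images):
    """Estimate how many passes over the dataset a training run makes."""
    return max_iter * ims_per_batch / num_images


lr_for_batch_2 = scaled_lr(2)
epochs = approx_epochs(max_iter=300, ims_per_batch=2, num_images=100)  # assumed dataset size
print(lr_for_batch_2, epochs)
```

For a batch of 2 the heuristic suggests a rate of about 0.0025, which is the value passed to train_net.py in the Gradient command later in this post.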

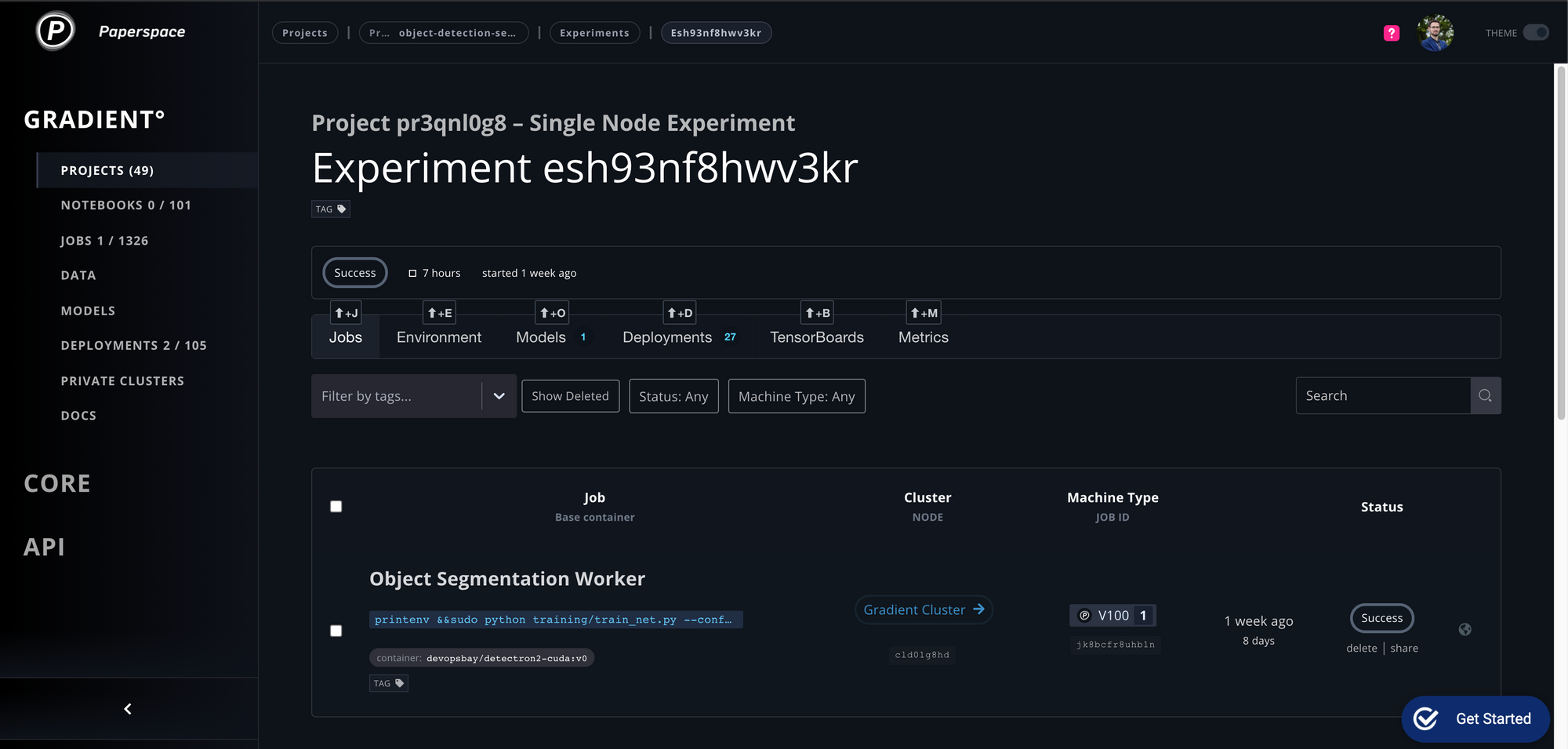

Run Training on Gradient

Gradient CLI Installation

Install the Gradient CLI with pip (see the Gradient docs for details):

pip install gradient

Then obtain an API key and register it with the CLI:

gradient apiKey XXXXXXXXXXXXXXXXXXX

Train on a single GPU

Note: training on a single GPU will take a long time, so be prepared to wait!

gradient experiments run singlenode \
  --name mask_rcnn \
  --projectId <some project> \
  --container devopsbay/detectron2-cuda:v0 \
  --machineType P4000 \
  --command "sudo python training/train_net.py --config-file training/configs/mask_rcnn_R_50_FPN_1x.yaml --num-gpus 1 SOLVER.IMS_PER_BATCH 2 SOLVER.BASE_LR 0.0025 MODEL.WEIGHTS https://dl.fbaipublicfiles.com/detectron2/COCO-Detection/faster_rcnn_R_50_FPN_1x/137257794/model_final_b275ba.pkl OUTPUT_DIR /artifacts/models/detectron" \
  --workspace https://github.com/Paperspace/object-detection-segmentation.git \
  --datasetName my_dataset \
  --datasetUri <Link_to_your_dataset> \
  --clusterId <cluster id>

The dataset is downloaded to the ./data/ directory. Model results are stored in the ./models directory.

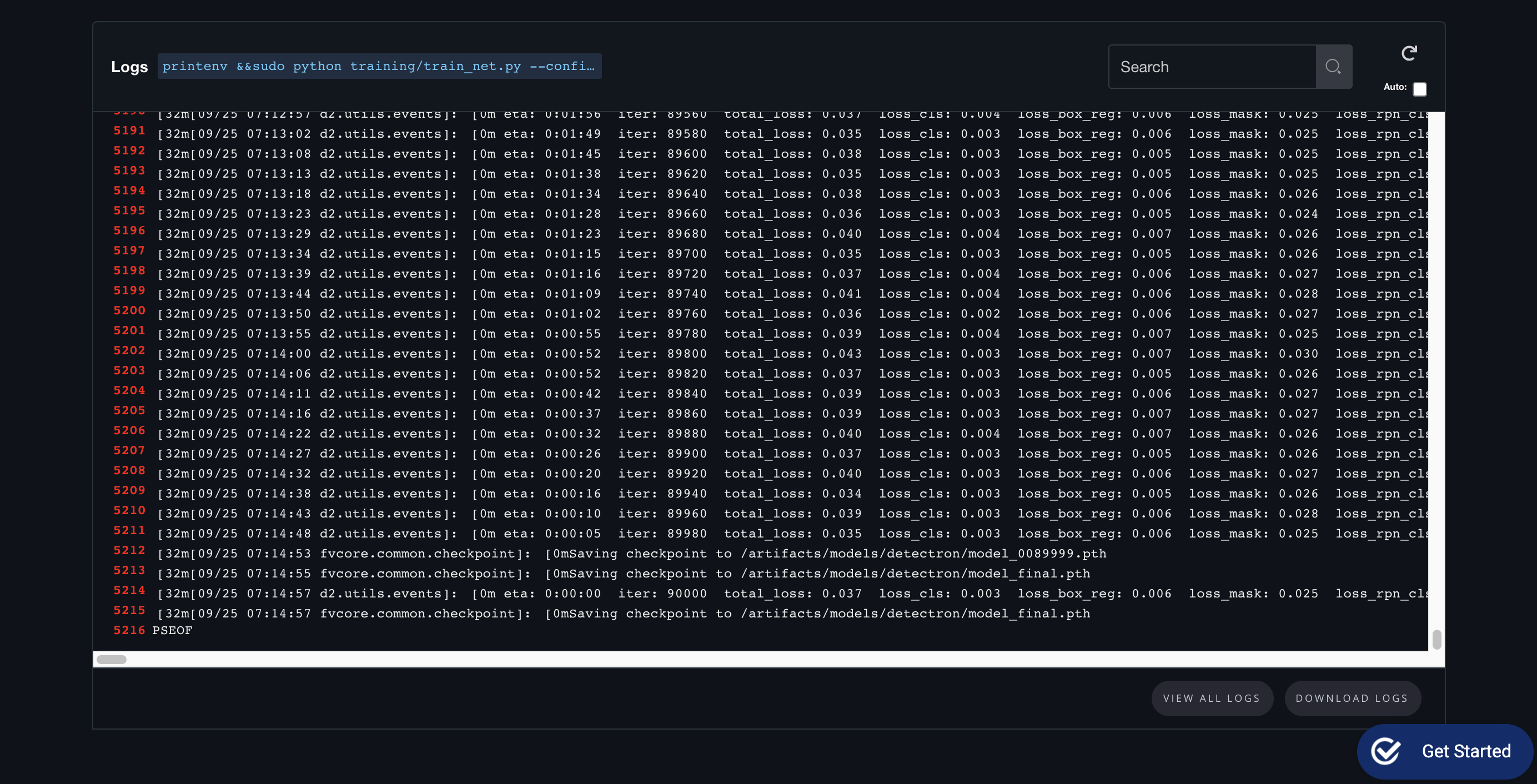

If everything is setup correctly you should see something like this:

Tensorboard Support

Gradient supports TensorBoard out of the box.
