The Curse of Dimensionality is a catchy term coined by mathematician Richard Bellman in his 1957 book “Dynamic Programming”. It refers to the fact that problems can get a lot harder to solve on high-dimensional instances.

Let us start with a question: what is a dimension?

**Dimension**, in very simple terms, means an attribute or feature of a given dataset. A dataset with ten features has ten dimensions.
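To make this concrete, here is a minimal sketch that counts the dimensions of a tiny dataset. The records and feature names (`height_cm`, `weight_kg`, `age`) are made up for illustration only.

```python
# Hypothetical toy dataset: each record is one sample,
# each key is one feature (one dimension).
dataset = [
    {"height_cm": 170, "weight_kg": 65, "age": 30},
    {"height_cm": 182, "weight_kg": 80, "age": 41},
    {"height_cm": 158, "weight_kg": 52, "age": 25},
]

n_samples = len(dataset)        # number of rows
n_dimensions = len(dataset[0])  # number of features per row

print(f"{n_samples} samples, {n_dimensions} dimensions")
```

Adding one more key to every record, for example a hypothetical `income`, would raise the dimensionality from 3 to 4 without adding a single new sample.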

This sounds pretty simple. So why is such a negative word, curse, associated with dimensions? What is the **curse** here?

Let us learn the curse of dimensionality with a general example.

Suppose somebody asks me: what is a machine learning model?

In layman's terms, we give a dataset to the training phase, and the output of the training phase is a model.
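As a rough sketch of "dataset in, model out", the snippet below trains the simplest model I can think of, a straight line fitted by ordinary least squares. The function name `train` and the toy numbers are assumptions made for this example; real training pipelines are far more involved.

```python
def train(features, targets):
    """Training phase: takes a dataset, returns a model (here, a function)."""
    n = len(features)
    mean_x = sum(features) / n
    mean_y = sum(targets) / n
    # Ordinary least squares for a single feature.
    sxx = sum((x - mean_x) ** 2 for x in features)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(features, targets))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x

    def model(x):
        # The trained model: maps a new input to a prediction.
        return slope * x + intercept

    return model


# Hypothetical one-dimensional dataset that follows y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

model = train(xs, ys)    # dataset goes in, model comes out
prediction = model(5.0)  # use the model on an unseen input
print(prediction)
```

Here the dataset has a single dimension, `x`. The rest of this article is about what happens when that number grows.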

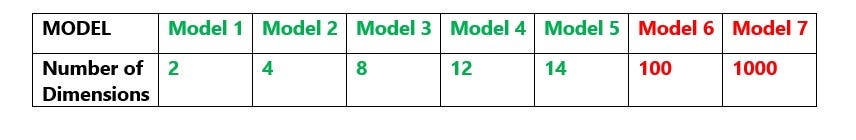

Suppose we have 7 models, each trained on a different number of dimensions, while the goal of the model stays the same across all 7.

What we observe here is that the number of features we give to the training phase to generate the model is increasing exponentially.

So the question arises: what is the relation between the number of dimensions and the model?

Can we say that more features will result in a better model?

The answer is yes, but... oh yes, there is a but here.

We can say that more features result in a better model, but this is true only up to a certain extent. Let's call that extent the threshold.
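The threshold idea can be sketched with a small experiment. Everything below is an assumption made for illustration: the synthetic data, the choice of a 1-nearest-neighbour classifier, the 3 informative features followed by 40 pure-noise features, and the 7 feature counts tried. It is not a general proof, only a toy setup in which features beyond a certain point carry no information.

```python
import random


def make_data(n, n_informative, n_noise, rng):
    """Two classes. Informative features shift with the label; noise features do not."""
    rows = []
    for _ in range(n):
        label = rng.choice([0, 1])
        informative = [rng.gauss(float(label), 1.0) for _ in range(n_informative)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(n_noise)]
        rows.append((informative + noise, label))
    return rows


def predict_1nn(train_rows, x, k):
    """Label of the nearest training point, using only the first k features."""
    nearest = min(
        train_rows,
        key=lambda row: sum((a - b) ** 2 for a, b in zip(row[0][:k], x[:k])),
    )
    return nearest[1]


def accuracy(train_rows, test_rows, k):
    correct = sum(predict_1nn(train_rows, x, k) == y for x, y in test_rows)
    return correct / len(test_rows)


rng = random.Random(0)  # fixed seed so a rerun gives the same numbers
N_INFORMATIVE, N_NOISE = 3, 40  # hypothetical split
train_rows = make_data(50, N_INFORMATIVE, N_NOISE, rng)
test_rows = make_data(200, N_INFORMATIVE, N_NOISE, rng)

# Seven "models", each seeing more features than the one before.
results = {}
for k in (1, 2, 3, 5, 10, 20, 43):
    results[k] = accuracy(train_rows, test_rows, k)
    print(f"{k:2d} features -> test accuracy {results[k]:.2f}")
```

In a setup like this, one would expect accuracy to improve while the added features are informative and then to flatten or drift down as noise features pile up with the training set size held fixed. The exact numbers depend on the seed and the sample sizes, so treat the output as a trend to look for rather than a result.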
