Generative Adversarial Networks (GANs) have been very successful at generating sharp, realistic images. This post briefly explains our GAN-based image generation framework, which composes an image scene sequentially and thereby breaks the underlying problem down into smaller ones. For an in-depth description, please see our publication: A Layer-Based Sequential Framework for Scene Generation with GANs.

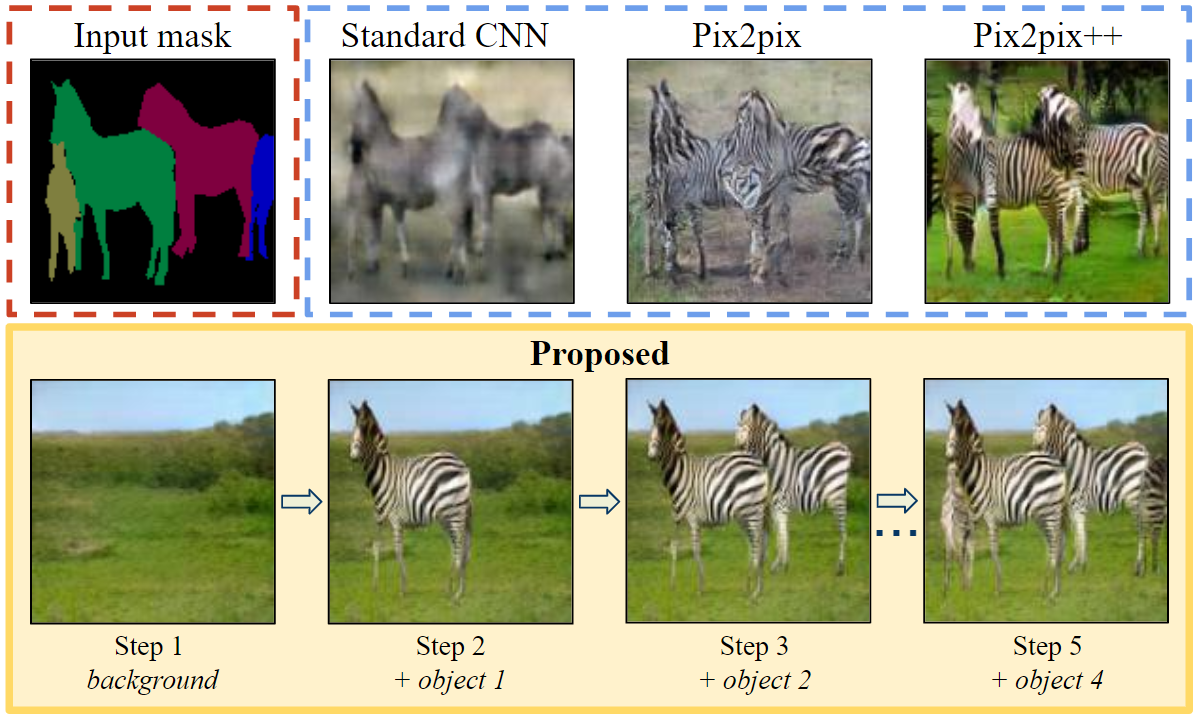

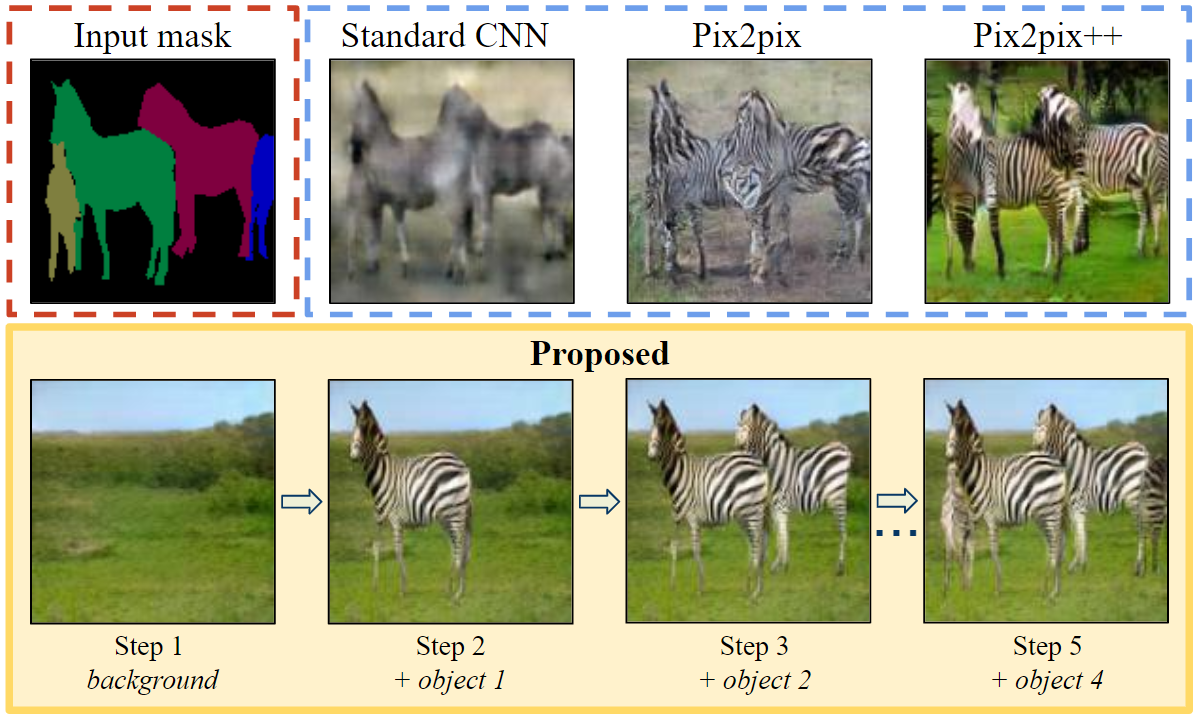

Fig. 1: The proposed image generation process. Given a semantic layout map, our model composes the scene step-by-step. The first row shows the input semantic map and images generated by state-of-the-art baselines.

What is a Generative Adversarial Network (GAN)?

Fig. 2: GAN framework.

A generative adversarial network (GAN) [1] is a class of machine learning frameworks in which two neural networks, (i) a generator and (ii) a discriminator, compete with each other in a game-theoretic scenario. The generator takes random noise as input and generates a fake sample. The discriminator attempts to distinguish between samples drawn from the training dataset (real samples, e.g. hand-written digit images) and samples produced by the generator (fake samples). This game drives the discriminator to learn to correctly classify samples as real or fake. Simultaneously, the generator attempts to fool the discriminator into believing its samples are real. At convergence, the generator's samples are indistinguishable from the training data. For more details, please see the original paper or this post. GANs can be used for image generation; they are able to learn to generate sharp and realistic image data.
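The two competing objectives can be made concrete with a small numerical sketch. It assumes the discriminator outputs a probability that a sample is real, and it uses the binary cross-entropy losses that are common in GAN implementations, together with the widely used non-saturating generator loss. The function names are illustrative and are not taken from our publication.

```python
import math

EPS = 1e-12  # keeps log() finite if a probability is exactly 0 or 1


def discriminator_loss(d_real, d_fake):
    """Loss the discriminator minimizes.

    d_real: discriminator probabilities ("is real") on training samples.
    d_fake: discriminator probabilities ("is real") on generated samples.
    """
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# A confident discriminator: real samples score high, fakes score low.
print(discriminator_loss([0.9, 0.8], [0.1, 0.2]))
print(generator_loss([0.1, 0.2]))

# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
balanced = [0.5, 0.5]
print(discriminator_loss(balanced, balanced))  # approximately 2 * ln 2
print(generator_loss(balanced))                # approximately ln 2
```

In this sketch, the generator's loss falls as the discriminator's scores on fake samples rise, which is the sense in which the generator "fools" the discriminator.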

Single-shot image generation limits user control over the generated elements

Fig. 3: Different scaling of the foreground object.

The automatic image generation problem has been studied extensively in the GAN literature [2,3,4]. It has mostly been addressed as learning a mapping from a single source, e.g. noise or a semantic map, to a target, e.g. images of zebras. This formulation severely restricts the ability to control scene elements individually. For instance, it is difficult to change the appearance or shape of one zebra while keeping the rest of the image scene unaltered. Fig. 3 illustrates this: when we change the object size, the background also changes, even though the input noise is the same for each row.

Our approach: Layer-based Sequential Image Generation

Our main idea resembles how a landscape painter first sketches out the overall structure and then gradually embellishes the scene with other elements. For example, the painting could start with mountain ranges or rivers as background, while trees and animals are added sequentially as foreground instances.

The main objective is broken down into two simpler sub-tasks. First, we generate the background canvas **x0** with the background generator Gbg, conditioned on noise. Second, we sequentially add foreground objects with the foreground generator Gfg to reach the final image xT, which contains the intended T foreground objects on the canvas (T is not fixed). Our model gives the user control over which objects to generate, as well as their category, location, shape, and appearance.
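The control flow of this two-stage process can be sketched in a few lines. This is a toy illustration, not our implementation: the generators here are hand-written stand-ins rather than trained networks, and the `ObjectSpec` structure, the way the foreground generator receives the previous canvas, and all function names are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, List

Image = List[List[float]]  # toy grayscale image as nested lists


@dataclass
class ObjectSpec:
    """User controls for one foreground object (hypothetical structure)."""
    category: str
    mask: List[List[int]]  # 1 where the object goes: encodes location and shape
    appearance: float      # stand-in for an appearance noise vector


def toy_background_generator(noise: float, size: int = 4) -> Image:
    """Stand-in for Gbg: fills the canvas with a value derived from the noise."""
    return [[noise] * size for _ in range(size)]


def toy_foreground_generator(canvas: Image, spec: ObjectSpec) -> Image:
    """Stand-in for Gfg: draws one object inside its mask, keeps the rest."""
    return [
        [spec.appearance if m else pixel for pixel, m in zip(row, mask_row)]
        for row, mask_row in zip(canvas, spec.mask)
    ]


def compose_scene(
    g_bg: Callable[[float], Image],
    g_fg: Callable[[Image, ObjectSpec], Image],
    noise: float,
    specs: List[ObjectSpec],
) -> List[Image]:
    """Return the intermediate canvases [x0, x1, ..., xT]."""
    canvas = g_bg(noise)             # x0: background only
    steps = [canvas]
    for spec in specs:               # T = len(specs) is not fixed
        canvas = g_fg(canvas, spec)  # add one foreground object per step
        steps.append(canvas)
    return steps


def box_mask(top: int, left: int, height: int, width: int, size: int = 4):
    """Rectangular mask helper used only for this example."""
    return [
        [1 if top <= r < top + height and left <= c < left + width else 0
         for c in range(size)]
        for r in range(size)
    ]


specs = [
    ObjectSpec("zebra", box_mask(0, 0, 2, 2), appearance=0.9),
    ObjectSpec("tree", box_mask(2, 2, 2, 2), appearance=0.5),
]
steps = compose_scene(toy_background_generator, toy_foreground_generator, 0.1, specs)
final_image = steps[-1]  # xT with T = 2
for row in final_image:
    print(row)
```

In this toy version, editing one `ObjectSpec` changes only the pixels inside that object's mask, which mirrors the kind of per-object control the layered formulation is meant to provide.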
