In this post, you will learn the concepts behind neural networks with the help of mathematical model examples. Put simply, you will learn how to represent a neural network using mathematical equations. As a data scientist or machine learning researcher, it helps to get a sense of how a neural network can be converted into a set of mathematical equations for calculating different values. A good understanding of how to represent the activation function output of the computation units (nodes, or neurons) in different layers also makes the backpropagation algorithm easier to follow. Backpropagation will be covered in a future post.

Single Layer Neural Network (Perceptron)

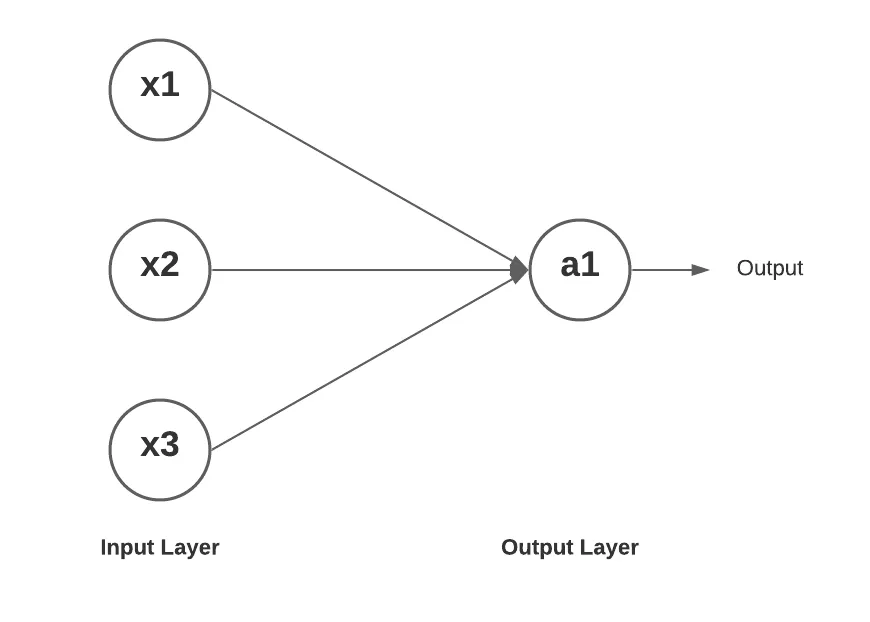

Here is what a single layer neural network looks like. You may want to check out my post on the Perceptron: Perceptron explained with Python example.

In the above equation, the superscript of a weight represents the layer, and the subscript identifies the connection between an input node and an output node. Thus, \(\theta^{(1)}_{12}\) represents the weight in the first layer for the connection between node 1 in the next layer and node 2 in the current layer.
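To make the indexing convention concrete, here is a minimal Python sketch of how the output of a single layer could be computed from its weights. It is an illustration under stated assumptions, not the exact model from the figure: the sigmoid activation, the helper names `sigmoid` and `layer_output`, and the weight and input values are all hypothetical, and no separate bias term is modelled. Because Python lists are 0-indexed, `theta_1[0][1]` plays the role of \(\theta^{(1)}_{12}\).

```python
import math


def sigmoid(z):
    """Logistic activation (assumed here; the post does not fix an activation)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_output(theta, x, activation=sigmoid):
    """Return the activations of the next layer.

    theta[j][i] is the weight on the connection from node i + 1 in the
    current layer to node j + 1 in the next layer, i.e. theta_{(j+1)(i+1)}.
    """
    outputs = []
    for row in theta:
        # Weighted sum of the inputs feeding this next-layer node.
        z = sum(w * x_i for w, x_i in zip(row, x))
        outputs.append(activation(z))
    return outputs


# Hypothetical first-layer weights: one output node, three input nodes.
theta_1 = [[0.5, -0.25, 0.1]]
# Hypothetical input values for the three input nodes.
x = [1.0, 2.0, 3.0]

a = layer_output(theta_1, x)
print(a)  # a single activation value between 0 and 1
```

Each row of `theta_1` belongs to one node in the next layer, so adding more output nodes only means adding more rows.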
