Generative models have been an important component of machine learning for the last few decades. With the emergence of deep learning, generative models began to be combined with deep neural networks, creating the field of deep generative models (DGMs). DGMs hold a lot of promise for deep learning because they can synthesize new data from observations. This capability could prove key to improving the training of large-scale models without requiring large amounts of data. Recently, Microsoft Research unveiled three new projects looking to advance research in DGMs.

One of the biggest questions surrounding DGMs is whether they can be applied to large-scale datasets. In recent years, we have seen plenty of examples of DGMs applied at a relatively small scale. However, the deep learning field is gravitating towards a “bigger is better” philosophy when it comes to data, and we regularly see new models trained on unfathomably big datasets. Building DGMs that can operate at that scale is one of the most active areas of research in the space and the focus of the Microsoft Research projects.

Types of DGMs

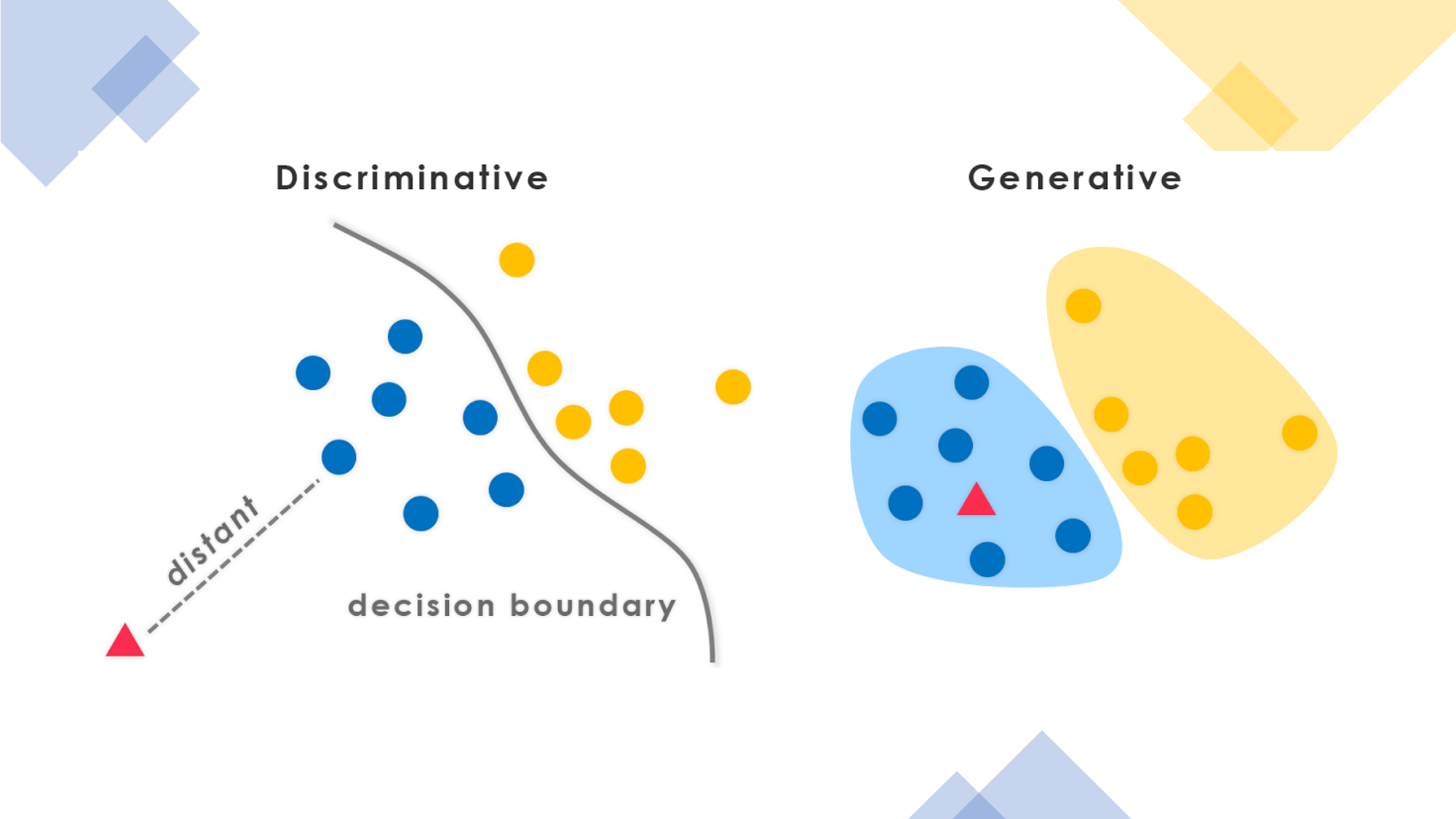

A good way to understand DGMs is to contrast them with their best-known complement: discriminative models. Often described as siblings, generative and discriminative models encompass the different ways in which we learn about the world. Conceptually, generative models attempt to generalize everything they see, whereas discriminative models learn the unique properties that distinguish what they see. Both discriminative and generative models have strengths and weaknesses. Discriminative algorithms tend to perform incredibly well in classification tasks involving high-quality datasets. However, generative models have the unique advantage that they can create new data similar to existing data, and they operate very efficiently in environments that lack large amounts of labeled data.
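To make the contrast concrete, here is a minimal sketch in Python with NumPy. It is a toy, non-deep illustration and not taken from the Microsoft Research projects: the data, the function names (`fit_generative`, `sample`, `fit_discriminative`, `predict_discriminative`), and the choice of one Gaussian per class are all assumptions made for this example. The generative side models how each class is distributed and can therefore draw new points; the discriminative side only learns a boundary between the classes.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy labeled data (made up for illustration): two 2-D classes.
x0 = rng.normal(loc=[-2.0, 0.0], scale=1.0, size=(200, 2))
x1 = rng.normal(loc=[2.0, 0.0], scale=1.0, size=(200, 2))
X = np.vstack([x0, x1])
y = np.array([0] * 200 + [1] * 200)


def fit_generative(X, y):
    """Generative view: model p(x | y) as one Gaussian per class, plus p(y)."""
    params = {}
    for label in np.unique(y):
        Xc = X[y == label]
        params[int(label)] = {
            "prior": len(Xc) / len(X),
            "mean": Xc.mean(axis=0),
            "cov": np.cov(Xc, rowvar=False),
        }
    return params


def sample(params, label, n, rng):
    """Draw n new points that resemble the training data of one class."""
    p = params[label]
    return rng.multivariate_normal(p["mean"], p["cov"], size=n)


def fit_discriminative(X, y, lr=0.1, steps=500):
    """Discriminative view: logistic regression, which models p(y | x) only."""
    Xb = np.hstack([X, np.ones((len(X), 1))])  # append a bias column
    w = np.zeros(Xb.shape[1])
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-Xb @ w))
        w -= lr * Xb.T @ (p - y) / len(y)  # gradient step on the log loss
    return w


def predict_discriminative(w, X):
    Xb = np.hstack([X, np.ones((len(X), 1))])
    return (Xb @ w > 0).astype(int)


gen = fit_generative(X, y)
w = fit_discriminative(X, y)

synthetic = sample(gen, label=1, n=5, rng=rng)  # new class-1 points
accuracy = (predict_discriminative(w, X) == y).mean()
```

The logistic regression can label points but has no way to produce new ones, while the fitted Gaussians can be sampled as often as needed. A deep generative model replaces the per-class Gaussian with a neural network, but the division of labor is the same.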

The essence of generative models was brilliantly captured in a 2016 blog post by OpenAI in which they stated that:

“Generative models are forced to discover and efficiently internalize the essence of the data in order to generate it.”
