Get Started with Web Scraping Using Proxies

The World Wide Web is a treasure trove of data. The availability of big data, the rapid development of data analytics software, and increasingly inexpensive computing power have heightened the importance of data-driven strategies for competitive differentiation.

According to a Forrester report, data-driven companies that harness insights across their organization and apply them to create competitive advantage are growing at an average of more than 30 percent annually and are on track to earn $1.8 trillion by 2021.

According to McKinsey research, organizations that leverage customer behavioral insights outperform peers by 85 percent in sales growth and more than 25 percent in gross margin.

However, new content is constantly being published on the internet. This creates a lot of clutter when you’re looking for data relevant to your needs. That’s where web scraping comes in: it helps you collect useful data from the web according to your requirements and preferences.

Below are the basics of gathering information online with web scraping and of using IP proxies efficiently.

What Is Web Scraping?

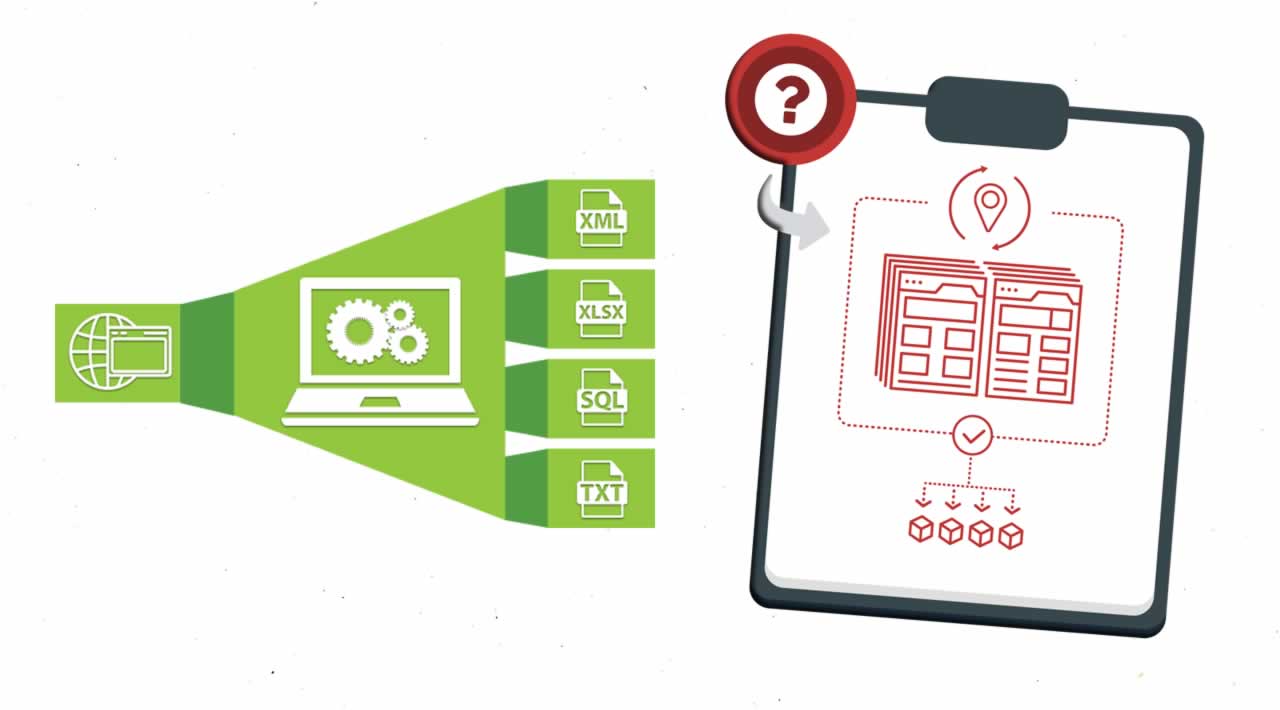

Web scraping, or web harvesting, is a technique for extracting large amounts of relevant data from websites. This information can be stored locally on your computer, for example as spreadsheets. Analyzing the data obtained can give a business valuable insight when planning its marketing strategy.

Web scraping lets businesses innovate faster by giving them near real-time access to data from the web. If you’re an e-commerce company looking for data, a web scraping application will help you download hundreds of pages of useful data from competitor websites without the pain of doing it manually.

Why Is Web Scraping so Beneficial?

Web scraping removes the manual monotony of data extraction and overcomes many of its hurdles. For example, some websites hold data that you cannot simply copy and paste. This is where web scraping comes into play, helping you extract the data programmatically.

You can also convert and save it in the format of your choice. When you extract web data with a web scraping tool, you can save it in a format such as CSV, then retrieve, analyze, and use it the way you want.

Web scraping simplifies data extraction, speeds it up through automation, and provides easy access to the extracted data in a format such as CSV. It has many other uses, such as lead generation, market research, brand monitoring, anti-counterfeiting, and machine learning on large data sets.
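As a minimal sketch of the extract-then-save-as-CSV flow described above, the following uses only the Python standard library. The `LinkExtractor` class, the `links_to_csv` function, and the `SAMPLE_HTML` snippet are hypothetical names and data made up for illustration; a real scraper would fetch the HTML from a live page and would likely use a dedicated parsing library.

```python
import csv
import io
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect (text, href) pairs for every <a href=...> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> tag currently open, if any
        self._text = []     # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def links_to_csv(html):
    """Parse the HTML and return the extracted links as CSV text."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["text", "href"])
    writer.writerows(parser.links)
    return buffer.getvalue()


# Hypothetical sample page, standing in for HTML downloaded from a website.
SAMPLE_HTML = """
<ul>
  <li><a href="/products/1">Blue Widget</a></li>
  <li><a href="/products/2">Red Widget</a></li>
</ul>
"""

if __name__ == "__main__":
    print(links_to_csv(SAMPLE_HTML))
```

Writing to an in-memory buffer keeps the sketch self-contained; passing an open file to `csv.writer` instead would save the same rows to disk.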

However, when scraping the web at any reasonable scale, using proxies is strongly recommended.

To scale your web scraping project, it is important to understand proxy management, since it is at the core of scaling any data extraction project.

What Are Proxies?

An IPv4 address typically looks like this: 203.0.113.15. It consists of four numbers, each between 0 and 255, and is essentially a label attached to your device while you’re using the internet. It helps locate your device.

A proxy is a third-party server that lets you route your requests through it and use its IP address in the process. When you use a proxy, the website you are requesting no longer sees your IP address but the proxy’s, which lets you scrape the web more safely.
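Routing a request through a proxy can be sketched with Python's standard `urllib.request` module, as below. The proxy address in `PROXY_URL` is a placeholder from a documentation-only IP range, and `build_proxy_opener` and `fetch` are hypothetical helper names; substitute the address and credentials your proxy provider gives you.

```python
import urllib.request

# Placeholder proxy address (documentation-only IP range); not a real proxy.
PROXY_URL = "http://203.0.113.10:8080"


def build_proxy_opener(proxy_url):
    """Return an opener that sends HTTP and HTTPS requests via the proxy."""
    handler = urllib.request.ProxyHandler(
        {"http": proxy_url, "https": proxy_url}
    )
    return urllib.request.build_opener(handler)


def fetch(url, proxy_url, timeout=10.0):
    """Download a URL through the proxy and return the raw response body."""
    opener = build_proxy_opener(proxy_url)
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

With a working proxy, `fetch("https://example.com", PROXY_URL)` would return the page body, and the target site would see the proxy's IP address rather than yours.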

Benefits of Using a Proxy

- Using a proxy lets you crawl a website much more reliably, reducing the chances of your spider getting banned or blocked.

- A proxy lets you make your request from a specific geographical region or device (mobile IPs, for example), so you can see the region-specific content that the website displays. This is very useful when scraping product data from online retailers.

- Using a proxy pool lets you make a higher volume of requests to a target website without being banned.

- A proxy saves you from the blanket IP bans that some websites impose. For example, requests from AWS servers are very commonly blocked, because AWS servers have a track record of being used to overload websites with large volumes of requests.

- Using proxies lets you run a large number of concurrent sessions against the same or different websites.
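The proxy pool idea from the list above can be sketched as a simple round-robin rotation that skips proxies already known to be banned. `ProxyPool`, `next_proxy`, and `mark_banned` are hypothetical names for this illustration, not part of any particular library, and commercial proxy managers typically do considerably more (health checks, throttling, session handling).

```python
import itertools


class ProxyPool:
    """Round-robin pool that skips proxies marked as banned."""

    def __init__(self, proxies):
        self._proxies = list(proxies)
        self._banned = set()
        self._cycle = itertools.cycle(self._proxies)

    def mark_banned(self, proxy):
        """Record that the target site has blocked this proxy."""
        self._banned.add(proxy)

    def next_proxy(self):
        """Return the next usable proxy, or raise if none are left."""
        # One full pass over the pool is enough to find a usable proxy.
        for _ in range(len(self._proxies)):
            candidate = next(self._cycle)
            if candidate not in self._banned:
                return candidate
        raise RuntimeError("no usable proxies left in the pool")


if __name__ == "__main__":
    # Placeholder addresses from a documentation-only IP range.
    pool = ProxyPool([
        "http://203.0.113.10:8080",
        "http://203.0.113.11:8080",
        "http://203.0.113.12:8080",
    ])
    for _ in range(4):
        print(pool.next_proxy())
```

Spreading requests across the pool this way keeps the volume seen from any single IP address low, which is the property the list above relies on.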

What Are Proxy Options?

Fundamentally, there are three main types of proxy IPs to choose from. Each category has its own pros and cons and suits specific purposes.

Datacenter IPs

These are the most common type of proxy IP: the IPs of servers housed in data centers. They are extremely cheap to buy, and with the right proxy management solution they can be a solid base for a very robust web crawling solution for your business.

Residential IPs

These are the IPs of private residences, which let you route your requests through a residential network. They are harder to obtain and therefore much more expensive, which makes them hard to justify financially when you can achieve similar results with cheaper data center IPs. By masking its own IP address behind residential proxies, scraping software can reach websites that might otherwise be unavailable to it.

Mobile IPs

These are the IPs of private mobile devices. They are extremely expensive, since mobile device IPs are very difficult to obtain, and they are not recommended unless you need to scrape the results shown to mobile users. They are also legally more complicated, because most of the time the device owner isn’t aware that you are using their GSM network for web scraping.

With proper proxy management, data center IPs give results similar to those of residential or mobile IPs without the legal concerns, and they come at a fraction of the cost.
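One possible proxy management policy, sketched below, is to try the cheap data center IPs first and move on to residential or mobile IPs only when a request is blocked. Everything here is an assumption for illustration: the `pick_working_proxy` name, the tier ordering, the injected `fetch_status` callable (which stands in for a real HTTP request made through the given proxy), and the choice to treat HTTP 403 and 429 responses as signs of a block.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Tiers ordered from cheapest to most expensive, as described in the article.
TIER_ORDER = ("datacenter", "residential", "mobile")

# Assumption: these HTTP status codes mean the target site blocked the proxy.
BLOCKED_STATUSES = frozenset({403, 429})


def pick_working_proxy(
    url: str,
    proxies_by_tier: Dict[str, List[str]],
    fetch_status: Callable[[str, str], int],
) -> Optional[Tuple[str, str]]:
    """Return (tier, proxy) for the first proxy that is not blocked.

    `fetch_status(url, proxy)` must return the HTTP status code obtained
    when requesting `url` through `proxy`. Returns None if every proxy
    in every tier is blocked.
    """
    for tier in TIER_ORDER:
        for proxy in proxies_by_tier.get(tier, []):
            if fetch_status(url, proxy) not in BLOCKED_STATUSES:
                return tier, proxy
    return None
```

Injecting `fetch_status` keeps the policy separate from the networking code, so the escalation logic can be exercised with a stub before it is wired to real requests.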

Artificial Intelligence in Web Scraping

Many research studies suggest that artificial intelligence (AI) can be the answer to the challenges and roadblocks of web scraping. Researchers from the Massachusetts Institute of Technology recently released a paper on an artificial intelligence system that can extract information from sources on the web and learn how to do it on its own. The study also introduced a mechanism for automatically extracting structured data from unstructured sources, thereby linking human analytical ability with AI-powered mechanisms.

This could well be the way to compensate for a shortage of human resources, or it could eventually make scraping an entirely AI-driven process.

In Conclusion

Web scraping has been enabling innovation and delivering groundbreaking results from data-driven business strategies. However, it comes with its own set of challenges, which can limit what is possible and make the desired results harder to achieve.

In just the last decade, humans have created more information than in all of prior human history put together. This calls for more innovations like artificial intelligence to structure this highly unstructured data landscape and open up a wider range of possibilities.

#web-development #web-scraping #Proxies