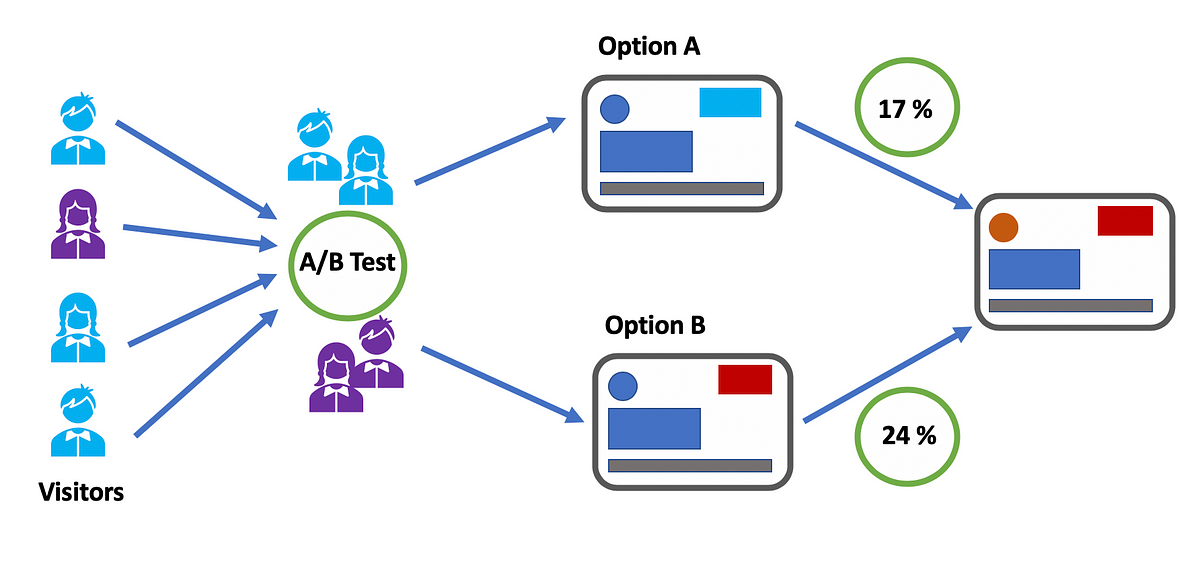

The idea of A/B testing is to present different versions of content to different user groups, observe how each group reacts and behaves, and use the results to shape future product or marketing strategy.

A/B testing is a method of comparing multiple versions of something (a feature, page, button, headline, page structure, form, landing page, navigation, pricing, and so on) by showing the different versions to customers or prospective customers and assessing the quality of their interaction with some metric (click-through rate, purchases, follow-through on a call to action, etc.).

This is becoming increasingly important in a data-driven world where business decisions need to be backed by facts and numbers.

How to conduct a standard A/B test

- Formulate your Hypothesis

- Deciding on Splitting and Evaluation Metrics

- Create your Control group and Test group

- Length of the A/B Test

- Conduct the Test

- Draw Conclusions

1. Formulate your hypothesis

Before conducting an A/B test, you want to state your null hypothesis and alternative hypothesis:

The _null hypothesis_ is one that states that there is no difference between the control and variant groups. The _alternative hypothesis_ is one that states that there is a difference between the control and variant groups.

Imagine a software company that is looking for ways to increase the number of people who pay for its software. As the software is currently set up, users can download and use it free of charge for a 7-day trial. The company wants to change the layout of the homepage, using a red logo instead of a blue one, to emphasise that a 7-day trial of the software is available.

Here is an example of a hypothesis test:

Default action: Approve blue logo.

Alternative action: Approve red logo.

Null hypothesis: Red logo does not cause at least 10% more license purchases than blue logo.

Alternative hypothesis: Red logo does cause at least 10% more license purchases than blue logo.

It’s important to note that all other variables need to be held constant when performing an A/B test.
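To make the red-versus-blue hypothesis concrete, here is a minimal sketch of how it could be evaluated once purchase counts are in. It reads the null hypothesis as the complement of the alternative (purchase rate with the red logo is at most 1.10 times the rate with the blue logo) and uses a one-sided Wald z-test, which is one reasonable choice among several rather than a method prescribed by this article. The function name, the `min_lift` parameter and all counts are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def one_sided_lift_test(conv_blue, n_blue, conv_red, n_red, min_lift=0.10):
    """Wald z-test of H0: p_red <= (1 + min_lift) * p_blue
    against H1: p_red > (1 + min_lift) * p_blue."""
    p_blue = conv_blue / n_blue
    p_red = conv_red / n_red
    k = 1.0 + min_lift
    # Quantity that is <= 0 (in expectation) when the null hypothesis holds.
    diff = p_red - k * p_blue
    # Standard error of diff, treating the two groups as independent samples.
    se = sqrt(p_red * (1 - p_red) / n_red + k**2 * p_blue * (1 - p_blue) / n_blue)
    z = diff / se
    p_value = 1.0 - NormalDist().cdf(z)  # one-sided, upper tail
    return z, p_value


# Hypothetical counts, invented purely for illustration.
z, p_value = one_sided_lift_test(conv_blue=200, n_blue=10_000,
                                 conv_red=260, n_red=10_000)
print(f"z = {z:.2f}, one-sided p-value = {p_value:.4f}")
```

A small p-value would support switching to the alternative action (approve the red logo); otherwise the default action (keep the blue logo) stands. The normal approximation assumes reasonably large groups.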

2. Deciding on Splitting and Evaluation Metrics

We should consider two things: where and how we should split users into experiment groups when entering the website, and what metrics we will use to track the success or failure of the experimental manipulation. The choice of unit of diversion (the point at which we divide observations into groups) may affect what evaluation metrics we can use.

The control, or ‘A’ group, will see the old homepage, while the experimental, or ‘B’ group, will see the new homepage that emphasises the 7-day trial.

There are three diversion (splitting) techniques to consider:

a) Event-based diversion

b) Cookie-based diversion

c) Account-based diversion

An event-based diversion (like a pageview) can provide many observations to draw conclusions from, but if the condition changes on each pageview, then a visitor might get a different experience on each homepage visit. Event-based diversion is much better when the changes aren’t as easily visible to users, to avoid disruption of experience.

In addition, event-based diversion would let us know how many times the download page was accessed from each condition, but can’t go any further in tracking how many actual downloads were generated from each condition.

Account-based diversion can be stable, but is not suitable in this case. Since visitors only register after getting to the download page, this is too late to introduce the new homepage to people who should be assigned to the experimental condition.

So this leaves cookie-based diversion, which feels like the right choice. Cookies also allow us to track each visitor across every page they hit. The downside of cookie-based diversion is that counts can become inconsistent if users enter the site via an incognito window or a different browser, or if their cookies expire or get deleted before they make a download. As a simplification, however, we’ll assume that this kind of assignment dilution will be small, and ignore its potential effects.
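As a rough sketch of what cookie-based diversion could look like in practice, the snippet below hashes a cookie identifier to decide which homepage a visitor sees. The function name, the experiment label and the 50/50 split are assumptions for illustration, not details from this example company's setup.

```python
import hashlib


def assign_group(cookie_id: str, experiment: str = "homepage-logo",
                 treatment_share: float = 0.5) -> str:
    """Deterministically map a cookie ID to 'control' or 'experiment'."""
    key = f"{experiment}:{cookie_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Interpret the first 8 hex digits as an integer and scale it to [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "experiment" if bucket < treatment_share else "control"


# The same cookie always maps to the same group, so repeat visits
# from the same browser see a consistent homepage.
visitor = "cookie-3f9a"  # hypothetical cookie value
print(assign_group(visitor), assign_group(visitor))
```

Because the assignment depends only on the cookie value, no lookup table is needed, and a visitor who clears cookies or switches browsers simply gets a new identifier and may land in the other group. That is the assignment dilution mentioned above.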
