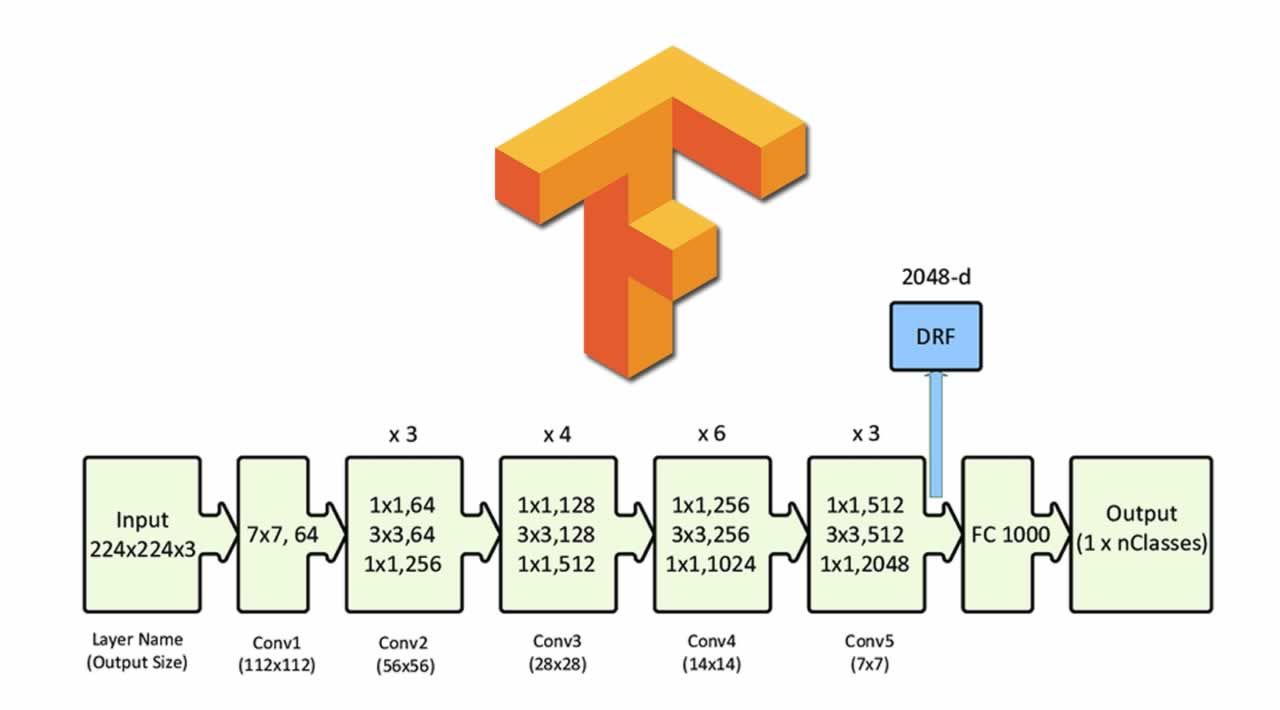

Intuition suggests that deeper neural networks should capture more complex features and can therefore represent more complex functions than shallower ones. The natural question is: is learning a better network as simple as stacking more and more layers? What are the problems and benefits of this approach? These questions, along with several other important concepts, were discussed in the paper Deep Residual Learning for Image Recognition by K. He et al. in 2015. The architecture is known as ResNet, and the paper introduced many must-know concepts related to Deep Neural Networks (DNNs). This post addresses all of them, including an implementation of a 50-layer ResNet in TensorFlow 2.0. What you can expect to learn from this post:

- Problems with Very Deep Neural Networks.

- Mathematical Intuition Behind ResNet.

- Residual Block and Skip Connection.

- Structuring ResNet and Importance of 1×1 Convolution.

- Implementing ResNet with TensorFlow.

Let’s begin!
