Realtime Face Recognition in the Browser

Not long ago, Vincent Mühler published several posts about his amazing face recognition library face-api.js, which is based on TensorFlow.js. For me, having previously worked with OpenCV on the server side, the idea of doing face recognition in the browser felt quite compelling to try out. As a result of that curiosity, Face® was born.

Face® is not an AI project, but merely an engineering one, trying to utilize face-api.js for performing Face Recognition in the browser and engineer a software solution that will take care of application aspects like:

- User Registration

- Models loading

- Image upload, resizing and deleting

- Using camera (taking and resizing photos, face recognition)

- Training (Face landmarks/descriptors extraction) and storing models

- DevOps — Deploying using Docker

using the following technology stack:

- VueJS / NuxtJS / VuetifyJS — Frontend (simply to look nice)

- NodeJS / ExpressJS — Backend (Static content & API handlers)

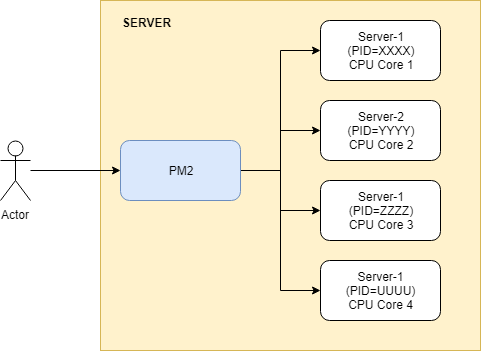

- PM2 — Clustering (multi process support — one per CPU core)

- Docker /Alpine Edge — Deployment (lean containerized app)

If you want to know more about the face-api.js library, I recommend starting with Vincent’s post.

Here we will focus on building an app that utilizes that library.

So let’s dive into it.

Models

We will be using the following models:

- Tiny Face Detector — 190 KB — for face detection (rectangles of faces)

- 68 Point Face Landmark Detection Model — 80 KB — for face landmark extraction (eyes, eyebrows, nose, lips, etc.)

- Face Recognition Model — 6.2 MB

The total size of all models is less than 6.5 MB.

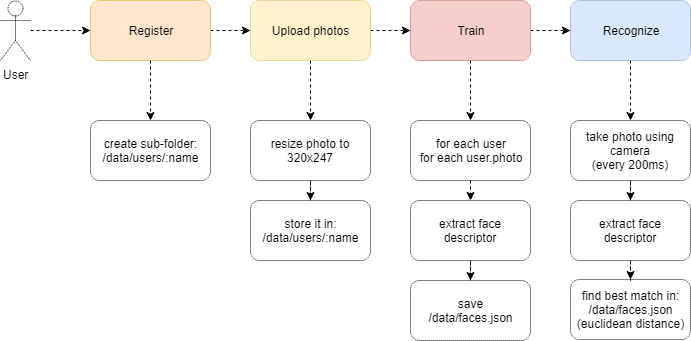

App Workflow

For the sake of simplicity, this application does not use a database for storage per se. Instead, the registration process for each user relies on:

- creating a new sub-folder in /data/users/ (e.g. for the user Goran, the app creates /data/users/Goran), which stores all the photos needed for training the face recognition model.

- storing the trained face recognition model for all users and all their photos in a static file, /data/faces.json (see format below).

First, the user registers by typing in a name and then provides at least 3 photos of themselves. The photos can come either from a file upload or from snapshots taken with the browser camera (WebRTC getUserMedia API). Uploaded files are resized to 320x247 on the server using the performant image processing library sharp, while camera snapshots are captured at that size directly in the browser.

After the registration, the user can start the training process, which takes all users in the catalog (and their uploaded photos) and extracts:

- rectangles with their faces (optional)

- 68 face landmarks (optional)

- a face descriptor, i.e. a vector of 128 values (the only part required for training the model)

and stores the face recognition model in the static file /data/faces.json in the following format:

[

{

"user": "user1",

"descriptors": [

{

"path": "/data/users/user1/user1_timestamp1.jpg",

"descriptor": { }

},

{

"path": "/data/users/user1/user1_timestamp2.jpg",

"descriptor": { }

},

{

"path": "/data/users/user1/user1_timestamp3.jpg",

"descriptor": { }

}]

},

{

"user": "user2",

"descriptors": [

{

"path": "/data/users/user2/user2_timestamp1.jpg",

"descriptor": { }

},

{

"path": "/data/users/user2/user2_timestamp2.jpg",

"descriptor": { }

},

{

"path": "/data/users/user2/user2_timestamp3.jpg",

"descriptor": { }

}]

}

]

faces.json
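The empty `"descriptor": { }` placeholders above stand for the serialized descriptor values. Assuming the descriptor is a Float32Array (which is what face-api.js returns), JSON.stringify writes it as an object keyed by index rather than as an array, so it has to be rebuilt after loading. The following is a minimal sketch of that round trip; the helper names `descriptorToTypedArray` and `parseFaces` are hypothetical and not part of the app's source.

```javascript
// Hypothetical helpers, not part of the app's source.
// JSON.stringify serializes a typed array as an object keyed by index,
// e.g. {"0":0.5,"1":-0.25,...}, so it has to be rebuilt after parsing.
function descriptorToTypedArray(serialized) {
  // Integer-like keys are enumerated in ascending numeric order.
  return Float32Array.from(Object.values(serialized))
}

function parseFaces(json) {
  return JSON.parse(json).map(face => ({
    user: face.user,
    descriptors: face.descriptors.map(d => ({
      path: d.path,
      descriptor: descriptorToTypedArray(d.descriptor)
    }))
  }))
}

// Round trip with a shortened 4-value descriptor (real ones have 128 values).
const saved = JSON.stringify([
  {
    user: 'user1',
    descriptors: [
      {
        path: '/data/users/user1/user1_timestamp1.jpg',
        descriptor: new Float32Array([0.5, -0.25, 0, 1])
      }
    ]
  }
])
const faces = parseFaces(saved)
console.log(faces[0].descriptors[0].descriptor)
```

Rebuilding the typed array at load time keeps the file format unchanged while giving the matcher plain numeric vectors to work with.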

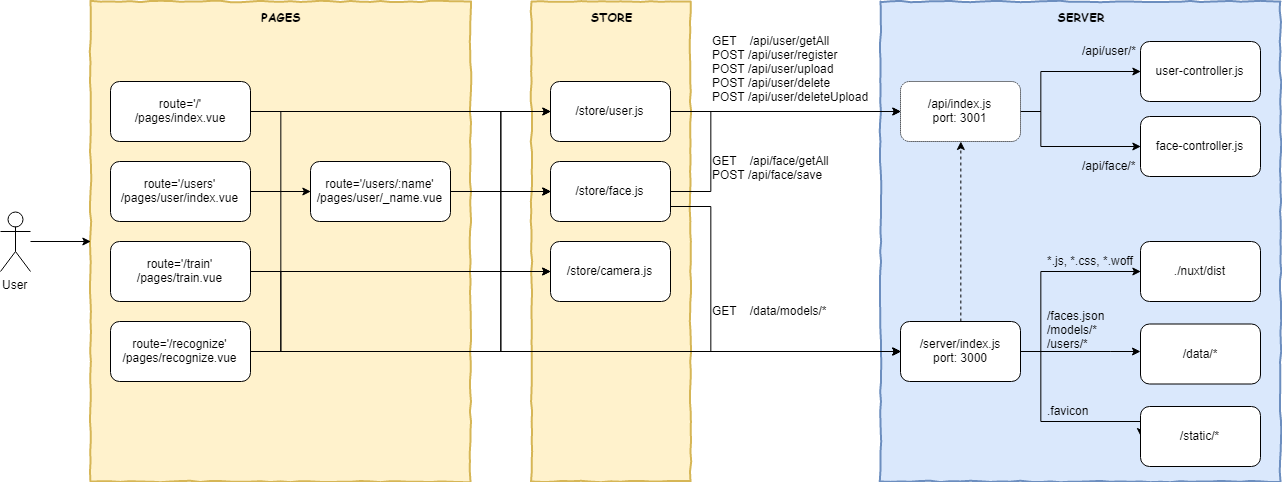

Architecture

The application uses Nuxt.js with SSR (server-side rendering) and follows its default directory structure convention.

In development mode, the application splits the server into two distinct processes:

- /server/index.js — for static content (frontend) — listening on port 3000

- /api/index.js — for API calls (backend) — listening on port 3001

npm run dev

npm run api

By separating the frontend from the backend during development, we reduce the number of files Nuxt has to load, and with it the size and duration of the initial startup. We also benefit from faster consecutive starts and stops of the API while debugging.

In production mode, on the other hand, the application merges the server side into a single process, listening on port 3000.

npm run build

npm run start

Loading the models

When the user opens our app, we load all TensorFlow.js models. For that purpose we use the mounted() handler of /layouts/default.vue:

<template>

<v-app dark>

...

</v-app>

</template>

<script>

export default {

data() {

return {

items: [

{ icon: "home", title: "Welcome", to: "/" },

{ icon: "people", title: "Users", to: "/users" },

{ icon: "wallpaper", title: "Train", to: "/train" },

{ icon: "camera", title: "Recognize", to: "/recognize" }

],

title: "face® - Realtime Face Recognition"

};

},

async mounted() {

let self = this

await self.$store.dispatch('face/load')

}

};

</script>

facer-layout-default.vue

The load() action is handled by /store/face.js:

import * as faceapi from 'face-api.js'

export const state = () => ({

loading: false,

loaded: false

})

export const mutations = {

loading(state) {

state.loading = true

},

load(state) {

state.loading = false

state.loaded = true

}

}

export const actions = {

async load({ commit, state }) {

if (!state.loading && !state.loaded) {

commit('loading')

return Promise.all([

faceapi.loadFaceRecognitionModel('/data/models'),

faceapi.loadFaceLandmarkTinyModel('/data/models'),

faceapi.loadTinyFaceDetectorModel('/data/models')

])

.then(() => {

commit('load')

})

}

}

}

facer-store-face-load.js
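One detail of the guard above: a second caller that arrives while loading is still in progress returns immediately, without waiting for the models. A possible alternative, sketched below under the assumption that callers want to await the same load, is to cache the pending promise. The `createModelLoader` helper and the fake loaders are hypothetical stand-ins for the three faceapi load calls.

```javascript
// Hypothetical helper: `loaders` stands in for the three faceapi.load*Model calls.
function createModelLoader(loaders) {
  let pending = null // cached promise shared by all callers

  return function load() {
    if (!pending) {
      pending = Promise.all(loaders.map(fn => fn())).catch(err => {
        pending = null // allow a retry after a failed download
        throw err
      })
    }
    return pending
  }
}

// Usage with fake loaders that count how often they run.
let calls = 0
const fakeLoader = () => {
  calls += 1
  return Promise.resolve()
}
const loadModels = createModelLoader([fakeLoader, fakeLoader, fakeLoader])

const first = loadModels()
const second = loadModels() // reuses the promise created by the first call
```

Both callers receive the same promise, so each loader runs once no matter how many components request the models.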

User registration

We register the user via a simple form in the /pages/users/index.vue page, handled by the register() method:

<template>

<v-form ref="form" v-model="valid" lazy-validation>

<v-text-field

v-model="name"

:rules="nameRules"

label="Your full name"

required />

<v-spacer/>

<v-btn

:disabled="!valid"

color="primary"

@click="register()">Register new!</v-btn>

</v-form>

</template>

<script>

export default {

data(){

return {

selectedUser: null,

valid: true,

name: null,

nameRules: [

v => !!v || 'Full name is required',

v => (v && v.length > 2) || 'Name must be more than 2 characters'

],

}

},

methods: {

async register() {

const self = this

if (this.$refs.form.validate()) {

return this.$store.dispatch('user/register', this.name)

.then(() =>{

self.$router.push({ path: `/users/${self.name}`})

})

}

}

}

}

</script>

facer-register.vue

The register() action is handled by /store/user.js:

export const state = () => ({

list: []

})

export const mutations = {

addUser(state, name) {

state.list.push({

name,

photos: []

})

}

}

export const actions = {

async register({ commit }, name) {

await this.$axios.$post('/api/user/register', { name })

commit('addUser', name)

}

}

facer-store-user-register.js

The API call is handled by /api/controller/user-controller.js:

const express = require('express')

const { join } = require('path')

const { existsSync, mkdirSync } = require('fs')

const userRoutes = express.Router()

//folders

const rootFolder = join(__dirname, '../../')

const dataFolder = join(rootFolder, 'data')

const usersFolder = join(dataFolder, 'users')

userRoutes.post("/register", (req, res) => {

res.header("Content-Type", "application/json")

if (req.body.name) {

const newFolder = join(usersFolder, req.body.name)

if (!existsSync(newFolder)) {

mkdirSync(newFolder)

res.send('ok')

} else {

// status() only sets the code; sendStatus() would send the response immediately

res.status(500)

.send({

error: 'User already exists'

})

}

} else {

res.status(500)

.send({

error: 'User name is mandatory.'

})

}

})

facer-controller-user-register.js

which creates a new folder: /data/users/<name>.
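Because the folder name comes straight from the request body, a name such as `../../etc` would resolve outside of the users folder. The sketch below shows one way to guard against that using only Node's path module. The `resolveUserFolder` helper and the `/data/users` base path are assumptions made for illustration and are not part of the original controller.

```javascript
const path = require('path')

// Hypothetical base folder, mirroring usersFolder in the controller.
const usersFolder = path.resolve('/data/users')

// Returns the folder for `name`, or null when the name is empty, nested,
// or would resolve outside of usersFolder (e.g. "../../etc").
function resolveUserFolder(name) {
  if (typeof name !== 'string' || name.trim() === '') return null
  const target = path.resolve(usersFolder, name)
  const relative = path.relative(usersFolder, target)
  const escapes = relative === '' || relative === '..' || path.isAbsolute(relative)
  const nested = relative.includes(path.sep)
  return escapes || nested ? null : target
}

console.log(resolveUserFolder('Goran'))
console.log(resolveUserFolder('../../etc'))
```

A route handler could reject the request whenever the helper returns null, before calling mkdirSync.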

Photos upload

The user has two options (tabs) for uploading photos:

- tab-1: Either by a file upload HTML input, handled by filesChange() method (resized to 320x247 on the server-side)

- tab-2: Or by using the browser camera (WebRTC getUserMedia API) and taking photo snapshots via the HTML5 canvas, handled by takePhoto() method (sized to 320x247 on the client-side)

After registration, we navigate the user to the page /pages/users/_name.vue, where he/she can continue uploading photos.

<template>

<v-layout row wrap align-center>

<v-flex xs12>

<v-tabs

v-model="tab"

centered

color="green-lighten2"

dark

icons-and-text

>

<v-tab-item

value="tab-1"

>

<v-card flat>

<form method="POST" class="form-documents" enctype="multipart/form-data">

Upload photos

<input id="fileUpload" :multiple="multiple" type="file" name="fileUpload" @change="filesChange($event.target.name, $event.target.files)" >

</form>

</v-card>

</v-tab-item>

<v-tab-item

value="tab-2"

>

<v-card flat>

<v-btn color="secondary" @click="takePhoto">Take photo</v-btn>

<v-layout row wrap>

<v-flex xs12 md6>

<video

id="live-video"

width="320"

height="247"

autoplay/>

</v-flex>

<v-flex xs12 md6>

<canvas

id="live-canvas"

width="320"

height="247"/>

</v-flex>

</v-layout>

</v-card>

</v-tab-item>

</v-tabs>

</v-flex>

</v-layout>

</template>

<script>

export default {

data () {

return {

tab: 'tab-1',

multiple: true

};

},

computed: {

user() {

const userByName = this.$store.getters['user/userByName']

return userByName(this.$route.params.name)

}

},

methods: {

filesChange(fieldName, fileList) {

const self = this

const formData = new FormData()

formData.append('user', self.user.name)

// Append every selected file under the same field name

Array.from(fileList).forEach(file => {

formData.append(fieldName, file, file.name)

});

return self.$store.dispatch('user/upload', formData)

.then(result => {

if (document) {

document.getElementById('fileUpload').value = ""

}

})

},

async takePhoto() {

const video = document.getElementById("live-video")

const canvas = document.getElementById("live-canvas")

const canvasCtx = canvas.getContext("2d")

canvasCtx.drawImage(video, 0, 0, 320, 247)

const content = canvas.toDataURL("image/jpeg")

await this.$store.dispatch('user/uploadBase64', {

user: this.user.name,

content

})

}

}

}

</script>

facer-upload-photos.vue

The upload() and uploadBase64() actions are handled by /store/user.js:

export const state = () => ({

list: []

})

export const mutations = {

addPhotos(state, data) {

const found = state.list.find(item => {

return item.name === data.user

})

if (found) {

data.photos.forEach(photo => {

found.photos.push(photo)

})

}

}

}

export const actions = {

async upload({ commit }, upload) {

const data = await this.$axios.$post('/api/user/upload', upload)

commit('addPhotos', {

user: upload.get('user'),

photos: data

})

},

async uploadBase64({ commit }, upload) {

const data = await this.$axios.$post('/api/user/uploadBase64', { upload })

commit('addPhotos', {

user: upload.user,

photos: data

})

}

}

facer-store-user-upload.js

The API calls are handled by /api/controller/user-controller.js:

const express = require('express')

const { join } = require('path')

const { readFileSync, unlinkSync, writeFile } = require('fs')

const sharp = require('sharp')

sharp.cache(false)

const multer = require("multer")

const userRoutes = express.Router()

//folders

const rootFolder = join(__dirname, '../../')

const dataFolder = join(rootFolder, 'data')

const usersFolder = join(dataFolder, 'users')

const storage = multer.diskStorage({

destination: function(req, file, callback) {

callback(null, usersFolder)

},

filename: function(req, file, callback) {

let fileComponents = file.originalname.split(".")

let fileExtension = fileComponents[fileComponents.length - 1]

let filename = `${file.originalname}_${Date.now()}.${fileExtension}`

callback(null, filename)

}

})

userRoutes.post("/upload", async(req, res) => {

res.header("Content-Type", "application/json")

const upload = multer({

storage: storage

}).array('fileUpload');

await uploadFile(upload, req, res)

.then(result => res.send(result))

.catch(e => {

console.error(e)

res.status(500).send({ error: e.message })

})

})

userRoutes.post("/uploadBase64", async(req, res) => {

res.header("Content-Type", "application/json")

await uploadBase64(req.body.upload)

.then(result => res.send(result))

.catch(e => {

console.error(e)

res.status(500).send({ error: e.message })

})

})

async function uploadFile(upload, req, res) {

return new Promise(async(resolve, reject) => {

await upload(req, res, async(err) => {

if (err) {

reject(new Error('Error uploading file'))

return

}

const result = [];

await Promise.all(req.files.map(async file => {

try {

const oldPath = join(usersFolder, file.filename)

const newPath = join(usersFolder, req.body.user, file.filename)

const buffer = readFileSync(oldPath)

await sharp(buffer)

.resize(320, 247)

.toFile(newPath)

.then(() => {

result.push(`/data/users/${req.body.user}/${file.filename}`)

try {

unlinkSync(oldPath)

} catch (ex) {

console.log(ex)

}

})

} catch (e) {

reject(e)

return

}

}))

resolve(result)

})

})

}

async function uploadBase64(upload) {

const fileName = `${upload.user}_${Date.now()}.jpg`

const imgPath = join(usersFolder, upload.user, fileName)

const content = upload.content.split(',')[1]

return new Promise(async(resolve, reject) => {

writeFile(imgPath, content, 'base64', (err) => {

if (err) {

reject(err)

return

}

resolve([`/data/users/${upload.user}/${fileName}`])

})

})

}

facer-controller-user-upload.js

To extract the file content from the multipart/form-data request we use Multer. Also, since users can upload images of different sizes and shapes, we resize each uploaded image using sharp. Finally, the photos are stored in the user’s folder: /data/users/<name>/.
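The camera path works differently from the file upload: canvas.toDataURL() produces a data URL, and the uploadBase64() function keeps only the part after the comma before writing it to disk. The sketch below illustrates that step in isolation with a slightly stricter check. The `decodeDataUrl` helper is hypothetical, and the payload is a short stand-in rather than a real JPEG.

```javascript
// Hypothetical helper: splits a data URL such as
// "data:image/jpeg;base64,/9j/4AAQ..." into its MIME type and decoded bytes.
// Returns null for anything that is not a base64 data URL.
function decodeDataUrl(dataUrl) {
  const match = /^data:([^;,]+);base64,(.+)$/.exec(dataUrl)
  if (!match) return null
  return { mimeType: match[1], bytes: Buffer.from(match[2], 'base64') }
}

// Usage with a tiny stand-in payload instead of a real JPEG.
const payload = Buffer.from('fake-jpeg-bytes').toString('base64')
const decoded = decodeDataUrl(`data:image/jpeg;base64,${payload}`)
console.log(decoded.mimeType, decoded.bytes.length)
```

Checking the prefix before decoding lets the server reject content that is not an image data URL instead of writing arbitrary text to a .jpg file.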

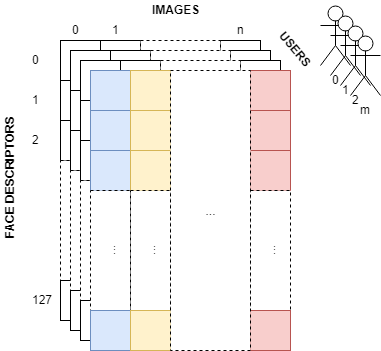

Training — Face Descriptor Extraction

Training is the process of extracting a face descriptor, a vector of 128 values, from each image of a given user.

It is recommended that each user has at least 3 photos uploaded for training. Hence, after training, the face recognition model is composed of n x m descriptor vectors, where m is the number of users and n is the number of photos per user.
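As a small illustration of the counting above, the following sketch reports how many descriptors a training run should produce and which users are still below the recommended number of photos. It assumes exactly one detected face per photo and the same `{ name, photos }` shape as the user store; the `trainingSummary` helper is hypothetical.

```javascript
const MIN_PHOTOS = 3 // the recommendation from the text

// users: [{ name, photos: [path, ...] }], the same shape as the user store.
function trainingSummary(users, minPhotos = MIN_PHOTOS) {
  const notReady = users
    .filter(user => user.photos.length < minPhotos)
    .map(user => user.name)
  // Assumes exactly one face (one descriptor) per photo.
  const expectedDescriptors = users.reduce((sum, user) => sum + user.photos.length, 0)
  return { expectedDescriptors, notReady }
}

const summary = trainingSummary([
  { name: 'user1', photos: ['a.jpg', 'b.jpg', 'c.jpg'] },
  { name: 'user2', photos: ['d.jpg'] }
])
console.log(summary)
```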

We can perform training in one of the following manners:

- One by one — training after each photo upload of a selected user

- Per-User — training for all user’s photos of a selected user

- Batch — training for all users and all their photos at once

In this app, we implement a batch training process that, as its end result, stores the face recognition model inside the /data/faces.json file.

The batch training process is started from the /pages/train.vue:

<template>

<v-layout row

wrap>

<v-flex xs6>

<v-btn color="primary" @click="train()">

Train

</v-btn>

</v-flex>

<v-flex v-for="user in users" :key="user.name" xs12>

<v-card>

<v-card-title>

<strong class="headline">{{ user.name }}</strong>

<v-btn :to="{ path: `/users/${user.name}`}" fab dark small color="primary">

<v-icon dark>add_a_photo</v-icon>

</v-btn>

</v-card-title>

<v-layout row

wrap>

<v-flex v-for="(photo, index) in user.photos"

:key="photo"

xs12 md6 lg4>

<v-card flat tile class="d-flex">

<img :id="user.name + index" :src="photo">

</v-card>

</v-flex>

</v-layout>

</v-card>

</v-flex>

</v-layout>

</template>

<script>

export default {

computed: {

users() {

return this.$store.state.user.list;

},

},

async fetch({ store }) {

const self = this

await store.dispatch('user/getAll')

},

methods: {

async train() {

const self = this

const faces = []

await Promise.all(self.users.map(async user => {

let descriptors = [];

await Promise.all(user.photos.map(async (photo, index) => {

const photoId = `${user.name}${index}`;

const detections = await self.$store.dispatch('face/getFaceDetections', photoId)

detections.forEach(d => {

descriptors.push({

path: photo,

descriptor: d.descriptor

})

})

}))

faces.push({

user: user.name,

descriptors: descriptors

})

}))

await self.$store.dispatch('face/save', faces)

.catch(e => {

console.error(e)

})

}

}

}

</script>

facer-train.vue

The page iterates through the list of users and, for each of their photos, extracts the face descriptor (a vector of 128 values). After that, it saves the JSON face recognition model via the save() action of /store/face.js:

import * as faceapi from 'face-api.js'

export const state = () => ({

faces: []

})

export const mutations = {

setFaces(state, faces) {

state.faces = faces

}

}

export const actions = {

async save({ commit }, faces) {

// The API only answers 'ok', so keep the faces we just sent

await this.$axios.$post('/api/face/save', { faces })

commit('setFaces', faces)

}

}

facer-store-face-save.js

The API call is handled by /api/controllers/face-controller.js:

const express = require('express')

const { join } = require('path')

const { writeFileSync } = require('fs')

const modelRoutes = express.Router()

//folders & model file

const rootFolder = join(__dirname, '../../')

const dataFolder = join(rootFolder, 'data')

const facesFileName = 'faces.json'

modelRoutes.post("/save", async(req, res) => {

res.header("Content-Type", "application/json")

const content = JSON.stringify(req.body.faces)

writeFileSync(join(dataFolder, facesFileName), content)

res.send('ok')

})

facer-controller-face-upload.js

Recognition

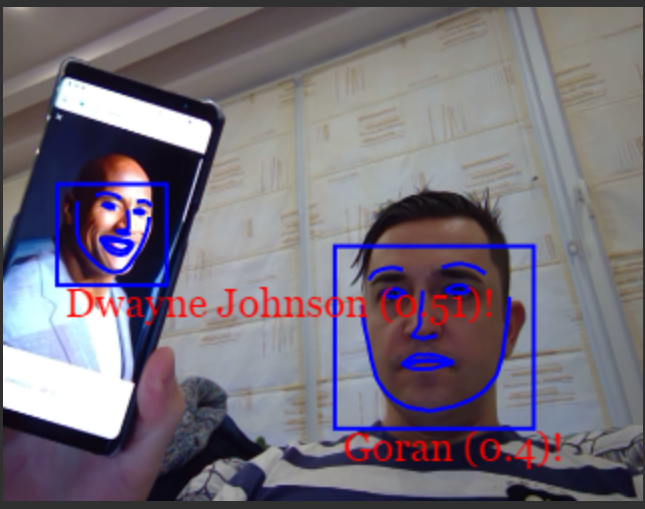

The face recognition process reads the face recognition model (faces.json) and creates a face matcher, which calculates the Euclidean distance between the face descriptor vectors of the stored face recognition model and any new face to be recognized.
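To make the distance idea concrete, here is a simplified stand-in for what a face matcher does. It is not the face-api.js implementation: it picks the single nearest stored descriptor, and the 0.6 cut-off for "unknown" is an assumed value. The function names and the toy 3-value descriptors are for illustration only.

```javascript
// Illustrative only: a simplified stand-in for a face matcher,
// not face-api.js's own implementation.
function euclideanDistance(a, b) {
  if (a.length !== b.length) throw new Error('Descriptor lengths differ')
  let sum = 0
  for (let i = 0; i < a.length; i++) {
    const diff = a[i] - b[i]
    sum += diff * diff
  }
  return Math.sqrt(sum)
}

// faces: [{ user, descriptors: [vector, ...] }]
// threshold: assumed cut-off above which a face counts as "unknown".
function findBestMatch(faces, query, threshold = 0.6) {
  let best = { user: 'unknown', distance: Infinity }
  for (const face of faces) {
    for (const descriptor of face.descriptors) {
      const distance = euclideanDistance(descriptor, query)
      if (distance < best.distance) best = { user: face.user, distance }
    }
  }
  return best.distance <= threshold ? best : { user: 'unknown', distance: best.distance }
}

// Toy 3-value descriptors (real ones have 128 values).
const known = [
  { user: 'user1', descriptors: [[0, 0, 0], [0.1, 0, 0]] },
  { user: 'user2', descriptors: [[1, 1, 1]] }
]
const match = findBestMatch(known, [0.1, 0.1, 0])
const stranger = findBestMatch(known, [5, 5, 5])
console.log(match.user, stranger.user)
```

A smaller distance means more similar faces, and the threshold decides when the nearest stored face is still too far away to count as a match.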

In the UI, via the camera (WebRTC getUserMedia API), we sample the video at up to 60 fps (frames per second), drawing each snapshot into an HTML canvas element. Then, for each snapshot, we extract its face descriptors and, using the face matcher, output the best match and draw it back onto the canvas.
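Timer functions take a delay in milliseconds, so a frame rate has to be converted with 1000 / fps. Detection is also asynchronous and can take longer than one frame, so the sketch below waits for each run to finish before scheduling the next one instead of relying on a fixed interval. Both `frameIntervalMs` and `startSampling` are hypothetical helpers, not part of the app.

```javascript
// Converts a target frame rate into the delay that setTimeout/setInterval expect.
function frameIntervalMs(fps) {
  if (!(fps > 0)) throw new RangeError('fps must be a positive number')
  return 1000 / fps
}

// Runs `processFrame` repeatedly, but never starts a new run while the
// previous one is still pending, so slow detections cannot pile up.
function startSampling(processFrame, fps) {
  let stopped = false
  const tick = async () => {
    if (stopped) return
    const startedAt = Date.now()
    try {
      await processFrame()
    } catch (err) {
      console.error(err)
    }
    const wait = Math.max(0, frameIntervalMs(fps) - (Date.now() - startedAt))
    if (!stopped) setTimeout(tick, wait)
  }
  tick()
  return () => { stopped = true } // call the returned function to stop sampling
}

// Usage with a stand-in for the draw-and-detect step.
let frames = 0
const stop = startSampling(async () => { frames += 1 }, 60)
stop() // stop right away; the first frame has already started
```

With this shape, 60 fps becomes an upper bound: the effective rate drops automatically when detection takes longer than about 16.7 ms per frame.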

The face recognition process is started via the /pages/recognize.vue:

<template>

<v-layout row

wrap>

<v-flex>

<h1>Recognize</h1>

</v-flex>

<v-flex xs12 md6>

<video

id="live-video"

width="320"

height="247"

autoplay/>

</v-flex>

<v-flex xs12 md6>

<canvas

id="live-canvas"

width="320"

height="247"/>

</v-flex>

</v-layout>

</template>

<script>

export default {

data(){

return {

interval: null,

fps: 60,

recognition: ''

}

},

async beforeMount() {

let self = this;

await self.$store.dispatch('face/getAll')

.then(() => self.$store.dispatch('face/getFaceMatcher'))

},

async mounted () {

await this.recognize()

},

methods: {

async recognize(){

let self = this

await self.$store.dispatch('camera/startCamera')

.then(stream => {

const videoDiv = document.getElementById("live-video")

const canvasDiv = document.getElementById("live-canvas")

const canvasCtx = canvasDiv.getContext("2d")

videoDiv.srcObject = stream

// with FPS sampling

self.interval = setInterval(async () => {

canvasCtx.drawImage(videoDiv, 0, 0, 320, 247)

const detections = await self.$store.dispatch('face/getFaceDetections', canvasDiv)

if (detections.length) {

detections.forEach(async item => {

const shifted = item.forSize(canvasDiv.width, canvasDiv.height)

self.$store.dispatch('face/drawLandmarks', { canvasDiv, landmarks: shifted._unshiftedLandmarks } )

const bestMatch = await self.$store.dispatch('face/recognize', shifted.descriptor)

self.recognition = `${bestMatch.toString()}!`

self.$store.dispatch('face/drawDetection', { canvasDiv, detection: shifted._detection, recognition: self.recognition } )

})

} else {

self.recognition = ''

}

}, 1000 / self.fps) // delay in milliseconds between frames

})

}

}

}

</script>

facer-recognize.vue

where the getFaceDetections(), recognize(), drawLandmarks() and drawDetection() actions are handled by /store/face.js:

import * as faceapi from 'face-api.js'

export const state = () => ({

faces: [],

faceMatcher: null,

recognizeOptions: {

useTiny: true

}

})

export const mutations = {

setFaceMatcher(state, matcher) {

state.faceMatcher = matcher

}

}

export const actions = {

async getFaceDetections({ commit, state }, canvasDiv) {

const detections = await faceapi

.detectAllFaces(canvasDiv, new faceapi.TinyFaceDetectorOptions({ scoreThreshold: 0.5 }))

.withFaceLandmarks(state.recognizeOptions.useTiny)

.withFaceDescriptors()

return detections

},

async recognize({ commit, state }, faceDescriptor) {

const bestMatch = await state.faceMatcher.findBestMatch(faceDescriptor)

return bestMatch

},

drawLandmarks({ commit, state }, { canvasDiv, landmarks }) {

faceapi.drawLandmarks(canvasDiv, landmarks, { drawLines: true })

},

drawDetection({ commit, state }, { canvasDiv, detection, recognition }) {

const boxesWithText = [

new faceapi.BoxWithText(new faceapi.Rect(detection.box.x, detection.box.y, detection.box.width, detection.box.height), recognition)

]

faceapi.drawDetection(canvasDiv, boxesWithText)

}

}

facer-store-face-recognize.js

Production

As Node.js by default runs in a single process, this approach is not optimal in production, especially on hardware with multiple CPUs and/or CPU cores.

Hence we use PM2 to start as many processes as there are CPU cores (the -i 0 parameter) and load balance the requests between those forked processes:

pm2 start server/index.js -i 0 --attach
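The same settings can also be kept in a PM2 ecosystem file instead of command-line flags. The file below is a hypothetical sketch, not part of the original repository: the process name and the environment block are assumptions, while the script path and instance count mirror the command above.

```javascript
// ecosystem.config.js (hypothetical file, not part of the original repo)
const config = {
  apps: [
    {
      name: 'facer', // assumed process name
      script: 'server/index.js', // same entry point as in the command above
      instances: 0, // 0 lets PM2 start one process per CPU core
      exec_mode: 'cluster', // load balance requests across the processes
      env: { NODE_ENV: 'production' }
    }
  ]
}

module.exports = config
```

With such a file in place, the app would be started with `pm2 start ecosystem.config.js`.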

Deploy

We will package our app as a very lean Docker Image, based on Alpine:Edge (less than 300 MB because size matters):

FROM gjovanov/node-alpine-edge

LABEL maintainer="Goran Jovanov <goran.jovanov@gmail.com>"

# Version

ADD VERSION .

# Environment variables

ENV NODE_ENV production

ENV HOST 0.0.0.0

ENV PORT 3000

ENV API_URL https://facer.xplorify.net

# Install packages & git clone source code and build the application

RUN apk add --update --no-cache --virtual .build-deps \

gcc g++ make git python && \

apk add --no-cache vips vips-dev fftw-dev libc6-compat \

--repository http://nl.alpinelinux.org/alpine/edge/testing/ \

--repository http://nl.alpinelinux.org/alpine/edge/main && \

cd / && \

git clone https://github.com/gjovanov/facer.git && \

cd /facer && \

npm i pm2 -g && \

npm i --production && \

npm run build && \

apk del .build-deps vips-dev fftw-dev && \

rm -rf /var/cache/apk/*

# Volumes

VOLUME /facer/data

WORKDIR /facer

EXPOSE 3000

# Define the Run command

CMD ["npm", "run", "start"]

facer-docker.Dockerfile

Then we can either:

- build the Docker image with

docker build -t gjovanov/facer .

- or use Travis Reeder’s versioning script

./build.sh

- or pull the image from Docker Hub

docker pull gjovanov/facer

Finally, we can run the Docker Container by:

docker run -d --name facer \

--hostname facer \

--restart always \

-e API_URL=https://facer.xplorify.net \

-p 8081:3000 \

-v /gjovanov/facer/data:/facer/data \

--net=bridge \

gjovanov/facer

Source code & Demo

Both the source code and a demo are available to try out.

Any suggestions for improvements or pull-requests are more than welcome.
