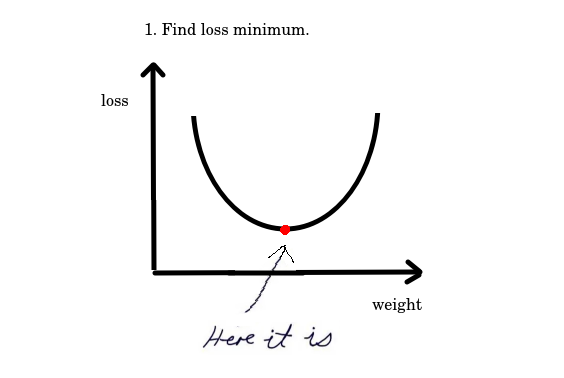

The task of an optimizer is to find the set of weights for which a neural network model yields the lowest possible loss. If you had only one weight and a loss function like the one depicted below, you wouldn’t have to be a genius to find the solution.

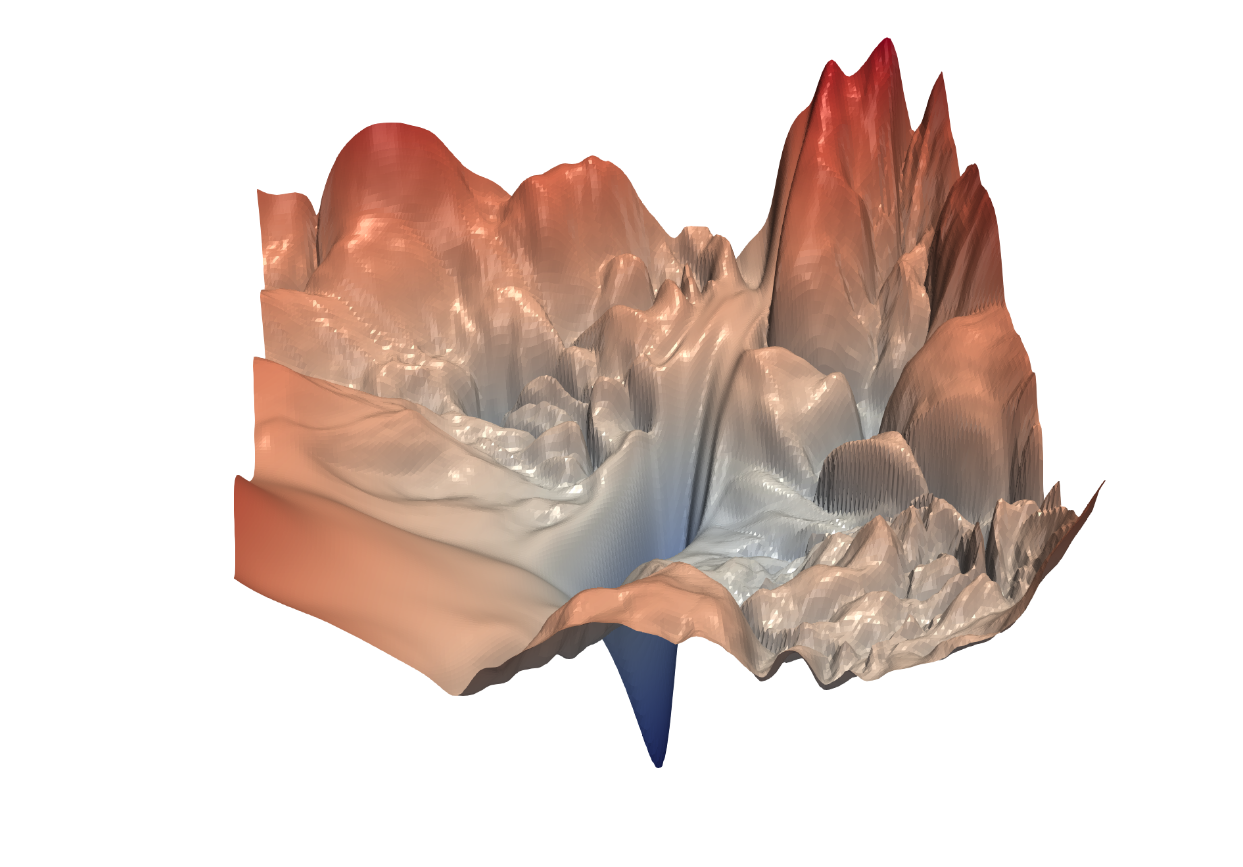

Unfortunately, you normally have a multitude of weights and a loss landscape that is far from simple and can no longer be shown in a 2D drawing.

The loss surface of ResNet-56 without skip connections visualized using a method proposed in https://arxiv.org/pdf/1712.09913.pdf.

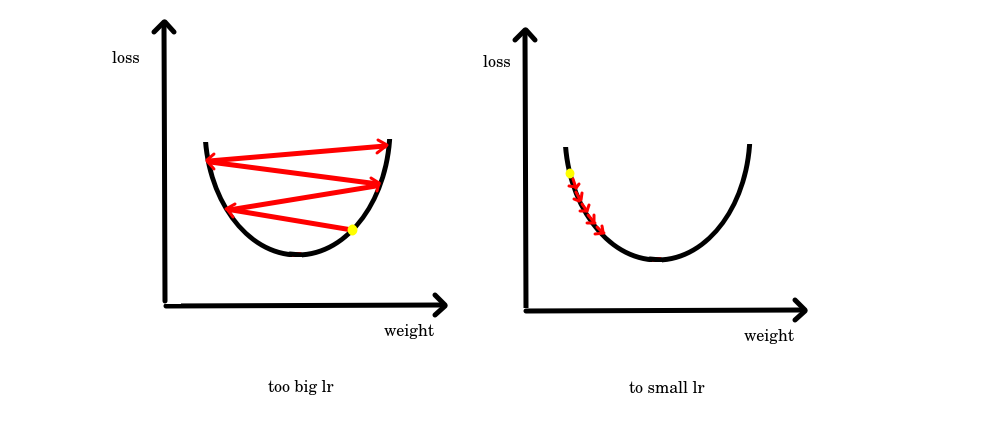

Finding a minimum of such a function is no longer a trivial task. The most common optimizers, like Adam or SGD, require very time-consuming hyperparameter tuning and can get stuck in local minima. The importance of choosing a hyperparameter like the learning rate can be summarized by the following picture:

A learning rate that is too large causes oscillations around the minimum, while one that is too small makes the learning process very slow.
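To make the effect of the learning rate concrete, here is a minimal sketch of plain gradient descent on a toy one-weight loss, f(w) = w². The loss, the function name `gradient_descent`, and the three learning-rate values are assumptions chosen for illustration only.

```python
def gradient_descent(lr, w0=1.0, steps=20):
    """Minimize the toy loss f(w) = w**2 and return every visited weight."""
    w = w0
    path = [w]
    for _ in range(steps):
        grad = 2.0 * w      # derivative of w**2
        w = w - lr * grad   # plain gradient descent update
        path.append(w)
    return path


# Hypothetical learning rates picked to show the three regimes.
too_big = gradient_descent(lr=0.95)      # overshoots: the sign of w flips every step
too_small = gradient_descent(lr=0.001)   # barely moves away from the start
reasonable = gradient_descent(lr=0.3)    # approaches the minimum at w = 0 quickly

for name, path in [("too big", too_big), ("too small", too_small), ("reasonable", reasonable)]:
    print(f"{name:>10}: first steps {path[:4]}, final |w| = {abs(path[-1]):.2e}")
```

With the large learning rate the weight jumps from one side of the minimum to the other on every step, while with the tiny one it is still close to its starting value after 20 steps.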

The recently proposed LookAhead optimizer makes the optimization process less sensitive to suboptimal hyperparameters and therefore lessens the need for extensive hyperparameter tuning.

It sounds like something worth exploring!

The algorithm

Intuitively, the algorithm chooses a search direction by looking ahead at the sequence of “fast weights” generated by another optimizer.

The optimizer keeps two sets of weights: fast weights θ and slow weights ϕ. Both are initialized with the same values. A standard optimizer (e.g. Adam, SGD, …) with a certain learning rate η is used to update the fast weights θ for a fixed number of steps k, resulting in some new values θ’.

Then a crucial thing happens: the slow weights ϕ are moved along the direction defined by the difference of the weight vectors, θ’ − ϕ. The length of this step is controlled by the parameter α, the slow weights learning rate, so the update is ϕ ← ϕ + α(θ’ − ϕ).
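The two-level update described above can be sketched in a few lines of dependency-free Python. This is only an illustration under stated assumptions, not a reference implementation: plain SGD stands in for the inner optimizer, the names `sgd_step`, `lookahead`, and `quadratic_grad` are hypothetical, the toy loss is made up, and resetting the fast weights to the slow weights at the start of each round follows the original Lookahead paper rather than the text above.

```python
def sgd_step(theta, grad_fn, lr):
    """One plain SGD step on the fast weights (stand-in for any inner optimizer)."""
    return [t - lr * g for t, g in zip(theta, grad_fn(theta))]


def lookahead(grad_fn, init, lr=0.1, k=5, alpha=0.5, outer_steps=50):
    """Sketch of Lookahead: k fast steps, then one interpolation step of the slow weights."""
    phi = list(init)                      # slow weights
    for _ in range(outer_steps):
        theta = list(phi)                 # fast weights restart from the slow weights
        for _ in range(k):                # inner optimizer runs for k steps with rate lr
            theta = sgd_step(theta, grad_fn, lr)
        # Move the slow weights along (theta' - phi), scaled by alpha.
        phi = [p + alpha * (t - p) for p, t in zip(phi, theta)]
    return phi


def quadratic_grad(w):
    """Gradient of the assumed toy loss f(w) = w[0]**2 + 3 * w[1]**2."""
    return [2.0 * w[0], 6.0 * w[1]]


final = lookahead(quadratic_grad, init=[1.0, 1.0])
print(final)  # both weights end up very close to the minimum at [0, 0]
```

Setting `alpha=1.0` makes the slow weights simply copy the fast weights, which reduces the sketch to the inner optimizer alone. Smaller values of `alpha` make the slow weights follow the fast ones more cautiously.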
