When I interviewed for a data science job, the interviewer asked me the following question. Later, when I was the interviewer, I asked candidates the same question: given a large dataset (more than 1,000 columns and 100,000 rows, or records), how would you select the useful features to build a (supervised) model?

This question is a good way to distinguish student-level data science knowledge from professional-level knowledge. As a student, you learn algorithms on clean, well-prepared datasets. In the business world, however, data scientists spend a lot of effort on data cleansing before they can build a machine learning model.

Back to our question:

Given a large dataset (more than 1,000 columns and 100,000 rows, or records), how do you select the useful features to build a (supervised) model?

In fact, there is no single correct answer to this question; it tests your reasoning and how clearly you explain it. In this post, I will share a few feature selection methodologies for handling it.

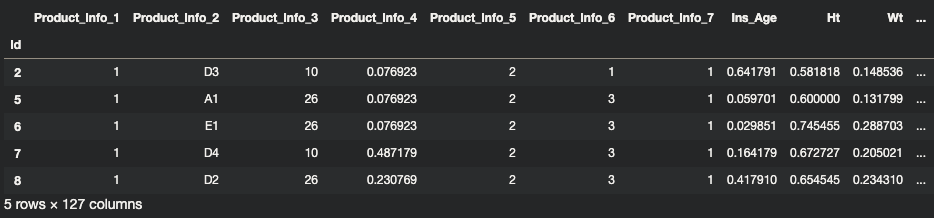

The demonstration Kaggle notebook is uploaded here; the dataset it uses is the Prudential Life Insurance underwriting data. The goal of the task is to predict the risk level (underwriting) of each life insurance applicant. For more feature selection implementations, see the Scikit-learn article.
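To make the question concrete, here is a minimal sketch of one common filter-style approach in scikit-learn. It is not the code from the Kaggle notebook and does not use the Prudential data. Instead it assumes a synthetic wide dataset built with `make_classification` (2,000 rows by 1,000 columns, 20 of them informative, plus 5 constant "dead" columns). It then drops zero-variance columns with `VarianceThreshold` and keeps the 50 columns with the best univariate ANOVA F-scores via `SelectKBest(f_classif, k=50)`. The sizes, the scoring function, and `k=50` are illustrative choices, not recommendations.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, VarianceThreshold, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for a wide dataset (assumption: NOT the Prudential data).
# 2,000 rows x 1,000 columns, of which only 20 carry signal about the label.
X, y = make_classification(
    n_samples=2000,
    n_features=1000,
    n_informative=20,
    n_redundant=0,
    random_state=0,
)

# Append 5 constant columns to mimic useless features often found in real data.
X = np.hstack([X, np.zeros((X.shape[0], 5))])

# Selection steps live inside the pipeline, so cross-validation re-fits them
# on each training fold and the held-out fold never influences which
# features are kept.
pipe = make_pipeline(
    VarianceThreshold(threshold=0.0),          # drop zero-variance columns
    SelectKBest(score_func=f_classif, k=50),   # keep 50 best ANOVA F-scores
    StandardScaler(),
    LogisticRegression(max_iter=1000),
)

scores = cross_val_score(pipe, X, y, cv=5)
print(f"mean CV accuracy: {scores.mean():.3f}")

# Fit once on all data to inspect what each selection step kept.
pipe.fit(X, y)
after_variance = pipe.named_steps["variancethreshold"].get_support()
kept = pipe.named_steps["selectkbest"].get_support()
print("columns after variance filter:", after_variance.sum())
print("features kept by SelectKBest:", kept.sum())
```

Placing the selectors in a `Pipeline` rather than filtering the full dataset up front is the key design choice here. Selecting features on all rows before cross-validation would leak label information into the evaluation and make the scores look optimistic.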
