Implementing feature selection methods on text classification

The size of variables or features is referred to as the dimensionality of a dataset. On text classification methods, the size of features could be enumerated a large number. In this post, we are going to implement tf-idf decomposition dimensionality reduction technique using Linear Discriminant Analysis-LDA.

Our pathway in this study:

1. Preparing Dataset

2. Transforming text to feature vectors

3. Applying filter methods

4. Applying Linear Discriminant Analysis

5. Building a Random Forest Classifier

6. Result comparison

All source codes and notebooks have been uploaded in this Github repository.

Problem Formulation

Enhancing the accuracy and reducing process time in text classification.

Data Exploration

We are using the “515K Hotel Reviews Data in Europe” from the Kaggle datasets. The data was scraped from Booking.com. All data in the file is publicly available to everyone already. Data is originally owned by Booking.com and you can download it thought this profile on Kaggle. The dataset which we need contains 515,000 positive and negative reviews.

Import libraries

The most important libraries that we have used are Scikit-Learn and pandas.

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import requests

import json

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.feature_selection import VarianceThreshold

from sklearn.metrics import accuracy_score, roc_auc_score

from sklearn.preprocessing import StandardScaler

from nltk.stem.snowball import SnowballStemmer

from string import punctuation

from textblob import TextBlob

import re

Preparing Dataset

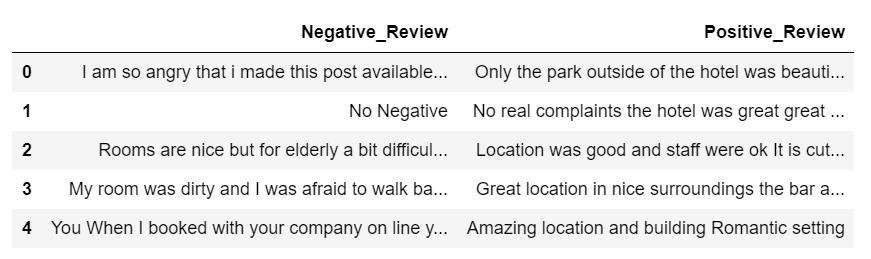

We are going to work just on two categories, positives and negatives. Therefore, we select 5,000 rows for each category and copy them into the Pandas Dataframe (5,000 for each part). We used Kaggle’s notebook for this project, therefore the dataset was loaded as a local file. If you are using another tool or running as a script you can download it. let’s take a glance at the dataset:

fields = ['Positive_Review', 'Negative_Review']

df = pd.read_csv(

'../input/515k-hotel-reviews-data-in-europe/Hotel_Reviews.csv',

usecols= fields, nrows=5000)

df.head()

Stemming words using NLTK SnowballStemmer:

stemmer = SnowballStemmer('english')

df['Positive_Review'] = df['Positive_Review'].apply(

lambda x:' '.join([stemmer.stem(y) for y in x.split()]))

df['Negative_Review'] = df['Negative_Review'].apply(

lambda x: ' '.join([stemmer.stem(y) for y in x.split()]))

Removing Stop-Words:

Exclude stopwords with countwordsfree.com list comprehension and pandas.DataFrame.apply.

url = "https://countwordsfree.com/stopwords/english/json"

response = pd.DataFrame(data = json.loads(requests.get(url).text))

SW = list(response['words'])

df['Positive_Review'] = df['Positive_Review'].apply(

lambda x: ' '.join([word for word in x.split() if word not in (SW)]))

df['Negative_Review'] = df['Negative_Review'].apply(

lambda x: ' '.join([word for word in x.split() if word not in (SW)]))

#feature-selection #lda #machine-learning #scikit-learn #text-classification #deep learning