Introduction

Improving the accuracy of a machine learning model takes more than fitting a single model and making predictions. Many of the winning models in industry and in competitions use ensemble techniques to perform better. One such technique is Gradient Boosting.

This article focuses on how Gradient Boosting Trees work for classification problems. We will also discuss some important parameters, along with the advantages and disadvantages of this method. Before that, let’s get a brief overview of ensemble methods.

What are Ensemble Techniques?

**Bias and Variance:** While building any model, our objective is to minimize both bias and variance, but in real-world scenarios reducing one usually comes at the cost of increasing the other. It is important to understand this trade-off and figure out what suits our use case.

Ensembles are built on the idea that a collection of weak predictors, when combined, gives a final prediction that performs better than any of the individual ones. Two common types are:

**i) Bagging:** Bootstrap Aggregation, or Bagging, is an ML technique in which a number of independent predictors are built, each on a sample drawn with replacement from the training data. The individual outcomes are then combined by averaging (regression) or majority voting (classification) to derive the final prediction. A widely used algorithm in this space is Random Forest.

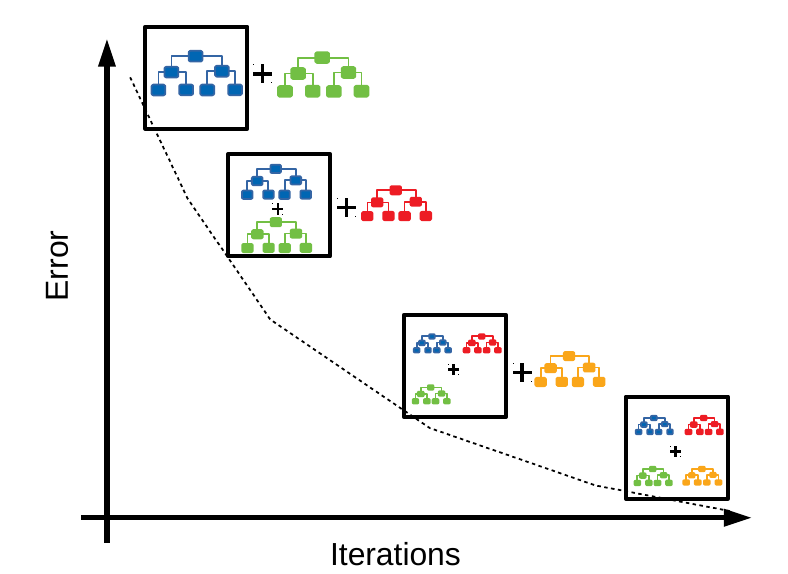
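To make the bagging idea concrete, here is a minimal pure-Python sketch. The one-feature threshold "stump" used as the weak predictor, the helper names (`bootstrap_sample`, `fit_stump`, `bagging_fit`, `bagging_predict`) and the toy data are all assumptions made for illustration. They do not come from any library, and Random Forest itself uses full decision trees with additional random feature selection.

```python
import random
from collections import Counter


def bootstrap_sample(rows, rng):
    """Draw len(rows) rows uniformly at random, with replacement."""
    return [rng.choice(rows) for _ in rows]


def fit_stump(rows):
    """Hypothetical weak predictor: the rule "predict 1 if x > threshold".

    Tries every observed x as the threshold and keeps the one that makes
    the fewest mistakes on the rows it was given.
    """
    best_threshold, best_errors = None, None
    for threshold, _ in rows:
        errors = sum(int(x > threshold) != y for x, y in rows)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def bagging_fit(rows, n_predictors, seed=0):
    """Fit each predictor independently on its own bootstrap sample."""
    rng = random.Random(seed)
    return [fit_stump(bootstrap_sample(rows, rng)) for _ in range(n_predictors)]


def bagging_predict(thresholds, x):
    """Combine the individual predictions by majority vote (classification)."""
    votes = Counter(int(x > t) for t in thresholds)
    return votes.most_common(1)[0][0]


# Toy data, made up for this sketch: (x, label), label is 1 when x is large.
data = [(0.5, 0), (1.0, 0), (1.5, 0), (2.0, 0),
        (3.0, 1), (3.5, 1), (4.0, 1), (4.5, 1)]

model = bagging_fit(data, n_predictors=25)
print(bagging_predict(model, 0.0), bagging_predict(model, 10.0))
```

An odd number of predictors is used so that a binary majority vote cannot tie. For regression, the vote in `bagging_predict` would be replaced by an average of the individual predictions.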

**ii) Boosting:** Boosting is an ML technique in which weak learners are combined into a strong learner. Weak learners are classifiers that consistently perform at least slightly better than chance, irrespective of the distribution over the training data. In Boosting, the predictors are built sequentially, with each subsequent predictor learning from the errors of the previous ones. Gradient Boosting Trees (GBT) is a commonly used method in this category.
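The sequential "learn from the previous errors" idea can be sketched in a few lines of pure Python. This is a simplified illustration under stated assumptions, not a production algorithm: it handles binary labels with log loss, uses a one-split regression stump as the weak learner, and the names (`fit_regression_stump`, `boost_fit`, `boost_predict_proba`), the learning rate and the toy data are all hypothetical. Library implementations differ in many details.

```python
import math


def sigmoid(score):
    return 1.0 / (1.0 + math.exp(-score))


def fit_regression_stump(xs, targets):
    """Weak learner: one threshold, predicting the mean target on each side."""
    best = None
    for threshold in xs:
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)  # never empty: threshold is in xs
        right_mean = sum(right) / len(right) if right else left_mean
        sse = sum((t - (left_mean if x <= threshold else right_mean)) ** 2
                  for x, t in zip(xs, targets))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]


def stump_predict(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def boost_fit(xs, ys, n_rounds=20, learning_rate=0.5):
    """Build stumps one after another, each fitted to the current errors."""
    positive_rate = sum(ys) / len(ys)  # assumes both classes are present
    base_score = math.log(positive_rate / (1.0 - positive_rate))
    scores = [base_score] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        # Errors of the ensemble so far: label minus predicted probability.
        residuals = [y - sigmoid(s) for y, s in zip(ys, scores)]
        stump = fit_regression_stump(xs, residuals)
        stumps.append(stump)
        scores = [s + learning_rate * stump_predict(stump, x)
                  for x, s in zip(xs, scores)]
    return base_score, learning_rate, stumps


def boost_predict_proba(model, x):
    base_score, learning_rate, stumps = model
    score = base_score + learning_rate * sum(stump_predict(s, x) for s in stumps)
    return sigmoid(score)


# Toy data, made up for this sketch: label is 1 when x is large.
xs = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
ys = [0, 0, 0, 0, 1, 1, 1, 1]

model = boost_fit(xs, ys)
print(boost_predict_proba(model, 0.5), boost_predict_proba(model, 4.5))
```

Unlike bagging, the stumps here are not independent: each one is fitted to what the previous ones still get wrong, and the small learning rate keeps any single stump from dominating the final score.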
