There exist a plethora of articles on quantization, but they generally cover only the surface level theory or provide a simple overview. In this article, I’ll explain how quantization is actually implemented by deep learning frameworks.

Before getting into quantization, it’s good to understand the basic difference between two key concepts: float (floating-point) and int (fixed-point).

Fixed-point vs Floating-point

Fixed-point

Fixed-point basically means there are a fixed number of bits reserved for storing the integer and fractional parts of a number. For integers, we don’t have fractions. Thus, all the bits except one represent the integer. One bit represents the sign bit. So in ‘int8’, 7 bits will be used to represent the integer, and one bit will indicate whether the number is positive or negative. In an unsigned integer, there’s no sign bit—all bits represent the integer.

EXAMPLES (8-bit unsigned int)

33 - 00100001

9 - 00001001

Floating-point

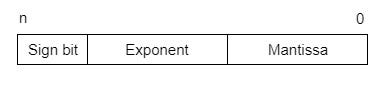

Floating-points don’t have a fixed number of bits assigned to integer and fractional parts. Instead, it reserves a certain number of bits for the number (called the mantissa), and a certain number of bits to say where within that number the decimal place sits (called the exponent).

A number is represented by the formula(-1)**s (1+m)*(2**(e-bias)), where s is the sign bit, m is the mantissa, e is the exponent value, and _bias_is the bias number.

Why it matters?

#computer-vision #quantization #machine-learning #deep-learning #heartbeat #deep learning