Critique of pure interpretation

The scientific method, the tool that has served us to find explanations about how things work and to make decisions, has brought us a challenge that, as of 2020, we have probably still not overcome: giving useful narratives to numbers, also known as “interpretation”.

To clarify, the scientific method is the pipeline of finding evidence to prove or disprove hypotheses. Science, the study of how things work, covers everything from the natural sciences to the economic sciences. Most delightful of all, by evidence we mean, and humanity at large understands, “data”. And data cannot be anything less than numbers.

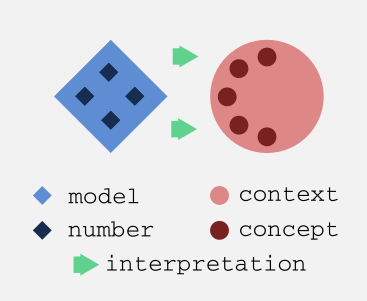

Without loss of generality, the problem of interpretation is particularly entertaining along the path of statistical analysis within the scientific method pipeline. This means finding models written in a mathematical language and then finding an interpretation for them within the context that delivered the data.

Interpreting a model has two crucial implications that many scientists and science technicians have long skipped (hopefully not forgotten). The first is that if there is a model to interpret now, there must have been a research question asked earlier, in a context that delivered the data used to build that model. The second is that the narratives we create about our model can do much more by expressing ideas about a number within the context of the research question than purely inside the model. After all, as of 2020, decisions are made by humans based on the meaning of those numbers, not really by computers. This last statement is important, because in the 21st century we might actually reach the point where computers take over many tasks from us and end up making decisions for us. For this they will need to communicate those decisions across their network. Only then will the human narratives stop counting, since computers only understand numbers.

As statisticians, we have adopted the practice of finding problems to solve, questions to answer and answers to explain using whatever data is available. This mindset has kept us running in a circle of nonsense narratives and interpretations, because problems are not found or looked for. Problems and questions emerge from the ongoing interactions and reactions of different phenomena. This implies that statistical models and other analytical approaches are tools to be applied to the central problem or question; they are not the spine.

This ugly art of fitting a linear regression on some data and saying that “the beta coefficient is the number of units by which y increases when x increases by one unit”, or of calculating an average and saying that “it is the value around which we can find the majority of the data points”, is a ruthless product that we statisticians have been offering to the scientific method.
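To make the two stock phrases concrete, here is a minimal sketch in Python. The data, the variable names and the helper `ols_slope_intercept` are all made up for illustration; they do not come from any real study. It shows where the “beta coefficient” comes from, and that the stock sentence about the average can be literally false for a skewed sample.

```python
from statistics import mean, median

def ols_slope_intercept(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

# Hypothetical data: x and y have no context here, which is the point.
x = [1, 2, 3, 4, 5]
y = [3, 5, 7, 9, 11]
slope, intercept = ols_slope_intercept(x, y)
print(f"beta = {slope}, intercept = {intercept}")

# Hypothetical skewed sample: nine similar values and one extreme value.
values = [20, 21, 22, 23, 24, 25, 26, 27, 28, 284]
avg = mean(values)
# Share of data points that lie within 10 units of the average.
share_near_avg = sum(abs(v - avg) <= 10 for v in values) / len(values)
print(f"mean = {avg}, median = {median(values)}, share near mean = {share_near_avg}")
```

Nothing in the output says what one unit of x is, or whether a change of that size matters to anybody. That part can only come from the research question.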

The bubble of interpretation

Teaching statistics has made clear to us that people can perfectly understand how the models work and how to train them to get the numbers. However, what we have still not digested is that, of all the numbers produced when training models, most simply cannot be communicated to non-statistical people. Let us present some of the numbers that are hardest to communicate:

- The p-value is one particular concept that may in fact deserve an entire essay of its own. On social media, for instance, we constantly see people asking for an explanation of the p-value, and right away there is a storm of statisticians rushing to give their own interpretation.

- The odds ratio stars in this debate. After 10 years of working in this field, we must admit that we have never managed to explain to an expert in another field how to think about the odds ratio. Even Wikipedia has tried and, in our opinion, has more than failed at it.

- The beta coefficients of a dummy variable in a logistic regression are of the same kind. We know that, once exponentiated, they are the odds ratios of each category with respect to the baseline category. But how can we make that understandable and actionable in practice? This we simply do not know.
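As a small illustration of the last two items, the sketch below uses a made-up 2x2 table (the counts and group names are hypothetical). It relies on a standard result: for a logistic regression with an intercept and a single dummy variable, and no empty cells, the maximum-likelihood coefficient of the dummy equals the logarithm of the odds ratio of the table, so no fitting routine is needed here.

```python
import math

# Hypothetical counts per group: (events, non_events).
baseline = (20, 80)   # 20 events among 100 cases in the baseline category
category = (40, 60)   # 40 events among 100 cases in the other category

def odds(events, non_events):
    """Odds of the event: events per non-event."""
    return events / non_events

def risk(events, non_events):
    """Proportion of cases in which the event occurred."""
    return events / (events + non_events)

odds_ratio = odds(*category) / odds(*baseline)

# Closed-form coefficient of the dummy in the single-dummy logistic model.
beta = math.log(odds_ratio)

# The same table expressed as proportions, which depend on the context.
risk_baseline = risk(*baseline)
risk_category = risk(*category)
risk_ratio = risk_category / risk_baseline

print(f"beta = {beta:.3f}, exp(beta) = odds ratio = {odds_ratio:.3f}")
print(f"event share: {risk_baseline:.0%} vs {risk_category:.0%} (ratio {risk_ratio:.1f})")
```

The odds ratio of about 2.67 and the doubling of the event share describe the same table, yet only the second reads as a statement about the cases themselves. Choosing which statement to make, and what action follows from it, is not something the coefficient can do for us.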

This problem is central to the scientific and statistical community. The models that we train lose their value because of this lack of interpretation.

After some years of talking with colleagues about this problem and looking for a framework that clears it up, we have come to one obvious conclusion: the interpretation process exists, and can only happen, within a given context. It makes no sense to fight for the interpretation process inside the model. The inner processes of the model are all numerical, and their results can be communicated to and understood by numerical, statistical people only. Interpreting numbers inside the model is a bubble of rephrasing. To interpret the results of a model so that they become tools for taking action, it is essential to keep in mind the context from which the data comes and in which the research questions are asked.

#interpretation #model-interpretability #semantics #data #data-analysis