What is bias?

Algorithms are everywhere around us. As humans, we use and trust them every day. They continually make countless decisions for us, and everyone from small businesses to multinational companies relies on algorithmic decision-making in crucial scenarios. Although algorithms may seem unbiased and purely calculated, they are no more objective than humans, because at the end of the day they are written by humans.

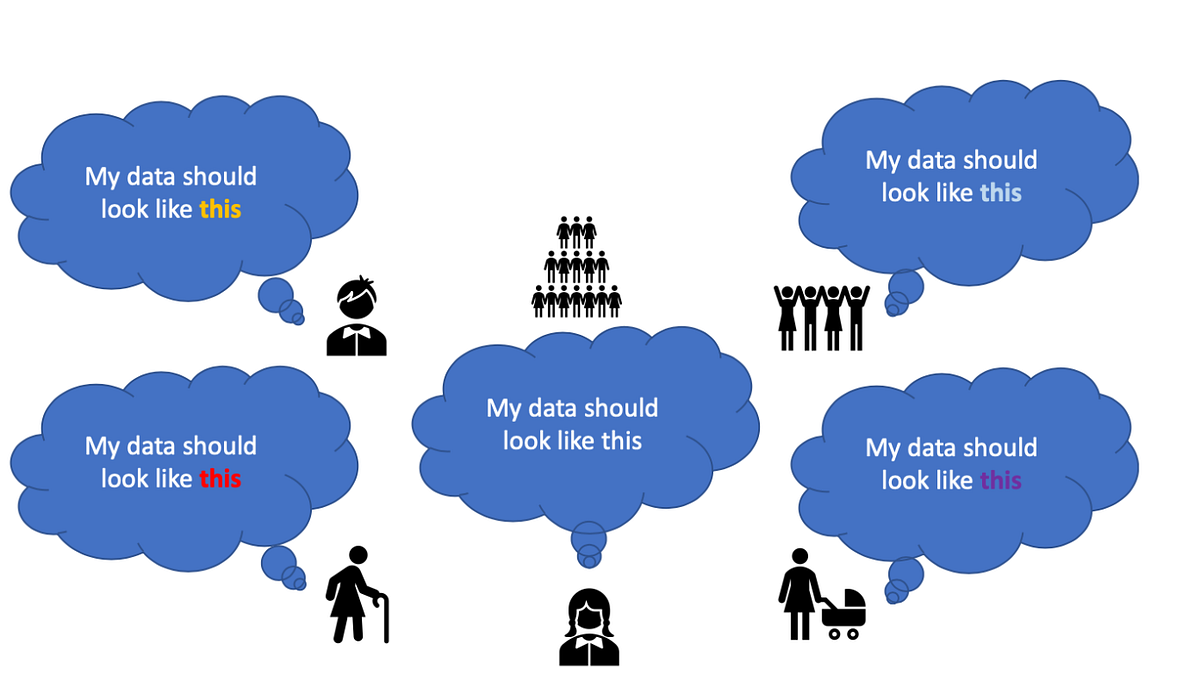

This is where “algorithmic bias” comes into play. Algorithmic bias most often arises in machine learning and deep learning, the techniques by which many computers now make important decisions. Both techniques depend heavily on huge amounts of data, so the kind of data that goes in matters a great deal. Ultimately, the people who supply this data play a significant role in the resulting decisions, as illustrated in the figure. Typically, a group of people collects and enters the training data for a machine learning model. So what goes wrong when that group enters data in a biased way?

Let’s take an example: Company A, a mug-producing company, uses an image classification algorithm that only recognizes certain types of mugs. So what about mugs with different characteristics and features from diverse geographical locations?

Training data of Company A: every mug in the data has a handle and a specific shape.

Image classification input data: does this mean the mugs in the picture below cannot be identified, because their handles are missing or placed in an unusual way?
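The mug problem can be made concrete with a toy sketch. Everything in it is hypothetical: the three yes/no features, the tiny training set, and the choice of a one-rule "decision stump" learner are assumptions for illustration, not Company A's actual system (a real image classifier would learn from pixels, not hand-picked features). The point it shows is that when every mug in the training data has a handle, "has a handle" becomes the rule the model learns.

```python
# Toy sketch (hypothetical data and features): a decision stump trained only
# on mugs that have handles learns "mug == has a handle".

FEATURES = ["has_handle", "is_ceramic", "is_cylindrical"]

# Hypothetical training set. Every mug (label 1) has a handle;
# the non-mugs (label 0) are a drinking glass and a bowl without handles.
TRAIN = [
    ({"has_handle": 1, "is_ceramic": 1, "is_cylindrical": 1}, 1),  # classic mug
    ({"has_handle": 1, "is_ceramic": 1, "is_cylindrical": 1}, 1),  # classic mug
    ({"has_handle": 1, "is_ceramic": 0, "is_cylindrical": 1}, 1),  # glass mug
    ({"has_handle": 0, "is_ceramic": 0, "is_cylindrical": 1}, 0),  # drinking glass
    ({"has_handle": 0, "is_ceramic": 1, "is_cylindrical": 0}, 0),  # bowl
]


def train_stump(data):
    """Return (feature, value, accuracy) for the single rule
    'predict mug if x[feature] == value' that fits the training data best."""
    best = None
    for feature in FEATURES:
        for value in (0, 1):
            # Count training examples where the rule's prediction matches the label.
            correct = sum(int(x[feature] == value) == label for x, label in data)
            accuracy = correct / len(data)
            if best is None or accuracy > best[2]:
                best = (feature, value, accuracy)
    return best


def predict(stump, x):
    """Apply the learned rule: 1 means 'mug', 0 means 'not a mug'."""
    feature, value, _ = stump
    return int(x[feature] == value)


stump = train_stump(TRAIN)
print(stump)  # ('has_handle', 1, 1.0)

# A handleless ceramic mug, common in some parts of the world.
handleless_mug = {"has_handle": 0, "is_ceramic": 1, "is_cylindrical": 1}
print(predict(stump, handleless_mug))  # 0, i.e. "not a mug"
```

Because "has a handle" separates mugs from non-mugs perfectly in this skewed training set, the learner picks it as the whole rule and rejects the handleless mug. Nothing in the algorithm itself is "prejudiced"; the bias comes entirely from what the training data left out.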

To be precise, this is still a small problem. The bigger problems arise when racial, gender, age, and legal differences come into play on a global level. A few real-world failures caused by algorithmic bias include:

· In 2015, Google’s popular photo recognition tool, Google Photos, erroneously labelled a photo of two Black people as “gorillas”.

· In the US, a crime-prediction algorithm used to estimate the risk of reoffending was found to incorrectly flag Black defendants as likely repeat offenders far more often than white defendants.

These are just a few examples.
