If I asked you to build hand gesture recognition in Python, what is the first solution that would pop into your mind: training a CNN, contours, or a convex hull? These sound good and feasible, but when it comes to actually using these techniques, the detection is not very good and requires special conditions (such as a proper background, or conditions similar to the ones you used while training).

Recently I came across a super cool library called Mediapipe, which makes things much simpler for us. I suggest you go through its official site to read more about it, because the site explains pretty much everything the library provides. What I will do in this article is show how I used this library to come up with some great projects, because that’s what made you land on this article.

Before we begin with the real code, let’s take some time to appreciate this library. I am really fascinated by how easy it is to use and to do innovative things with, things I otherwise found very difficult to code from scratch. You don’t even need a GPU; everything runs pretty smoothly even on a regular CPU. On top of that, it is backed by Google, which gives another reason to use it. In this article I will stick to Python, but the library supports nearly all platforms (Android, iOS, C++). At the time of writing, only a few modules are available for Python, but don’t worry: it is still evolving fast and you can expect more very soon.

Introduction

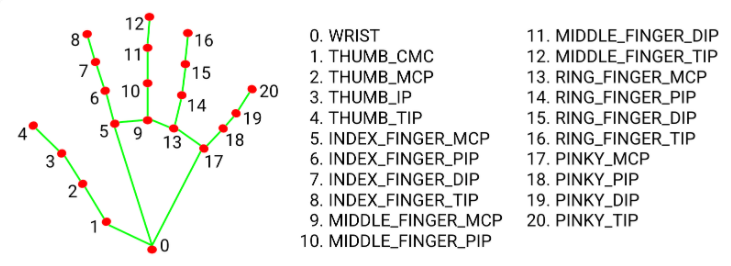

In this article, I will use the hands module of this library to create 2 cool projects. The hands module produces a 21-point localization of your hand. This means that if you supply a hand image to this module, it returns a vector of 21 points giving the coordinates of 21 important landmarks on your hand. If you want to know how it does that, check the documentation on their page.

The image above is taken from Mediapipe’s official website; see the site for full working details.
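To make the idea of "image in, 21 points out" concrete, here is a minimal sketch of how the landmarks might be read from a single image. It assumes the `mp.solutions.hands` interface of the Mediapipe Python package and OpenCV for the colour conversion; the function names `detect_hand_landmarks` and `to_pixel_coords` are my own, not part of the library, and the coordinates are assumed to come back normalized to the image size, which is why they are scaled to pixels.

```python
NUM_HAND_LANDMARKS = 21  # the hands module reports 21 points per hand


def to_pixel_coords(normalized, width, height):
    """Convert normalized (x, y) pairs to integer pixel positions.

    Values are clamped so that points slightly outside the frame
    still map to a valid pixel.
    """
    pixels = []
    for x, y in normalized:
        px = min(max(int(x * width), 0), width - 1)
        py = min(max(int(y * height), 0), height - 1)
        pixels.append((px, py))
    return pixels


def detect_hand_landmarks(image_bgr):
    """Return 21 (x, y) pixel points for the first detected hand, or [] if none.

    `image_bgr` is assumed to be an image array as loaded by cv2.imread().
    """
    # Imported here so to_pixel_coords() stays usable without these packages.
    import cv2
    import mediapipe as mp

    height, width = image_bgr.shape[:2]
    # Mediapipe expects RGB input, while OpenCV loads images as BGR.
    image_rgb = cv2.cvtColor(image_bgr, cv2.COLOR_BGR2RGB)

    # static_image_mode=True treats the input as an unrelated single image.
    with mp.solutions.hands.Hands(static_image_mode=True, max_num_hands=1) as hands:
        results = hands.process(image_rgb)

    # multi_hand_landmarks is None when no hand was found.
    if not results.multi_hand_landmarks:
        return []

    hand = results.multi_hand_landmarks[0]
    normalized = [(lm.x, lm.y) for lm in hand.landmark]
    return to_pixel_coords(normalized, width, height)
```

Calling `detect_hand_landmarks(cv2.imread("hand.jpg"))` (with `hand.jpg` standing in for any hand photo of yours) should give a list of 21 points when a hand is detected, and an empty list otherwise.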

The points mean the same thing irrespective of your input image: point 4 is always the tip of your thumb, and point 8 is always the tip of the index finger. So once you have the 21-point vector, it’s up to your creativity what kind of project you create.
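As a small illustration of what fixed indices buy you, the sketch below measures how far apart the thumb tip (point 4) and the index fingertip (point 8) are. It assumes the 21 points are already available as a list of (x, y) pixel pairs; `pinch_distance`, `is_pinching` and the 40-pixel threshold are hypothetical names and values chosen for this example, not something the library provides.

```python
import math

THUMB_TIP = 4         # landmark index of the thumb tip
INDEX_FINGER_TIP = 8  # landmark index of the index fingertip


def pinch_distance(landmarks):
    """Euclidean distance between the thumb tip and the index fingertip.

    `landmarks` is assumed to be a sequence of 21 (x, y) points.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 landmarks, got %d" % len(landmarks))
    tx, ty = landmarks[THUMB_TIP]
    ix, iy = landmarks[INDEX_FINGER_TIP]
    return math.hypot(ix - tx, iy - ty)


def is_pinching(landmarks, threshold=40.0):
    """Hypothetical gesture check: the two fingertips are closer than `threshold`."""
    return pinch_distance(landmarks) < threshold
```

Because the indices never change, the same two lines of lookup work for any hand image; a distance like this could then be mapped to whatever your project needs.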
