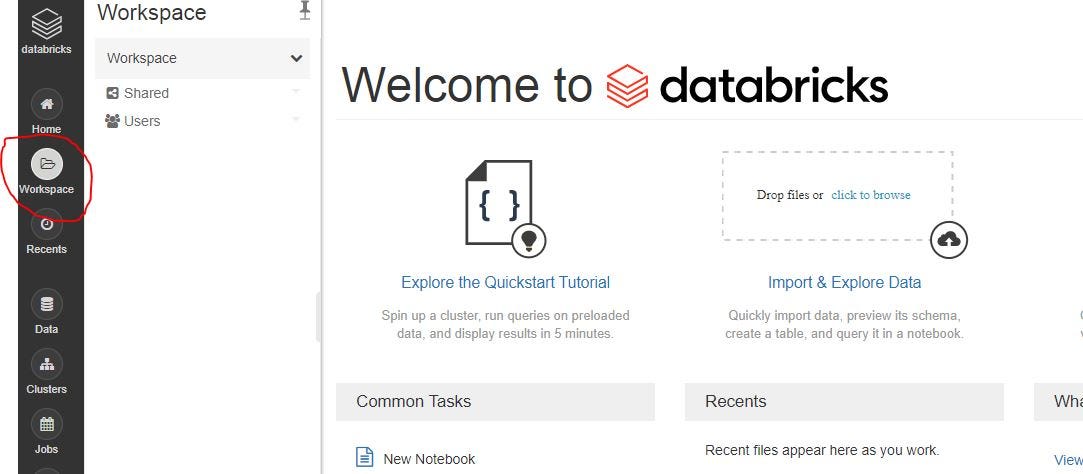

Many companies today use Apache Spark to process large datasets. If you are not using Spark, you may be spending far more time than you need to executing queries on big data, so learning it gives you a leg up in industry. However, installing Spark on your local machine can be complicated, and unless you have a powerful (and expensive) system, it is not worth the hassle (trust me!). Fortunately, Databricks provides Spark infrastructure without the convoluted installation headaches, and you can use it for free! Here are the steps to get started!

1. Download the JDBC driver

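A JDBC (Java Database Connectivity) driver is a software component that enables a Java application to interact with a database (https://en.wikipedia.org/wiki/JDBC_driver). Because Spark runs on the JVM, it needs the MySQL JDBC driver to read from and write to a MySQL database.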
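To make the role of the driver more concrete, here is a minimal sketch of how Spark's JDBC data source is typically pointed at a MySQL table. The helper name `mysql_jdbc_options` and all connection values are hypothetical; the option keys (`url`, `dbtable`, `user`, `password`, `driver`) are Spark's JDBC reader options, and `com.mysql.jdbc.Driver` is the driver class name used by the 5.1.x Connector/J release downloaded below.

```python
# Hypothetical helper: builds the option dictionary that Spark's JDBC data
# source expects when reading a MySQL table through Connector/J.
def mysql_jdbc_options(host, database, table, user, password, port=3306):
    """Return Spark JDBC reader options for a MySQL table (a sketch)."""
    return {
        # Connector/J URL format: jdbc:mysql://<host>:<port>/<database>
        "url": f"jdbc:mysql://{host}:{port}/{database}",
        "dbtable": table,
        "user": user,
        "password": password,
        # Driver class in Connector/J 5.1.x (8.x renamed it to
        # com.mysql.cj.jdbc.Driver).
        "driver": "com.mysql.jdbc.Driver",
    }
```

In a PySpark session where the driver JAR is on the classpath (for example via the `spark.jars` setting), these options could be passed as `spark.read.format("jdbc").options(**opts).load()`. The host, database, table, and credentials are placeholders.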

In a Jupyter notebook, run these two commands (or, if you are a Linux user, run them in a bash terminal without the leading `!`):

i) Download the necessary JDBC driver for MySQL

```
!wget https://dev.mysql.com/get/Downloads/Connector-J/mysql-connector-java-5.1.45.tar.gz
```
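If `wget` is not installed on your machine, the same download can be done from Python with the standard library. This is a minimal sketch; the helper `download_file` is hypothetical, and it skips the download when the file is already present so that re-running the notebook cell is cheap.

```python
import os
import urllib.parse
import urllib.request


def download_file(url, dest_dir="."):
    """Download url into dest_dir and return the local file path.

    Skips the download if the file already exists.
    """
    # Use the last path component of the URL as the local file name.
    filename = os.path.basename(urllib.parse.urlparse(url).path)
    local_path = os.path.join(dest_dir, filename)
    if not os.path.exists(local_path):
        os.makedirs(dest_dir, exist_ok=True)
        # Write to a temporary name first so an interrupted download
        # is not mistaken for a complete file on the next run.
        tmp_path = local_path + ".part"
        urllib.request.urlretrieve(url, tmp_path)
        os.replace(tmp_path, local_path)
    return local_path
```

For example, `download_file("https://dev.mysql.com/get/Downloads/Connector-J/mysql-connector-java-5.1.45.tar.gz")` would save the archive to the current directory and return its path.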

ii) Extract the JDBC driver JAR file

```
!tar zxvf mysql-connector-java-5.1.45.tar.gz
```
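Similarly, here is a hedged Python sketch of the extraction step using the standard `tarfile` module. The helper `extract_jars` is hypothetical; it unpacks the archive and returns the paths of the `.jar` files it contained, since the JAR is the file Spark ultimately needs.

```python
import os
import tarfile


def extract_jars(archive_path, dest_dir="."):
    """Extract a .tar.gz archive and return the paths of the .jar files in it."""
    with tarfile.open(archive_path, "r:gz") as tar:
        # Python versions with extraction filters support filter="data",
        # which rejects unsafe members such as absolute paths or paths
        # that would escape dest_dir.
        if hasattr(tarfile, "data_filter"):
            tar.extractall(dest_dir, filter="data")
        else:
            tar.extractall(dest_dir)
        # Keep only regular-file members whose names end in .jar.
        jars = [m.name for m in tar.getmembers()
                if m.isfile() and m.name.endswith(".jar")]
    return sorted(os.path.join(dest_dir, name) for name in jars)
```

For example, `extract_jars("mysql-connector-java-5.1.45.tar.gz")` should return the path of the driver JAR inside the extracted folder; the exact file name depends on the Connector/J release.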
