How to serve machine learning models with ease

One of the biggest challenges in many data science projects is model deployment. In some cases, you can make your predictions offline in batches, but in other scenarios, you have to invoke your model in real time.

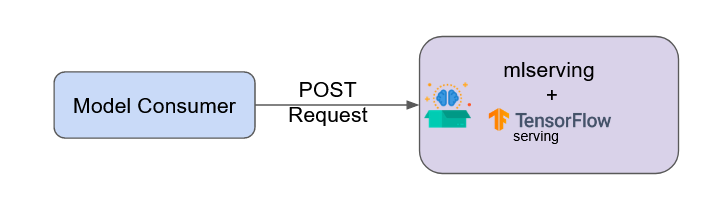

At the same time, more and more model servers, such as [TensorFlow Serving](https://www.tensorflow.org/tfx/guide/serving) and [TorchServe](https://pytorch.org/serve/), are being released. There are many good reasons to use these solutions in production, since they are fast and efficient, but they don't encapsulate the model-specific technicalities and implementation details; those are still left to you.

That’s why I created [mlserving](https://pypi.org/project/mlserving/), a Python package that helps data scientists focus more of their firepower on the machine-learning logic and less on the server technicalities.

mlserving tries to generalize the prediction flow, whether you implement the prediction serving yourself or use another serving application for it.
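To make the idea of a "generalized prediction flow" more concrete, here is a minimal sketch in plain Python. It is **not** mlserving's API. The `Predictor` class, its `pre_process`/`predict`/`post_process` hooks, and the `run_prediction` and `handle_json_request` helpers are hypothetical names chosen for illustration, and `ThresholdPredictor` is a toy stand-in for a real trained model.

```python
import json
from abc import ABC, abstractmethod
from typing import Any


class Predictor(ABC):
    """Hypothetical interface (not mlserving's): all model logic lives here."""

    def pre_process(self, input_data: Any) -> Any:
        # Default: pass the raw request payload through unchanged.
        return input_data

    @abstractmethod
    def predict(self, features: Any) -> Any:
        """Run the model on pre-processed features."""

    def post_process(self, prediction: Any) -> Any:
        # Default: return the raw model output unchanged.
        return prediction


def run_prediction(predictor: Predictor, input_data: Any) -> Any:
    """The generalized flow: pre-process -> predict -> post-process."""
    features = predictor.pre_process(input_data)
    prediction = predictor.predict(features)
    return predictor.post_process(prediction)


def handle_json_request(predictor: Predictor, body: str) -> str:
    """Thin transport adapter: parse JSON in, run the flow, serialize JSON out.

    Any web framework or serving application could call this; the predictor
    itself never needs to know which one.
    """
    payload = json.loads(body)
    result = run_prediction(predictor, payload)
    return json.dumps(result)


class ThresholdPredictor(Predictor):
    """Toy stand-in for a trained model: labels values above a threshold as 1."""

    def __init__(self, threshold: float = 0.5):
        self.threshold = threshold

    def pre_process(self, input_data):
        # Extract the numeric feature list from the request payload.
        return [float(x) for x in input_data["values"]]

    def predict(self, features):
        return [x > self.threshold for x in features]

    def post_process(self, prediction):
        # Convert booleans into a JSON-friendly response body.
        return {"labels": [int(p) for p in prediction]}


if __name__ == "__main__":
    model = ThresholdPredictor(threshold=0.5)
    print(run_prediction(model, {"values": [0.2, 0.9]}))        # {'labels': [0, 1]}
    print(handle_json_request(model, '{"values": [0.7, 0.1]}'))  # {"labels": [1, 0]}
```

The design choice worth noting is the split: the predictor only knows about data and the model, while `handle_json_request` only knows about the wire format. Swapping a hand-rolled server for another serving application would then mean replacing the adapter, not rewriting the model logic.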
