Reinforcement Learning (RL) is a powerful class of machine learning that, unlike supervised learning (another powerful class), does not require labeled data to train an agent to make intelligent decisions. RL revolves around two elements:

- Environment: The (simulation) world with which the actor/machine (i.e. agent) interacts.

- Agent: The actor (e.g. a robot or a computing machine) that the RL algorithm trains to behave independently and intelligently.

Most RL resources talk extensively (and in most cases solely) about training techniques for RL agents (agent-environment interaction) and pay little to no attention to developing the environment. That makes sense, because developing the RL algorithm is the **MAIN** goal at the end of the day. Methods such as Dynamic Programming, Q-learning, SARSA, Monte Carlo, and Deep Q-Learning focus solely on training the agent (often called the RL-agent).

An RL-AGENT learns to behave intelligently when it interacts with the ENVIRONMENT and receives a REWARD or PENALTY for its ACTION. But how can we train an agent if we do not have an environment? In most practical applications, programmers have to develop their own training environment.

In this blog, we will learn how to develop a simple RL environment, the Hello World of RL: the Grid World environment!

But first, let's learn some fundamentals about the environment.

Fundamentals of RL Environment

The following are the necessities of an RL environment:

- A set of states/observations for the environment.

- A reward or penalty passed from the environment to the agent.

- A set of actions for the agent, within the boundaries of the environment.

Simply put, an RL environment contains information about all possible actions that an agent can take, the states the agent can reach by taking those actions, and the reward or penalty (negative reward) the environment gives the agent in return for the interaction.
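To make these three ingredients concrete, here is a minimal sketch of what an environment interface could look like in Python. The class name `Environment`, the method names `reset` and `step`, and the `(next_state, reward, done)` return shape are assumptions borrowed from a common convention; they are not prescribed by anything above.

```python
from abc import ABC, abstractmethod
from typing import Hashable, Sequence, Tuple

# Hypothetical type aliases: any hashable value can serve as a state or action.
State = Hashable
Action = Hashable


class Environment(ABC):
    """Hypothetical minimal interface for an RL environment."""

    @property
    @abstractmethod
    def actions(self) -> Sequence[Action]:
        """The action set: every action the agent may choose from."""

    @abstractmethod
    def reset(self) -> State:
        """Put the environment in its initial state and return that state."""

    @abstractmethod
    def step(self, action: Action) -> Tuple[State, float, bool]:
        """Apply the action and return (next_state, reward, done).

        A penalty is simply a negative reward.
        """
```

A concrete environment would subclass `Environment` and fill in the three members, so the agent code only ever talks to `reset` and `step`.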

Grid-World Environment

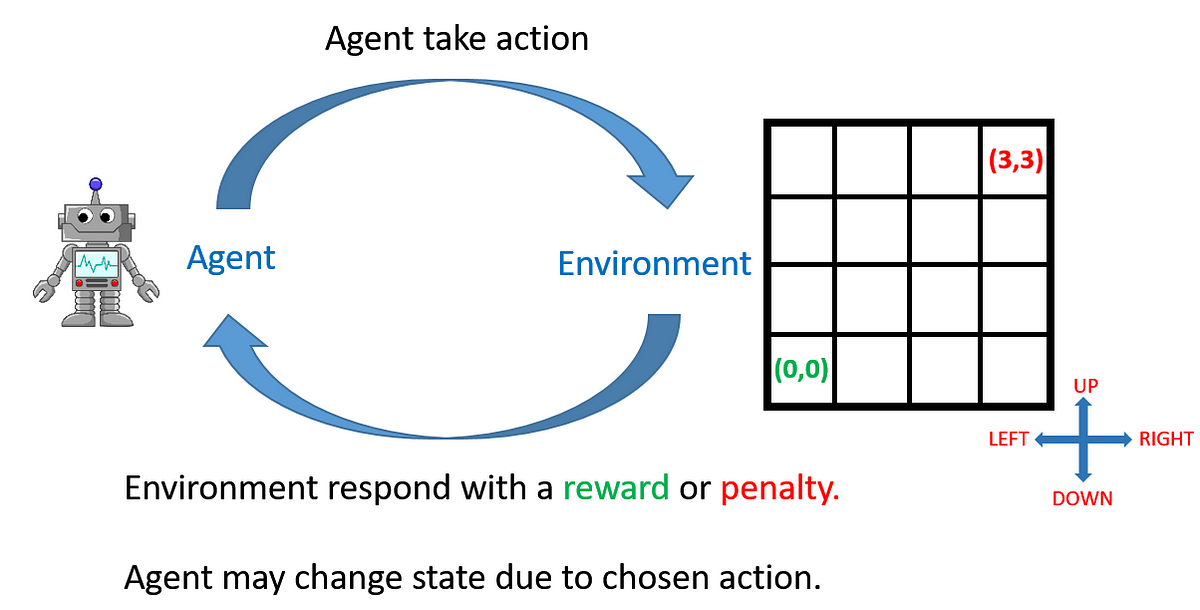

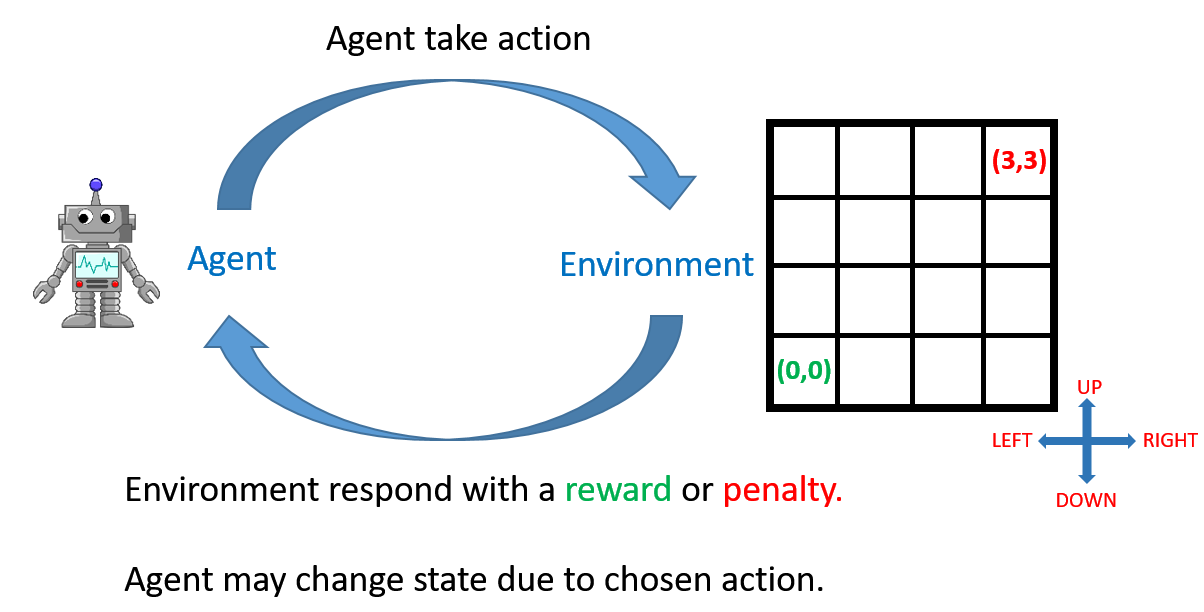

The illustration shows the grid world environment, in which an agent (i.e. a robot) starts at the initial location (0,0) and tries to reach the target location (3,3), one step at a time.

An Agent-Environment interaction

In the 4x4 grid world above, a robot can randomly select any action from the set {Left, Up, Right, Down} at each step. The agent gets a reward of -1 each time it moves from one state to another after taking an action. This negative reward (i.e. penalty) pushes the agent to complete the journey and reach the terminal state in the minimum number of steps.
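As a rough sketch, the action set and the per-step reward can be written down as plain data. The names `ACTIONS`, `STEP_REWARD`, and `random_action` are hypothetical, and so is the coordinate convention: a state is assumed to be `(x, y)` with `x` as the column and `y` growing downward, which the text does not specify for Up and Down.

```python
import random

# Assumed convention: state = (x, y), x is the column, y grows downward.
# Each action maps to a (dx, dy) displacement on the grid.
ACTIONS = {
    "Left": (-1, 0),
    "Up": (0, -1),
    "Right": (1, 0),
    "Down": (0, 1),
}
STEP_REWARD = -1  # reward for an ordinary move from one state to another


def random_action(rng: random.Random) -> str:
    """Pick one of the four actions uniformly at random."""
    return rng.choice(list(ACTIONS))


rng = random.Random(0)  # seeded only to make the example repeatable
x, y = 1, 1             # an interior cell, so any single move stays on the grid
action = random_action(rng)
dx, dy = ACTIONS[action]
next_state = (x + dx, y + dy)
print(action, next_state, STEP_REWARD)
```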

If the agent tries to move off the grid, it gets a penalty of -2. For example, if the agent takes the LEFT action from any of the states (0, 0), (0, 1), (0, 2), (0, 3), it receives a penalty of -2.

When the agent moves from either of the states (2, 3) or (3, 2) to the terminal state (3, 3), it gets a reward of 0.
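Putting the reward rules together, one possible sketch of the environment looks like this. The class name `GridWorld` and its methods are hypothetical. Two further assumptions are made: states are `(x, y)` with `y` growing downward, and an off-grid attempt leaves the agent where it is (the text gives the -2 penalty but does not say where the agent ends up).

```python
import random
from typing import Tuple

State = Tuple[int, int]


class GridWorld:
    """Sketch of the 4x4 grid world; names and conventions are assumptions."""

    ACTIONS = {"Left": (-1, 0), "Up": (0, -1), "Right": (1, 0), "Down": (0, 1)}

    def __init__(self, size: int = 4) -> None:
        self.size = size
        self.start: State = (0, 0)
        self.terminal: State = (size - 1, size - 1)
        self.state: State = self.start

    def reset(self) -> State:
        """Return the agent to the initial location."""
        self.state = self.start
        return self.state

    def step(self, action: str) -> Tuple[State, int, bool]:
        """Apply one action and return (next_state, reward, done)."""
        dx, dy = self.ACTIONS[action]
        x, y = self.state[0] + dx, self.state[1] + dy
        if not (0 <= x < self.size and 0 <= y < self.size):
            # Off-grid attempt: penalty of -2. Staying in place is an assumption.
            return self.state, -2, False
        self.state = (x, y)
        if self.state == self.terminal:
            return self.state, 0, True   # reaching the terminal state
        return self.state, -1, False     # ordinary move


# Usage: a purely random agent, capped so the loop always ends.
env = GridWorld()
rng = random.Random(0)
state, total, done = env.reset(), 0, False
for _ in range(10_000):
    state, reward, done = env.step(rng.choice(list(GridWorld.ACTIONS)))
    total += reward
    if done:
        break
print(state, total, done)
```

A random agent usually collects a large negative total here, which is exactly the signal a training algorithm would use to find a shorter path.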
