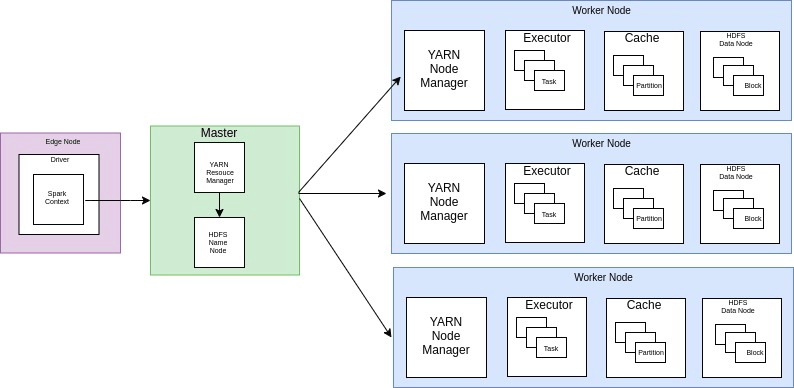

In our current scenario, we have a 4-node cluster in which one node is the master (HDFS NameNode and YARN ResourceManager) and the other three are slave nodes (HDFS DataNode and YARN NodeManager).

Kerberos is implemented on this cluster to make it more secure.

The Kerberos services are already running on a separate server, which is treated as the KDC server.

Every node needs the Kerberos client configuration, which I have already covered in my previous blog. Please go through the Kerberos authentication links below for more info.

To start the installation of Hadoop HDFS and YARN, follow the steps below:

Prerequisites:

- All nodes should have the IP addresses listed below:

  - Master: 10.0.0.70

  - Slave 1: 10.0.0.105

  - Slave 2: 10.0.0.85

  - Slave 3: 10.0.0.122

- Passwordless SSH should be set up from the master node to all the slave nodes in order to avoid password prompts.

- Each node should be able to communicate with every other node.

- OpenJDK 1.8 should be installed on all four nodes.

- The jsvc package should be installed on all four nodes.

- A hosts file entry is needed on every node so that the nodes can reach each other by name, acting as a local DNS.

- I am assuming the Kerberos packages are already installed on all four nodes and the configuration has also been done.
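
The prerequisites above can be checked quickly before going further. This is only a minimal sketch: `require_cmd` and `preflight` are hypothetical helper names, and it assumes the Kerberos client package provides the `kinit` command (as the MIT Kerberos client does). It only checks that the tools are on the PATH, not that they are configured correctly.

```shell
#!/usr/bin/env bash
# Hypothetical preflight helpers; the function names are illustrative only.

# Print "ok: <cmd>" and return 0 if the command is on PATH,
# otherwise print "missing: <cmd>" and return 1.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "ok: $1"
  else
    echo "missing: $1"
    return 1
  fi
}

# Check the tools this post relies on and report how many are missing.
# Assumption: kinit is the Kerberos client binary installed on the nodes.
preflight() {
  local missing=0 cmd
  for cmd in java jsvc ssh kinit; do
    require_cmd "$cmd" || missing=$((missing + 1))
  done
  echo "missing tools: $missing"
  [ "$missing" -eq 0 ]
}
```

After sourcing the snippet, run `preflight` on each of the four nodes. A non-zero exit status means at least one tool still has to be installed on that node.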

Let's begin the configuration:

Add the master node's SSH public key to the ~/.ssh/authorized_keys file on each of the worker nodes.

After adding the key, the master node will be able to log in to all of the worker nodes without a password prompt.
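
The key step above could be scripted roughly as follows. This is a sketch, not the exact procedure used here: `append_key_once` is a hypothetical helper, and it assumes the master's public key has already been copied to the worker as a one-line file. It appends the key only if it is not already present and tightens the permissions that sshd normally expects.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of OpenSSH): append a public key to an
# authorized_keys file only if that exact key line is not already there.
append_key_once() {
  local pubkey_file="$1" auth_file="$2" key
  key="$(cat "$pubkey_file")"

  # Make sure the .ssh directory and the authorized_keys file exist.
  mkdir -p "$(dirname "$auth_file")"
  touch "$auth_file"

  # -x: match the whole line, -F: fixed string, -q: quiet.
  if ! grep -qxF -- "$key" "$auth_file"; then
    printf '%s\n' "$key" >> "$auth_file"
  fi

  # sshd typically rejects keys when these permissions are too open.
  chmod 700 "$(dirname "$auth_file")"
  chmod 600 "$auth_file"
}
```

On each worker this would be called as, for example, `append_key_once /tmp/master_id_rsa.pub ~/.ssh/authorized_keys`, where `/tmp/master_id_rsa.pub` is an assumed location for the copied key. Running it twice leaves a single copy of the key in the file.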

Install JDK 1.8 on all four nodes:

sudo apt-get install openjdk-8-jdk -y

Install jsvc on all four nodes as well:

sudo apt-get install jsvc -y

Make the hosts file entries below on all four nodes.

Open the /etc/hosts file:

vim /etc/hosts

Add the entries below for each node, and also add one entry for the Kerberos server (10.0.0.33 EDGE.HADOOP.COM):

10.0.0.70 master

10.0.0.105 worker1

10.0.0.85 worker2

10.0.0.122 worker3

10.0.0.33 EDGE.HADOOP.COM
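
Instead of editing the file by hand on every node, the same entries could be added with a small script. This is a minimal sketch under the assumption that the five entries above are the complete list. `add_host_entry` and `add_cluster_hosts` are hypothetical helper names, and the target file is passed as an argument so the script can be tried on a scratch file first.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; the names are illustrative only.

# Append "ip name" to the hosts file unless that exact pair already exists.
add_host_entry() {
  local ip="$1" name="$2" hosts_file="$3"
  if ! awk -v ip="$ip" -v name="$name" \
       '$1 == ip && $2 == name { found = 1 } END { exit !found }' \
       "$hosts_file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Add the five entries used in this post to the given hosts file.
add_cluster_hosts() {
  local hosts_file="$1" ip name
  while read -r ip name; do
    add_host_entry "$ip" "$name" "$hosts_file"
  done <<'EOF'
10.0.0.70 master
10.0.0.105 worker1
10.0.0.85 worker2
10.0.0.122 worker3
10.0.0.33 EDGE.HADOOP.COM
EOF
}
```

Running `add_cluster_hosts /etc/hosts` as root on each node should leave one line per host, even if it is run more than once. Name resolution can then be confirmed with something like `getent hosts worker1`.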

I am performing all of these operations as the root user.

#devops #cluster #hdfs #spark #yarn