Learn how to upload and access large datasets in a Google Colab Jupyter notebook for training deep-learning models

Google Colab is a free Jupyter notebook environment from Google whose runtime is hosted on virtual machines in the cloud.

With Colab, you need not worry about your computer’s memory capacity or Python package installations. You get free GPU and TPU runtimes, and the notebook comes with machine-learning and deep-learning libraries such as scikit-learn and TensorFlow pre-installed. That being said, every project needs a dataset, and if you are not using TensorFlow’s built-in datasets or Colab’s sample datasets, you will need to follow a few simple steps to access your data.
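Because these libraries come pre-installed, it can be useful to check which versions the current runtime provides before relying on them. The sketch below uses the standard-library `importlib.metadata`; the helper name `installed_versions` is illustrative, and the versions printed will depend on the Colab image at the time you run it.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if it is missing."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            # Not installed in this runtime (e.g. when running outside Colab).
            result[name] = None
    return result


if __name__ == "__main__":
    for pkg, ver in installed_versions(["scikit-learn", "tensorflow"]).items():
        print(f"{pkg}: {ver or 'not installed'}")
```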

Understanding Colab’s file system

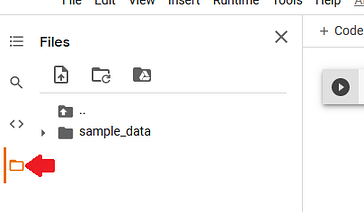

The Colab notebooks you create are saved in your Google Drive folder. However, during runtime (when a notebook is active), it is assigned a Linux-based file system in the cloud, backed by a CPU, GPU, or TPU according to the runtime type you choose. You can view a notebook’s current working directory by clicking the folder (rectangular) icon in the left sidebar of the notebook.

Current working directory on Colab (image by author)
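The working directory shown in the Files pane can also be inspected from a code cell. This minimal sketch uses only the standard library; the helper `describe_cwd` is a hypothetical name, and the `/content` path in the comment is what Colab typically uses, not a guarantee.

```python
import os


def describe_cwd():
    """Return the current working directory and a sorted listing of its entries."""
    cwd = os.getcwd()
    entries = sorted(os.listdir(cwd))
    return cwd, entries


if __name__ == "__main__":
    cwd, entries = describe_cwd()
    print("Working directory:", cwd)  # typically /content on Colab
    print("Contents:", entries)       # e.g. includes 'sample_data' on a fresh runtime
```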

Colab provides a sample_data folder with datasets that you can play around with, or you can upload your own datasets using the upload icon (next to the ‘search’ icon). Note that once the runtime is disconnected, you lose access to the virtual file system, and all uploaded data is deleted. The notebook file itself, however, remains saved in Google Drive.
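As an alternative to the upload icon, Colab exposes `google.colab.files.upload()`, which opens a file picker in the browser and returns a dict mapping each uploaded filename to its contents as bytes. The sketch below wraps it so that a copy of each file is written to a chosen folder; the helper names and the `/tmp` default are assumptions for illustration, and outside Colab the function simply returns an empty dict.

```python
import os


def running_in_colab():
    """Return True if the google.colab package is importable in this runtime."""
    try:
        import google.colab  # noqa: F401
    except ImportError:
        return False
    return True


def upload_files(target_dir="/tmp"):
    """Prompt for files with Colab's uploader and write a copy of each to target_dir.

    Returns {filename: size_in_bytes}; returns {} when not running on Colab.
    """
    if not running_in_colab():
        return {}
    from google.colab import files

    uploaded = files.upload()  # dict of {filename: file contents as bytes}
    os.makedirs(target_dir, exist_ok=True)
    sizes = {}
    for name, data in uploaded.items():
        with open(os.path.join(target_dir, name), "wb") as out:
            out.write(data)
        sizes[name] = len(data)
    return sizes
```

Since uploaded files live on the virtual machine, they disappear with it when the runtime disconnects, just like files added through the icon.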

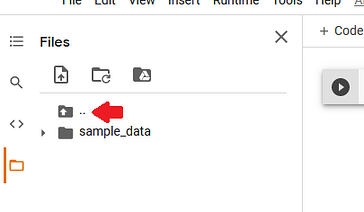

The folder icon with two dots above the sample_data folder moves up to the parent directory and reveals the other core directories in Colab’s file system, such as /root, /home, and /tmp.

File system on Colab (image by author)
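The top-level directories can also be checked programmatically. This is a small sketch with a hypothetical helper, `core_dirs`, that reports whether each named folder exists under a given root; on a Colab runtime all three are expected to exist.

```python
from pathlib import Path


def core_dirs(root="/", names=("root", "home", "tmp")):
    """Map each directory name to True if root/name exists and is a directory."""
    base = Path(root)
    return {name: (base / name).is_dir() for name in names}


if __name__ == "__main__":
    print(sorted(entry.name for entry in Path("/").iterdir()))  # top-level entries
    print(core_dirs())  # on Colab, expected: all three True
```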

You can view your allocated disk space in the bottom-left corner of the Files pane.

Colab’s disk storage (image by author)
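If you prefer to check the disk from code, the standard library’s `shutil.disk_usage` reports the total, used, and free bytes of the filesystem that holds a path. The helper below is a sketch that converts these to GiB; its numbers may not match the Files pane exactly, since the pane may report a different mount or round differently.

```python
import shutil

GIB = 1024 ** 3


def disk_usage_gib(path="/"):
    """Return total, used and free space (in GiB, 2 decimals) for the filesystem holding path."""
    usage = shutil.disk_usage(path)
    return {
        "total": round(usage.total / GIB, 2),
        "used": round(usage.used / GIB, 2),
        "free": round(usage.free / GIB, 2),
    }


if __name__ == "__main__":
    print(disk_usage_gib("/"))
```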

In this tutorial, we will explore ways to upload image datasets into Colab’s file system from three sources so that the notebook can access them for modeling. I chose to save the uploaded files in /tmp, but you can also save them in the current working directory. Kaggle’s Dogs vs. Cats dataset is used for demonstration.
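A minimal sketch of that choice, assuming you want one folder per dataset: `tempfile.gettempdir()` resolves to `/tmp` on a Linux runtime like Colab’s (unless the `TMPDIR` environment variable overrides it), while `os.getcwd()` gives the current working directory. The helper name `make_data_dir` and the folder name in the usage note are hypothetical.

```python
import os
import tempfile


def make_data_dir(name, use_tmp=True):
    """Create (if missing) and return a dataset folder under the temp dir or the cwd."""
    base = tempfile.gettempdir() if use_tmp else os.getcwd()
    path = os.path.join(base, name)
    os.makedirs(path, exist_ok=True)  # safe to call again when the cell is re-run
    return path
```

For example, `make_data_dir("cats_and_dogs")` would create and return `/tmp/cats_and_dogs` on a typical Colab runtime.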

1. Upload data from a website such as GitHub

To download data from a website directly into Colab, you need a URL (web address) that points directly to the zip file.
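Once you have such a URL, the download and extraction can be done from a code cell. The sketch below assumes the link serves a `.zip` archive and uses only `urllib.request` and `zipfile` from the standard library; in a Colab cell, the shell commands `!wget` and `!unzip` are a common alternative. The function name and the `/tmp` default are illustrative.

```python
import os
import urllib.parse
import urllib.request
import zipfile


def download_and_extract(url, dest_dir="/tmp"):
    """Download the zip archive at url into dest_dir, extract it there, and
    return the sorted list of member names in the archive."""
    os.makedirs(dest_dir, exist_ok=True)
    # Name the local file after the last path segment of the URL (query string ignored).
    filename = os.path.basename(urllib.parse.urlparse(url).path) or "download.zip"
    zip_path = os.path.join(dest_dir, filename)
    urllib.request.urlretrieve(url, zip_path)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest_dir)
        return sorted(archive.namelist())
```

For example, `download_and_extract("https://example.com/dataset.zip")` would save and unpack the archive in /tmp; the URL here is a placeholder, not a real dataset link.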

- Download from GitHub

GitHub is a platform where developers host their code and collaborate on projects. Projects are stored in repositories, and by default a repository is public, meaning anyone can view it or download its contents to their local machine and start working on it.

The first step is to find a repository that contains the dataset. Here is a GitHub repo by laxmimerit containing 25,000 Cats vs. Dogs training and test images, organized into their respective folders.
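When a repository holds the data you need, GitHub serves a zip archive of any branch at a predictable address, so you do not have to clone the whole history. The sketch below only builds that URL; the owner, repository, and branch names are placeholders (older repositories often use `master` rather than `main` as the default branch), and the resulting link can be fetched with `urllib.request.urlretrieve` or `!wget`. Running `!git clone https://github.com/<owner>/<repo>.git` in a cell is another option.

```python
def github_zip_url(owner, repo, branch="main"):
    """Return the URL of the zip archive GitHub serves for one branch of a repository."""
    return f"https://github.com/{owner}/{repo}/archive/refs/heads/{branch}.zip"


if __name__ == "__main__":
    # Placeholder names: substitute the repository that hosts your dataset.
    print(github_zip_url("OWNER", "REPO", branch="master"))
```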

To use this process, you must have a free GitHub account and already be logged in.
