Understanding the basics of Federated Learning and its implementation using PyTorch

Many thanks to renowned data scientist Mr. Akshay Kulkarni for his inspiration and guidance on this tutorial.

In a world revolutionized by data and digitalization, more and more personal information is shared and stored, which has opened up a new field: preserving data privacy. But what is data privacy, and why does it need to be preserved?

Data privacy defines how a particular piece of information should be handled, and who is authorized to access it, based on its relative importance. With the rise of AI (machine learning and deep learning), a lot of personal information can be extracted from trained models, which can cause irreparable damage to the people whose personal data has been exposed. Hence the need to preserve the privacy of this data while building machine learning models.

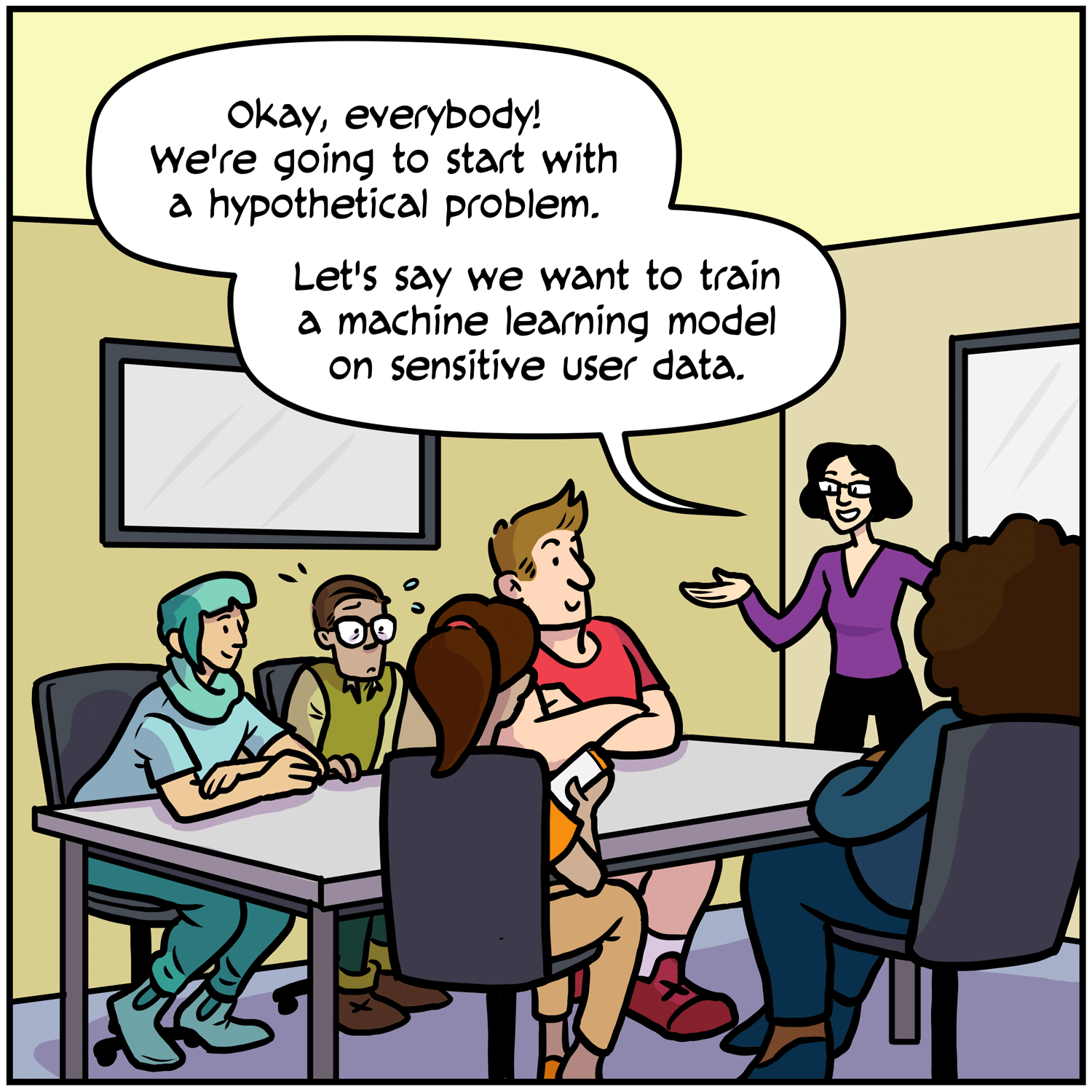

In this series of tutorials, the main concern is preserving data privacy in deep learning models. You will explore different methods such as Federated Learning, Differential Privacy, and Homomorphic Encryption.

In this tutorial, you will discover how to preserve data privacy by training machine learning models with federated learning. After completing this tutorial, you will know:

- The basics of Federated Learning

- Dividing data amongst the clients (for federated learning)

- The model architecture

- Aggregation of the decentralized weights into global weights

Introduction

Federated Learning, also known as collaborative learning, is a machine learning technique in which training takes place across multiple decentralized edge devices (clients) or servers, each using its own local data, without sharing that data with other clients, thus keeping it private. The goal is to train a machine learning model, for example a deep neural network, on multiple clients that hold local datasets, without explicitly exchanging data samples.
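To make the idea of clients holding private local datasets concrete, here is a minimal sketch in plain Python (no PyTorch, so it stays self-contained) that splits one dataset into disjoint shards, one per simulated client. The function name `split_among_clients` and the IID (shuffled, evenly dealt) split are illustrative assumptions; on real devices, each client collects its own data, which is often non-IID.

```python
import random


def split_among_clients(samples, num_clients, seed=0):
    """Shuffle the samples and deal them into num_clients disjoint local datasets (IID split).

    Hypothetical helper for illustration only.
    """
    if num_clients <= 0:
        raise ValueError("num_clients must be positive")
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    # Round-robin assignment keeps shard sizes within one sample of each other.
    return [shuffled[i::num_clients] for i in range(num_clients)]


# Usage: 10 samples dealt to 3 clients; each client keeps only its own shard.
data = list(range(10))
shards = split_among_clients(data, num_clients=3)
for client_id, shard in enumerate(shards):
    print(f"client {client_id}: {len(shard)} samples")
# client 0: 4 samples, client 1: 3 samples, client 2: 3 samples
```

In a simulation, each shard stands in for the data that never leaves one client's device; only model weights are exchanged later.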

This training happens in parallel on many devices, often hundreds or thousands of them. After the same model has been trained on different devices with different data, the resulting weights (a summary of what each client learned) are sent to a central (global) server, where they are aggregated. Different aggregation techniques can be used to get the most out of the weights learned on the clients' devices. After aggregation, the global weights are sent back to the clients, and training continues on each client's device. This whole cycle is called a communication round, and federated learning runs many communication rounds to keep improving the model's accuracy.
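The communication round described above can be sketched in plain Python. This is a minimal illustration under stated assumptions: the "model" is a toy linear regression y = w * x + b stored as a `(w, b)` tuple, local training is plain gradient descent, and aggregation uses Federated Averaging (FedAvg), one common technique that averages client weights in proportion to each client's number of samples. All function names here are hypothetical, and the sketch uses plain Python instead of PyTorch so it stays self-contained.

```python
import random


def local_train(weights, data, lr=0.05, epochs=5):
    """Run a few epochs of full-batch gradient descent on one client's private data.

    weights is a (w, b) pair for the toy model y = w * x + b; the loss is mean squared error.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def fed_avg(client_weights, client_sizes):
    """Aggregate client weights into global weights, weighting each client by its sample count."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)


def run_federated_training(client_datasets, rounds=50):
    """Repeat communication rounds: broadcast, train locally, aggregate."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        # 1. The server sends the current global weights to every client.
        # 2. Each client trains locally; only the resulting weights leave the device.
        updates = [local_train(global_weights, data) for data in client_datasets]
        # 3. The server aggregates the client weights into new global weights.
        global_weights = fed_avg(updates, [len(d) for d in client_datasets])
    return global_weights


# Usage: three clients whose private data all follow y = 2x + 1 (plus small noise).
rng = random.Random(0)
clients = []
for _ in range(3):
    xs = [rng.uniform(-1, 1) for _ in range(20)]
    clients.append([(x, 2 * x + 1 + rng.gauss(0, 0.05)) for x in xs])

w, b = run_federated_training(clients)
print(f"learned w={w:.2f}, b={b:.2f}")  # should be close to w=2, b=1
```

Weighting by sample count means clients with more data pull the global weights further; a plain unweighted mean would count every client equally regardless of dataset size.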
