Iterative algorithms are widely used in machine learning and in graph processing, for example connected components and PageRank. They grow in complexity with the number of iterations and with the size of the data at each iteration, and making them fault-tolerant at every iteration is tricky. In this article, I describe a few considerations in Spark for dealing with these challenges. We have used Spark to implement several iterative algorithms, such as building connected components and traversing large connected components. What follows comes from my experience at Walmart Labs, building connected components over 60 billion nodes of customer identities.

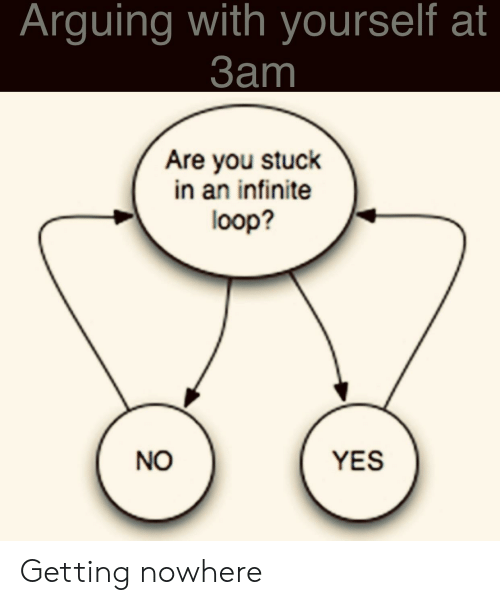

The number of iterations is usually not known in advance, so always define a termination condition 😀
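The sketch below is a minimal illustration of that rule in plain Python, not Spark code. A hypothetical `iterate_until` helper applies one step at a time and stops at whichever comes first: a convergence check or a hard iteration cap. In a Spark job, `step` would presumably be a DataFrame or RDD transformation and `is_done` an action such as comparing row counts. That mapping is an assumption, not something taken from the original setup.

```python
from typing import Callable, Tuple, TypeVar

S = TypeVar("S")


def iterate_until(step: Callable[[S], S],
                  state: S,
                  is_done: Callable[[S, S], bool],
                  max_iterations: int = 100) -> Tuple[S, int]:
    """Apply `step` repeatedly until `is_done(previous, current)` is true or
    `max_iterations` is reached. Returns (final_state, iterations_run).

    Hypothetical helper: in Spark, `step` might be a DataFrame transformation
    and `is_done` a count-based check (assumption for illustration only).
    """
    for i in range(1, max_iterations + 1):
        new_state = step(state)
        if is_done(state, new_state):  # convergence: the data stopped changing
            return new_state, i
        state = new_state
    # Safety net: stop even if convergence was never detected.
    return state, max_iterations


# Converges: 100 -> 50 -> 25 -> 12 -> 6 -> 3 -> 1 -> 0 -> 0 (no change on step 8).
print(iterate_until(lambda x: x // 2, 100, lambda prev, cur: cur == prev))
# Never converges, so the iteration cap ends the loop.
print(iterate_until(lambda x: x + 1, 0, lambda prev, cur: False, max_iterations=5))
```

Keeping the cap separate from the convergence test means a bug in the convergence logic costs, at worst, `max_iterations` rounds instead of a job that never ends.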

Types of iterative algorithms

**Converging data:** Here the amount of data decreases with every iteration: the first iteration starts with a huge dataset, and the data shrinks as the iterations progress. The main challenge is handling the huge dataset in the first few iterations; once it has shrunk significantly, the remaining iterations are easy to handle until the end condition is met.
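Connected components is a typical converging case. The sketch below uses min-label propagation, a common textbook approach that is not necessarily the exact algorithm used at Walmart Labs. Each node starts with its own id as its label, nodes whose label changed in the last round offer their label to their neighbours, and only nodes that improve stay "active". The function name and the `active_sizes` bookkeeping are hypothetical; in Spark, each round would presumably be a join of the active nodes with the edge list followed by a min aggregation.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def connected_components(edges: Iterable[Tuple[int, int]]):
    """Min-label propagation over an undirected edge list.

    Returns (labels, active_sizes): labels maps node -> smallest node id in its
    component; active_sizes[i] is how many nodes changed label in round i + 1.
    """
    neighbors: Dict[int, List[int]] = defaultdict(list)
    for a, b in edges:
        neighbors[a].append(b)
        neighbors[b].append(a)

    labels = {n: n for n in neighbors}  # every node starts as its own component
    active = set(neighbors)             # nodes whose label may still spread
    active_sizes: List[int] = []

    while active:  # termination condition: no label changed in the last round
        # Message phase: each active node offers its label to its neighbours.
        proposals: Dict[int, int] = {}
        for node in active:
            for nb in neighbors[node]:
                best = proposals.get(nb, labels[nb])
                if labels[node] < best:
                    proposals[nb] = labels[node]
        # Update phase: apply all improvements at once (synchronous rounds).
        labels.update(proposals)
        active = set(proposals)  # only nodes that changed stay active
        active_sizes.append(len(active))

    return labels, active_sizes


labels, sizes = connected_components([(1, 2), (2, 3), (3, 4), (4, 5), (10, 11)])
print(labels)  # {1: 1, 2: 1, 3: 1, 4: 1, 5: 1, 10: 10, 11: 10}
print(sizes)   # [5, 3, 2, 1, 0]: the working set shrinks every round
```

The shrinking `sizes` list is the "converging data" pattern: the first rounds touch almost every node, and the later rounds only touch a handful.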

**Diverging data:** The amount of data increases at every iteration, and sometimes it can explode fast enough to make further progress impossible. To make these algorithms work, we need constraints such as a limit on the number of iterations, on the starting data size, or on the available compute power.
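A traversal that expands outward from a set of seed nodes is a typical diverging case, because the frontier can grow with every hop. The plain-Python sketch below applies two of the constraints mentioned above: an iteration cap and a cap on how much data a single iteration may produce. The names `bounded_expansion` and `max_frontier` are hypothetical, and `max_frontier` is only a stand-in for whatever your cluster can actually hold. In Spark, checking the frontier size would likely mean calling `count()` on the frontier, which is an action and launches a job, so that check has a real cost per iteration.

```python
from typing import Dict, List, Set, Tuple


def bounded_expansion(graph: Dict[int, List[int]],
                      seeds: Set[int],
                      max_iterations: int,
                      max_frontier: int) -> Tuple[Set[int], int, str]:
    """Breadth-first expansion from `seeds` with explicit safety limits.

    Returns (visited, iterations, reason), where reason is "converged",
    "frontier limit" or "iteration limit".
    """
    visited = set(seeds)
    frontier = set(seeds)
    for i in range(1, max_iterations + 1):
        # Nodes reachable in one more hop that we have not seen yet.
        next_frontier = {nb for node in frontier for nb in graph.get(node, [])} - visited
        if not next_frontier:
            return visited, i, "converged"
        if len(next_frontier) > max_frontier:
            # Data is exploding: stop before materialising the oversized frontier.
            return visited, i, "frontier limit"
        visited |= next_frontier
        frontier = next_frontier
    return visited, max_iterations, "iteration limit"


# Binary tree: node n links to 2n and 2n + 1, so the frontier doubles each hop.
tree = {n: [2 * n, 2 * n + 1] for n in range(1, 64)}
visited, hops, reason = bounded_expansion(tree, {1}, max_iterations=100, max_frontier=10)
print(len(visited), hops, reason)  # 15 4 frontier limit
```

Returning the reason for stopping makes it easy to tell a genuinely finished traversal apart from one that was cut off by a limit.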
