Congratulations! You’ve just been hired as Netflix’s new Chief Technology Officer. Your chief task? Increasing engagement and viewing time among your 180 million subscribers. Your team thinks that you can achieve this by personalizing each user’s home screen. This raises a natural question: given a user’s past viewing preferences, which shows should you highlight for them to watch next?

The crux of this question lies in designing a _recommender system_. Designing such systems is a million-dollar industry (seriously). Whether it is Amazon recommending which product you should buy next, or Tinder showing you the most compatible singles in your area, modern companies dedicate significant amounts of time, money, and energy to creating ever more precise recommender systems.

In this article we will discuss how to create a basic recommender system using ideas from Natural Language Processing (NLP). This is not an exhaustive discussion of recommender systems in general, and the algorithm we describe is not necessarily the best way of creating such a system. But it will be fun anyway!

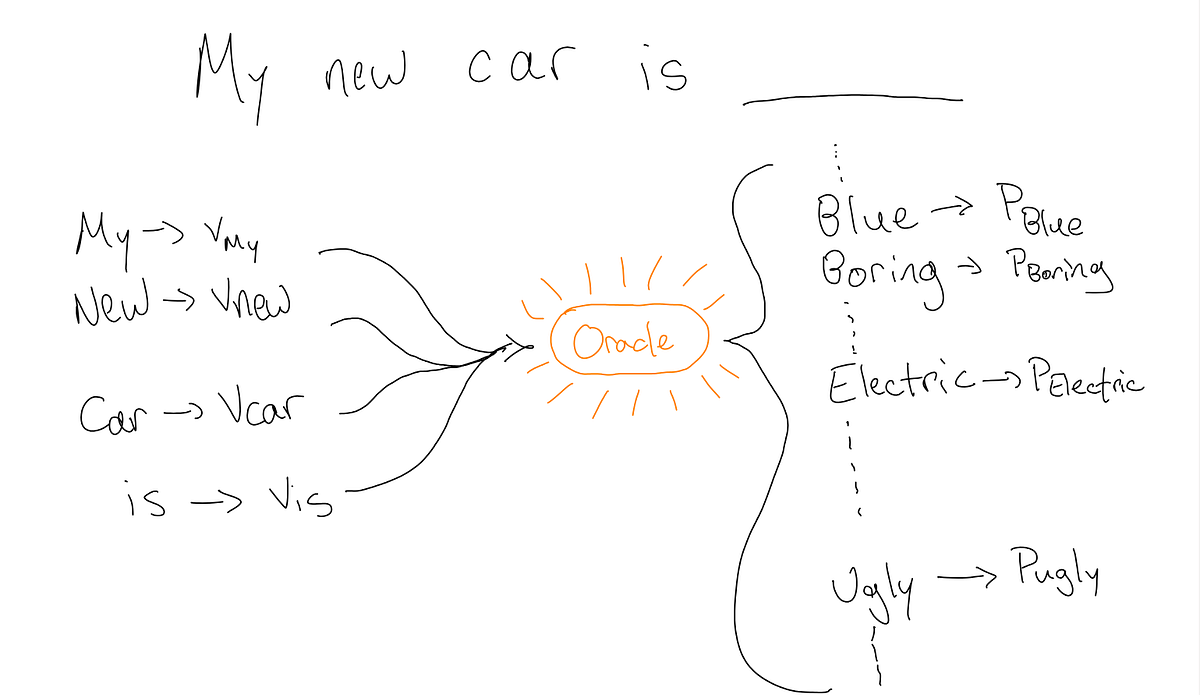

First things first: what is NLP? Natural Language Processing is a subfield of linguistics and computer science that aims to break down human language into information a computer can understand. It’s how Google helps you write emails faster with Smart Compose, or how some financial companies use social media sentiment to inform their trading algorithms. A key idea from NLP is word2vec: a method for embedding words into a high-dimensional vector space in such a way that “similar” words map to “similar” vectors.

A first pass at doing this would be the “one-hot” embedding. Take the roughly 200,000 words in the English language and order them alphabetically. The one-hot embedding maps the _i_th word in the dictionary to the vector (0,…,0,1,0,…,0), which has a single 1 in the _i_th entry and 199,999 0’s everywhere else.
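To make the construction concrete, here is a minimal sketch in Python with NumPy. It assumes a tiny, hypothetical five-word vocabulary standing in for the full English dictionary; the helper names `build_vocab` and `one_hot` are illustrative, not from any library.

```python
import numpy as np

def build_vocab(words):
    """Sort the words alphabetically and map each word to its index i."""
    return {word: i for i, word in enumerate(sorted(set(words)))}

def one_hot(word, vocab):
    """Return a vector with a single 1 in the word's entry and 0 everywhere else."""
    vec = np.zeros(len(vocab))
    vec[vocab[word]] = 1.0
    return vec

# Hypothetical toy vocabulary in place of the ~200,000-word dictionary.
vocab = build_vocab(["happy", "sad", "joyful", "movie", "show"])
print(vocab)                     # {'happy': 0, 'joyful': 1, 'movie': 2, 'sad': 3, 'show': 4}
print(one_hot("joyful", vocab))  # [0. 1. 0. 0. 0.]
```

With the real dictionary, each of these vectors would simply have about 200,000 entries instead of five.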

Unfortunately, this embedding is too naive. First, it embeds the English language into a 200,000-dimensional vector space, which makes computations very slow. And remember: at the end of the day, we need to compute with this information for it to be useful! Second, it does not preserve similarity in any sense. In NLP we would hope that two synonyms, such as “happy” and “joyful,” map to similar vectors; just as importantly, antonyms such as “happy” and “sad” should map to dissimilar vectors. We usually measure the similarity of two vectors by their cosine similarity: their dot product divided by the product of their lengths. But any two distinct words in the one-hot embedding map to orthogonal vectors, whose dot product (and hence cosine similarity) is zero!
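Both problems are easy to see numerically. The sketch below assumes a 200,000-word vocabulary and assigns hypothetical dictionary positions to “happy,” “joyful,” and “sad” (the exact indices do not matter). It computes cosine similarity as the dot product divided by the product of the vectors’ lengths, and also reports how much memory a single one-hot vector takes as a NumPy `float64` array.

```python
import numpy as np

VOCAB_SIZE = 200_000

# Hypothetical alphabetical positions of three words in the dictionary.
HAPPY, JOYFUL, SAD = 78_000, 95_000, 150_000

def one_hot(index, size=VOCAB_SIZE):
    """One-hot vector: a 1 at `index`, 0 everywhere else."""
    vec = np.zeros(size)
    vec[index] = 1.0
    return vec

def cosine_similarity(u, v):
    """Dot product of u and v divided by the product of their Euclidean norms."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

happy, joyful, sad = one_hot(HAPPY), one_hot(JOYFUL), one_hot(SAD)

print(cosine_similarity(happy, happy))   # 1.0  (a word is identical to itself)
print(cosine_similarity(happy, joyful))  # 0.0  (synonyms look unrelated)
print(cosine_similarity(happy, sad))     # 0.0  (antonyms look exactly as unrelated)
print(happy.nbytes)                      # 1600000 bytes for a single word
```

The synonym pair and the antonym pair score identically, which is exactly the failure described above: the one-hot embedding carries no information about meaning, only about alphabetical position.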
