Docker overview

Docker is an open platform for developing, shipping, and running applications. Docker enables you to separate your applications from your infrastructure so you can deliver software quickly. With Docker, you can manage your infrastructure in the same ways you manage your applications. By taking advantage of Docker’s methodologies for shipping, testing, and deploying code quickly, you can significantly reduce the delay between writing code and running it in production.

The Docker platform

Docker provides the ability to package and run an application in a loosely isolated environment called a container. The isolation and security allow you to run many containers simultaneously on a given host. Containers are lightweight because they don’t need the extra load of a hypervisor, but run directly within the host machine’s kernel. This means you can run more containers on a given hardware combination than if you were using virtual machines. You can even run Docker containers within host machines that are actually virtual machines!

Docker provides tooling and a platform to manage the lifecycle of your containers:

- Develop your application and its supporting components using containers.

- The container becomes the unit for distributing and testing your application.

- When you’re ready, deploy your application into your production environment, as a container or an orchestrated service. This works the same whether your production environment is a local data center, a cloud provider, or a hybrid of the two.

Docker Engine

Docker Engine is a client-server application with these major components:

- A server, which is a type of long-running program called a daemon process (the dockerd command).

- A REST API, which specifies interfaces that programs can use to talk to the daemon and instruct it what to do.

- A command line interface (CLI) client (the docker command).

The CLI uses the Docker REST API to control or interact with the Docker daemon through scripting or direct CLI commands. Many other Docker applications use the underlying API and CLI.

The daemon creates and manages Docker objects, such as images, containers, networks, and volumes.

Note: Docker is licensed under the open source Apache 2.0 license.

What can I use Docker for?

Fast, consistent delivery of your applications

Docker streamlines the development lifecycle by allowing developers to work in standardized environments using local containers which provide your applications and services. Containers are great for continuous integration and continuous delivery (CI/CD) workflows.

Consider the following example scenario:

- Your developers write code locally and share their work with their colleagues using Docker containers.

- They use Docker to push their applications into a test environment and execute automated and manual tests.

- When developers find bugs, they can fix them in the development environment and redeploy them to the test environment for testing and validation.

- When testing is complete, getting the fix to the customer is as simple as pushing the updated image to the production environment.

Responsive deployment and scaling

Docker’s container-based platform allows for highly portable workloads. Docker containers can run on a developer’s local laptop, on physical or virtual machines in a data center, on cloud providers, or in a mixture of environments.

Docker’s portability and lightweight nature also make it easy to dynamically manage workloads, scaling up or tearing down applications and services as business needs dictate, in near real time.

Running more workloads on the same hardware

Docker is lightweight and fast. It provides a viable, cost-effective alternative to hypervisor-based virtual machines, so you can use more of your compute capacity to achieve your business goals. Docker is perfect for high density environments and for small and medium deployments where you need to do more with fewer resources.

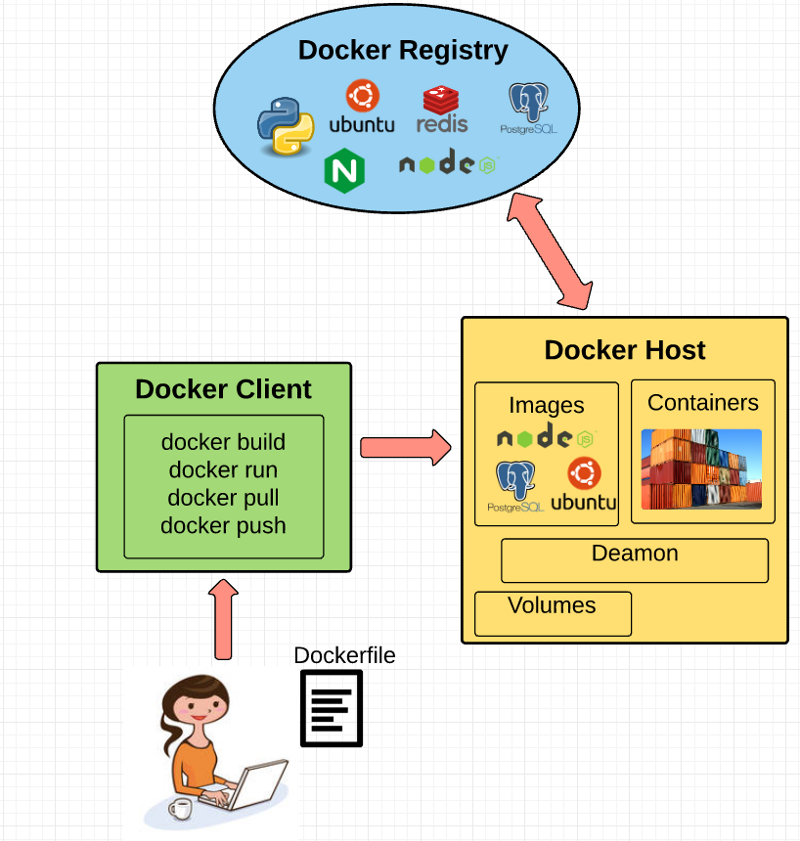

Docker architecture

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing your Docker containers. The Docker client and daemon can run on the same system, or you can connect a Docker client to a remote Docker daemon. The Docker client and daemon communicate using a REST API, over UNIX sockets or a network interface.
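To make the client/daemon split concrete, here is a minimal Python sketch of a client that speaks HTTP to the daemon over its UNIX socket, using only the standard library. It assumes the daemon listens on the default Linux socket path /var/run/docker.sock and that your user is allowed to open it; UnixHTTPConnection and docker_api_get are hypothetical helper names, while /version and /containers/json are endpoints of the Docker Engine API.

```python
import http.client
import json
import socket

# Assumed default location of the daemon's UNIX socket on Linux hosts.
DEFAULT_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that sends its requests over a UNIX domain socket."""

    def __init__(self, socket_path: str = DEFAULT_SOCKET, timeout: float = 5.0):
        # "localhost" only fills the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        # Replace the usual TCP connect with a connect to the UNIX socket.
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_api_get(path: str, socket_path: str = DEFAULT_SOCKET):
    """Send a GET request to the Docker Engine API and decode the JSON reply."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"{path}: HTTP {response.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

With a running daemon, docker_api_get("/version")["Version"] should return the daemon's version string, and docker_api_get("/containers/json") lists running containers, roughly what docker version and docker ps ask for. A production client would also pin an API version prefix in the path and handle streaming responses.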

The Docker daemon

The Docker daemon (dockerd) listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes. A daemon can also communicate with other daemons to manage Docker services.

The Docker client

The Docker client (docker) is the primary way that many Docker users interact with Docker. When you use commands such as docker run, the client sends these commands to dockerd, which carries them out. The docker command uses the Docker API. The Docker client can communicate with more than one daemon.

Docker registries

A Docker registry stores Docker images. Docker Hub is a public registry that anyone can use, and Docker is configured to look for images on Docker Hub by default. You can even run your own private registry. If you use Docker Datacenter (DDC), it includes Docker Trusted Registry (DTR).

When you use the docker pull or docker run commands, the required images are pulled from your configured registry. When you use the docker push command, your image is pushed to your configured registry.

Docker objects

When you use Docker, you are creating and using images, containers, networks, volumes, plugins, and other objects. This section is a brief overview of some of those objects.

Images

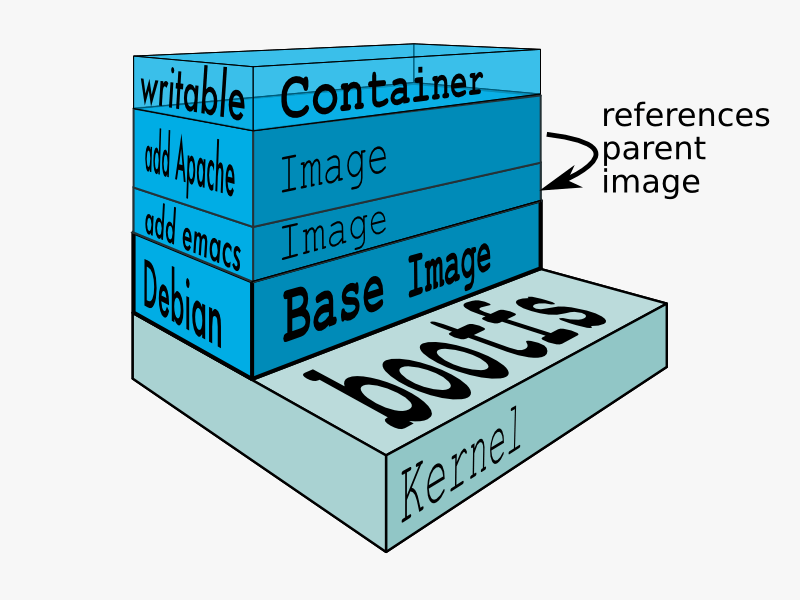

An image is a read-only template with instructions for creating a Docker container. Often, an image is based on another image, with some additional customization. For example, you may build an image which is based on the ubuntu image, but installs the Apache web server and your application, as well as the configuration details needed to make your application run.

You might create your own images or you might only use those created by others and published in a registry. To build your own image, you create a Dockerfile with a simple syntax for defining the steps needed to create the image and run it. Each instruction in a Dockerfile creates a layer in the image. When you change the Dockerfile and rebuild the image, only the layers that have changed, and the layers built on top of them, are rebuilt. This is part of what makes images so lightweight, small, and fast, when compared to other virtualization technologies.
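Why a change also rebuilds the layers after it can be seen in a toy model of the build cache, written in Python. It is not Docker's actual cache algorithm (real builds also compare things like the checksums of files added by COPY and ADD); here each layer's key is simply a hash of its parent's key plus its instruction text, which is enough to show that editing one instruction invalidates that step and every step after it. The function names are hypothetical.

```python
import hashlib
from typing import Dict, List, Tuple


def layer_keys(instructions: List[str]) -> List[str]:
    """Derive a cache key per instruction, chained to the key of the layer below."""
    keys = []
    parent = ""
    for instruction in instructions:
        key = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()[:12]
        keys.append(key)
        parent = key  # the next layer's key depends on this one
    return keys


def build(instructions: List[str], cache: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Return (reused, rebuilt) instructions, filling the cache as steps are built."""
    reused, rebuilt = [], []
    for instruction, key in zip(instructions, layer_keys(instructions)):
        if key in cache:
            reused.append(instruction)
        else:
            cache[key] = instruction  # stand-in for actually executing the step
            rebuilt.append(instruction)
    return reused, rebuilt
```

This is why Dockerfiles usually put rarely changing steps, such as installing packages, before frequently changing ones, such as copying in application source.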

Containers

A container is a runnable instance of an image. You can create, start, stop, move, or delete a container using the Docker API or CLI. You can connect a container to one or more networks, attach storage to it, or even create a new image based on its current state.

By default, a container is relatively well isolated from other containers and its host machine. You can control how isolated a container’s network, storage, or other underlying subsystems are from other containers or from the host machine.

A container is defined by its image as well as any configuration options you provide to it when you create or start it. When a container is removed, any changes to its state that are not stored in persistent storage disappear.

Example docker run command

The following command runs an ubuntu container, attaches interactively to your local command-line session, and runs /bin/bash.

$ docker run -i -t ubuntu /bin/bash

When you run this command, the following happens (assuming you are using the default registry configuration):

- If you do not have the ubuntu image locally, Docker pulls it from your configured registry, as though you had run docker pull ubuntu manually.

- Docker creates a new container, as though you had run a docker container create command manually.

- Docker allocates a read-write filesystem to the container, as its final layer. This allows a running container to create or modify files and directories in its local filesystem.

- Docker creates a network interface to connect the container to the default network, since you did not specify any networking options. This includes assigning an IP address to the container. By default, containers can connect to external networks using the host machine’s network connection.

- Docker starts the container and executes /bin/bash. Because the container is running interactively and attached to your terminal (due to the -i and -t flags), you can provide input using your keyboard while the output is logged to your terminal.

- When you type exit to terminate the /bin/bash command, the container stops but is not removed. You can start it again or remove it.
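The sequence of steps above can be modeled with a small Python toy. It is not how Docker is implemented: the registry contents and the ToyEngine and Container classes are hypothetical stand-ins that only record which step ran, so you can see that a second run of the same image skips the pull and that an exited container is kept until it is removed.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(eq=False)  # compare containers by identity, not by field values
class Container:
    image: str
    command: List[str]
    state: str = "created"
    # Each container gets its own, initially empty, read-write layer.
    writable_layer: Dict[str, str] = field(default_factory=dict)


@dataclass
class ToyEngine:
    registry: Dict[str, str]  # hypothetical: image name -> image contents
    local_images: Dict[str, str] = field(default_factory=dict)
    containers: List[Container] = field(default_factory=list)
    log: List[str] = field(default_factory=list)

    def run(self, image: str, command: List[str]) -> Container:
        # Pull the image only if it is not already cached locally.
        if image not in self.local_images:
            self.local_images[image] = self.registry[image]
            self.log.append(f"pull {image}")
        # Create the container together with its writable layer.
        container = Container(image, command)
        self.containers.append(container)
        self.log.append("create")
        # Attach to the default network (only logged in this toy).
        self.log.append("connect default network")
        # Start the requested command.
        container.state = "running"
        self.log.append("start " + " ".join(command))
        return container

    def exit(self, container: Container) -> None:
        # The process ends: the container stops but is not removed.
        container.state = "exited"

    def remove(self, container: Container) -> None:
        # Removing discards the container, including its writable layer.
        self.containers.remove(container)
```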

Services

Services allow you to scale containers across multiple Docker daemons, which all work together as a swarm with multiple managers and workers. Each member of a swarm is a Docker daemon, and all the daemons communicate using the Docker API. A service allows you to define the desired state, such as the number of replicas of the service that must be available at any given time. By default, the service is load-balanced across all worker nodes. To the consumer, the Docker service appears to be a single application. Docker Engine supports swarm mode in Docker 1.12 and higher.
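The desired-state idea can be illustrated with a toy reconciliation step in Python: compare how many replicas you asked for with how many tasks are actually running, then start or stop the difference. This is a simplified sketch, not Swarm's orchestrator; the function names are hypothetical, and the "fewest tasks first" placement only approximates the spreading behavior of Swarm's scheduler.

```python
from typing import Dict, List, Tuple


def reconcile(desired_replicas: int, running_tasks: List[str]) -> Tuple[int, List[str]]:
    """Compare desired state with observed state.

    Returns how many tasks must be started and which running tasks to stop.
    """
    missing = max(desired_replicas - len(running_tasks), 0)
    surplus = running_tasks[desired_replicas:]  # empty when not over target
    return missing, surplus


def place(new_tasks: int, tasks_per_node: Dict[str, int]) -> List[str]:
    """Spread new tasks, sending each to the node currently running the fewest."""
    load = dict(tasks_per_node)  # copy so the caller's dict is not mutated
    placements = []
    for _ in range(new_tasks):
        node = min(load, key=lambda n: (load[n], n))  # ties broken by node name
        load[node] += 1
        placements.append(node)
    return placements
```

If a worker node fails and its task disappears from the running list, the next call to reconcile reports one missing task, mirroring how a service keeps its replica count without manual intervention.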

The underlying technology

Docker is written in Go and takes advantage of several features of the Linux kernel to deliver its functionality.

Namespaces

Docker uses a technology called namespaces to provide the isolated workspace called the container. When you run a container, Docker creates a set of namespaces for that container.

These namespaces provide a layer of isolation. Each aspect of a container runs in a separate namespace and its access is limited to that namespace.

Docker Engine uses namespaces such as the following on Linux:

- The pid namespace: Process isolation (PID: Process ID).

- The net namespace: Managing network interfaces (NET: Networking).

- The ipc namespace: Managing access to IPC resources (IPC: InterProcess Communication).

- The mnt namespace: Managing filesystem mount points (MNT: Mount).

- The uts namespace: Isolating kernel and version identifiers (UTS: Unix Timesharing System).
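On Linux, you can inspect these namespaces yourself: the kernel exposes each namespace a process belongs to as a symbolic link under /proc/<pid>/ns, whose target names the namespace type and an inode number. The Python sketch below (helper names are hypothetical) reads those links; two processes share a namespace exactly when the targets match.

```python
import os
from typing import Dict


def namespaces(pid: str = "self") -> Dict[str, str]:
    """Map namespace type to its link target, e.g. {'pid': 'pid:[4026531836]'}.

    Linux only: returns an empty dict when /proc/<pid>/ns cannot be listed.
    """
    ns_dir = f"/proc/{pid}/ns"
    try:
        names = sorted(os.listdir(ns_dir))
    except OSError:
        return {}  # not Linux, no such process, or no permission
    result = {}
    for name in names:
        try:
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # other users' processes usually need root to inspect
    return result


def share_namespace(kind: str, pid_a: str, pid_b: str) -> bool:
    """True when both processes are in the same namespace of the given kind."""
    a, b = namespaces(pid_a), namespaces(pid_b)
    return kind in a and a[kind] == b.get(kind)
```

Comparing your shell's PID with the host PID of a container's main process (shown by docker inspect --format '{{.State.Pid}}' <container>) should typically report different pid and net namespaces under default settings; reading another process's links usually requires root.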

Control groups

Docker Engine on Linux also relies on another technology called control groups (cgroups). A cgroup limits an application to a specific set of resources. Control groups allow Docker Engine to share available hardware resources to containers and optionally enforce limits and constraints. For example, you can limit the memory available to a specific container.
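As a concrete example of such a limit, docker run --memory 512m ubuntu caps the container's memory at 512 MiB. On hosts using cgroup v2, that limit is written to the container's memory.max file, which contains either a byte count or the word max. The Python helpers below are a hypothetical sketch: one converts a --memory style value to bytes, assuming Docker's 1024-based k/m/g suffixes (a simplified subset of the formats Docker accepts), and one parses memory.max.

```python
from pathlib import Path
from typing import Optional

# Assumed 1024-based multipliers for the k/m/g suffixes of `docker run --memory`.
_UNITS = {"b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def memory_flag_to_bytes(value: str) -> int:
    """Convert a simple --memory style value such as '512m' or '1g' to bytes."""
    value = value.strip().lower()
    if value[-1] in _UNITS:
        return int(value[:-1]) * _UNITS[value[-1]]
    return int(value)  # no suffix: already bytes


def parse_memory_max(text: str) -> Optional[int]:
    """Parse a cgroup v2 memory.max file; 'max' means no limit (None)."""
    text = text.strip()
    return None if text == "max" else int(text)


def current_memory_limit(path: str = "/sys/fs/cgroup/memory.max") -> Optional[int]:
    """Read the limit of the cgroup this process sees at `path`.

    Inside a container on a cgroup v2 host this is usually the container's own
    limit; the host's root cgroup has no memory.max, so this raises there.
    """
    return parse_memory_max(Path(path).read_text())
```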

Union file systems

Union file systems, or UnionFS, are file systems that operate by creating layers, making them very lightweight and fast. Docker Engine uses UnionFS to provide the building blocks for containers. Docker Engine can use multiple UnionFS variants, including AUFS, btrfs, vfs, and DeviceMapper.

Container format

Docker Engine combines the namespaces, control groups, and UnionFS into a wrapper called a container format. The default container format is libcontainer. In the future, Docker may support other container formats by integrating with technologies such as BSD Jails or Solaris Zones.

A Beginner-Friendly Introduction to Containers, VMs and Docker

If you’re a programmer or techie, chances are you’ve at least heard of Docker: a helpful tool for packing, shipping, and running applications within “containers.” It’d be hard not to, with all the attention it’s getting these days — from developers and system admins alike. Even the big dogs like Google, VMware and Amazon are building services to support it.

Regardless of whether or not you have an immediate use-case in mind for Docker, I still think it’s important to understand some of the fundamental concepts around what a “container” is and how it compares to a Virtual Machine (VM). While the Internet is full of excellent usage guides for Docker, I couldn’t find many beginner-friendly conceptual guides, particularly on what a container is made up of. So, hopefully, this post will solve that problem :)

Let’s start by understanding what VMs and containers even are.

What are “containers” and “VMs”?

Containers and VMs are similar in their goals: to isolate an application and its dependencies into a self-contained unit that can run anywhere.

Moreover, containers and VMs reduce the need for dedicated physical hardware, allowing for more efficient use of computing resources, both in terms of energy consumption and cost effectiveness.

The main difference between containers and VMs is in their architectural approach. Let’s take a closer look.

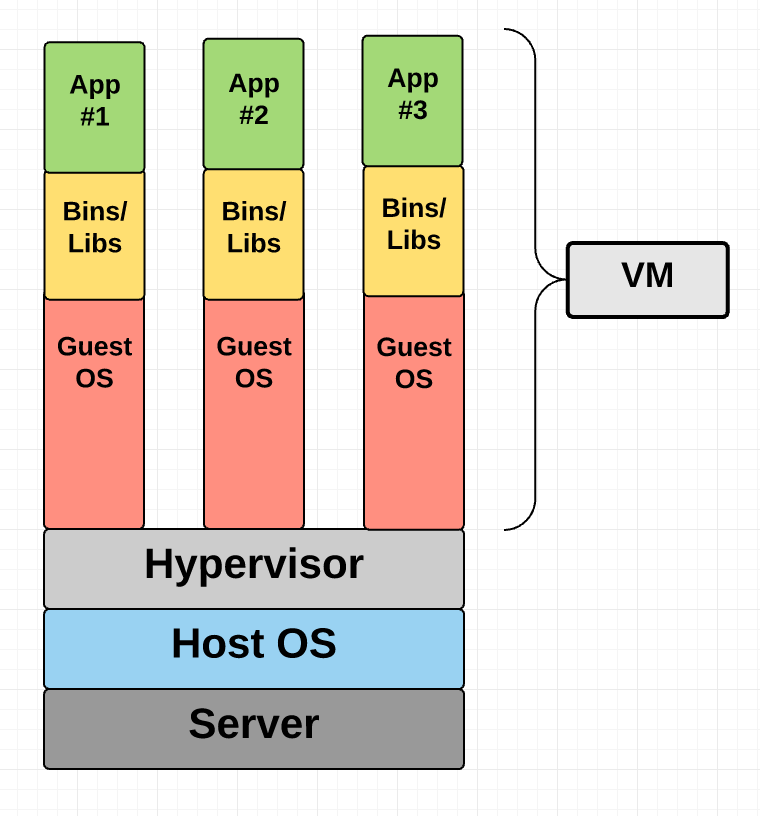

Virtual Machines

A VM is essentially an emulation of a real computer that executes programs like a real computer. VMs run on top of a physical machine using a “hypervisor”. A hypervisor, in turn, runs on either a host machine or on “bare-metal”.

Let’s unpack the jargon:

A hypervisor is a piece of software, firmware, or hardware that VMs run on top of. The hypervisors themselves run on physical computers, referred to as the “host machine”. The host machine provides the VMs with resources, including RAM and CPU. These resources are divided between VMs and can be distributed as you see fit. So if one VM is running a more resource heavy application, you might allocate more resources to that one than the other VMs running on the same host machine.

The VM that is running on the host machine (again, using a hypervisor) is also often called a “guest machine.” This guest machine contains both the application and whatever it needs to run that application (e.g. system binaries and libraries). It also carries an entire virtualized hardware stack of its own, including virtualized network adapters, storage, and CPU — which means it also has its own full-fledged guest operating system. From the inside, the guest machine behaves as its own unit with its own dedicated resources. From the outside, we know that it’s a VM — sharing resources provided by the host machine.

As mentioned above, a guest machine can run on either a hosted hypervisor or a bare-metal hypervisor. There are some important differences between them.

First off, a hosted virtualization hypervisor runs on the operating system of the host machine. For example, a computer running OSX can have a hosted hypervisor (e.g. VirtualBox or VMware Workstation 8) installed on top of that OS. The VM doesn’t have direct access to hardware, so it has to go through the host operating system (in our case, the Mac’s OSX).

The benefit of a hosted hypervisor is that the underlying hardware is less important. The host’s operating system is responsible for the hardware drivers instead of the hypervisor itself, and is therefore considered to have more “hardware compatibility.” On the other hand, this additional layer in between the hardware and the hypervisor creates more resource overhead, which lowers the performance of the VM.

A bare metal hypervisor environment tackles the performance issue by installing on and running from the host machine’s hardware. Because it interfaces directly with the underlying hardware, it doesn’t need a host operating system to run on. In this case, the first thing installed on a host machine’s server as the operating system will be the hypervisor. Unlike the hosted hypervisor, a bare-metal hypervisor has its own device drivers and interacts with each component directly for any I/O, processing, or OS-specific tasks. This results in better performance, scalability, and stability. The tradeoff here is that hardware compatibility is limited because the hypervisor can only have so many device drivers built into it.

After all this talk about hypervisors, you might be wondering why we need this additional “hypervisor” layer in between the VM and the host machine at all.

Well, since the VM has a virtual operating system of its own, the hypervisor plays an essential role in providing the VMs with a platform to manage and execute this guest operating system. It allows for host computers to share their resources amongst the virtual machines that are running as guests on top of them.

Virtual Machine Diagram

As you can see in the diagram, VMs package up the virtual hardware, a kernel (i.e. OS) and user space for each new VM.

Container

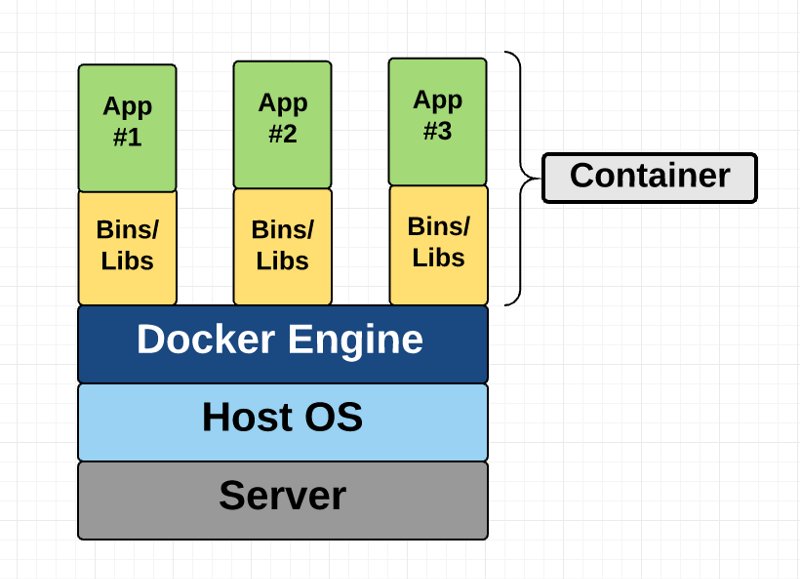

Unlike a VM which provides hardware virtualization, a container provides operating-system-level virtualization by abstracting the “user space”. You’ll see what I mean as we unpack the term container.

For all intents and purposes, containers look like a VM. For example, they have private space for processing, can execute commands as root, have a private network interface and IP address, allow custom routes and iptables rules, can mount file systems, etc.

The one big difference between containers and VMs is that containers share the host system’s kernel with other containers.

Container Diagram

This diagram shows you that containers package up just the user space, and not the kernel or virtual hardware like a VM does. Each container gets its own isolated user space to allow multiple containers to run on a single host machine. We can see that all the operating system level architecture is being shared across containers. The only parts that are created from scratch are the bins and libs. This is what makes containers so lightweight.

Where does Docker come in?

Docker is an open-source project based on Linux containers. It uses Linux Kernel features like namespaces and control groups to create containers on top of an operating system.

Containers are far from new; Google has been using its own container technology for years. Other container technologies include Solaris Zones, BSD jails, and LXC, which have been around for many years.

So why is Docker all of a sudden gaining steam?

1. Ease of use: Docker has made it much easier for anyone — developers, systems admins, architects and others — to take advantage of containers in order to quickly build and test portable applications. It allows anyone to package an application on their laptop, which in turn can run unmodified on any public cloud, private cloud, or even bare metal. The mantra is: “build once, run anywhere.”

2. Speed: Docker containers are very lightweight and fast. Since containers are just sandboxed environments running on the kernel, they take up fewer resources. You can create and run a Docker container in seconds, compared to VMs which might take longer because they have to boot up a full virtual operating system every time.

3. Docker Hub: Docker users also benefit from the increasingly rich ecosystem of Docker Hub, which you can think of as an “app store for Docker images.” Docker Hub has tens of thousands of public images created by the community that are readily available for use. It’s incredibly easy to search for images that meet your needs, ready to pull down and use with little-to-no modification.

4. Modularity and Scalability: Docker makes it easy to break out your application’s functionality into individual containers. For example, you might have your Postgres database running in one container and your Redis server in another while your Node.js app is in another. With Docker, it’s become easier to link these containers together to create your application, making it easy to scale or update components independently in the future.

Last but not least, who doesn’t love the Docker whale? ;)

Fundamental Docker Concepts

Now that we’ve got the big picture in place, let’s go through the fundamental parts of Docker piece by piece:

Docker Engine

Docker engine is the layer on which Docker runs. It’s a lightweight runtime and tooling that manages containers, images, builds, and more. It runs natively on Linux systems and is made up of:

1. A Docker Daemon that runs in the host computer.

2. A Docker Client that then communicates with the Docker Daemon to execute commands.

3. A REST API for interacting with the Docker Daemon remotely.

Docker Client

The Docker Client is what you, as the end-user of Docker, communicate with. Think of it as the UI for Docker. For example, when you do…

you are communicating with the Docker Client, which then communicates your instructions to the Docker Daemon.

Docker Daemon

The Docker daemon is what actually executes commands sent to the Docker Client — like building, running, and distributing your containers. The Docker Daemon runs on the host machine, but as a user, you never communicate directly with the Daemon. The Docker Client can run on the host machine as well, but it’s not required to. It can run on a different machine and communicate with the Docker Daemon that’s running on the host machine.

Dockerfile

A Dockerfile is where you write the instructions to build a Docker image. These instructions can be:

- RUN apt-get -y install some-package: to install a software package

- EXPOSE 8000: to expose a port

- ENV ANT_HOME /usr/local/apache-ant: to pass an environment variable

and so forth. Once you’ve got your Dockerfile set up, you can use the docker build command to build an image from it. Here’s an example of a Dockerfile:

Docker Image

Images are read-only templates that you build from a set of instructions written in your Dockerfile. Images define both what you want your packaged application and its dependencies to look like and what processes to run when it’s launched.
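Every Dockerfile line has the shape INSTRUCTION arguments, with # comment lines and a trailing backslash to continue long instructions. The Python sketch below (a hypothetical helper, far simpler than Docker's real parser: no parser directives, heredocs, or custom escape characters) splits a Dockerfile into its instructions, which is a handy way to see the sequence of steps an image is built from.

```python
from typing import List, Tuple


def _split(line: str) -> Tuple[str, str]:
    # The first word is the instruction; everything after it is its arguments.
    parts = line.strip().split(None, 1)
    return parts[0].upper(), parts[1] if len(parts) > 1 else ""


def parse_dockerfile(text: str) -> List[Tuple[str, str]]:
    """Split Dockerfile text into (INSTRUCTION, arguments) pairs."""
    steps = []
    pending = ""  # accumulates a backslash-continued instruction
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank and comment lines are skipped, even mid-continuation
        if line.endswith("\\"):
            pending += line[:-1].strip() + " "
            continue
        steps.append(_split(pending + line))
        pending = ""
    if pending.strip():
        steps.append(_split(pending))  # file ended in the middle of a continuation
    return steps
```

For example, parse_dockerfile("FROM ubuntu\nEXPOSE 8000") returns [("FROM", "ubuntu"), ("EXPOSE", "8000")].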

The Docker image is built using a Dockerfile. Each instruction in the Dockerfile adds a new “layer” to the image, with layers representing a portion of the image’s file system that either adds to or replaces the layer below it. Layers are key to Docker’s lightweight yet powerful structure. Docker uses a Union File System to achieve this:

Union File Systems

Docker uses Union File Systems to build up an image. You can think of a Union File System as a stackable file system, meaning files and directories of separate file systems (known as branches) can be transparently overlaid to form a single file system.

The contents of directories which have the same path within the overlaid branches are seen as a single merged directory, which avoids the need to create separate copies of each layer. Instead, they can all be given pointers to the same resource; when a file in one of these layers needs to be modified, the system copies that file and modifies the copy, leaving the original unchanged. That’s how file systems can appear writable without actually allowing writes to the underlying layers. (In other words, a “copy-on-write” system.)
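The copy-on-write behavior described above can be sketched as a toy in Python, with each layer modeled as a dictionary from path to file contents. Lookups search from the top layer down, writes land only in the writable upper layer, and deletions are recorded as a "whiteout" marker (the term overlayfs uses) rather than touching a lower layer. UnionView is a hypothetical name, and real union file systems work on disk, not in memory.

```python
from typing import Dict, List, Optional

WHITEOUT = object()  # marker meaning "deleted in an upper layer"


class UnionView:
    """Read-only lower layers plus one writable upper layer (copy-on-write)."""

    def __init__(self, lower_layers: List[Dict[str, str]]):
        self.lower = lower_layers           # ordered bottom -> top, never modified
        self.upper: Dict[str, object] = {}  # the container's writable layer

    def read(self, path: str) -> Optional[str]:
        # Search from the top layer down; the first match wins.
        for layer in [self.upper] + list(reversed(self.lower)):
            if path in layer:
                value = layer[path]
                return None if value is WHITEOUT else value
        return None

    def write(self, path: str, content: str) -> None:
        # Writes only ever land in the upper layer.
        self.upper[path] = content

    def append(self, path: str, extra: str) -> None:
        # Copy-up: the whole file is copied into the upper layer, then modified.
        self.write(path, (self.read(path) or "") + extra)

    def delete(self, path: str) -> None:
        # Hide the file with a whiteout instead of removing it from a lower layer.
        self.upper[path] = WHITEOUT
```

Because lower layers are never modified, any number of containers can share the same image layers, each with its own small upper layer.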

Layered systems offer two main benefits:

1. Duplication-free: layers help avoid duplicating a complete set of files every time you use an image to create and run a new container, making instantiation of docker containers very fast and cheap.

2. Layer segregation: Making a change is much faster — when you change an image, Docker only propagates the updates to the layer that was changed.

Volumes

Volumes are the “data” part of a container, initialized when a container is created. Volumes allow you to persist and share a container’s data. Data volumes are separate from the default Union File System and exist as normal directories and files on the host filesystem. So, even if you destroy, update, or rebuild your container, the data volumes will remain untouched. When you want to update a volume, you make changes to it directly. (As an added bonus, data volumes can be shared and reused among multiple containers, which is pretty neat.)

Docker Containers

A Docker container, as discussed above, wraps an application’s software into an invisible box with everything the application needs to run. That includes the operating system’s user-space files (but not its kernel), application code, runtime, system tools, system libraries, etc. Docker containers are built off Docker images. Since images are read-only, Docker adds a read-write file system over the read-only file system of the image to create a container.

Moreover, when creating the container, Docker creates a network interface so that the container can talk to the local host, attaches an available IP address to the container, and executes the process that you specified to run your application when defining the image.
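A simplified sketch of that address allocation in Python, using the standard ipaddress module. It assumes the common defaults for Docker's default bridge network, the subnet 172.17.0.0/16 with the gateway at 172.17.0.1 (your host may be configured differently), and simply hands out the lowest free address; Docker's real IP address management is more involved.

```python
import ipaddress
from typing import Set

# Assumed defaults for Docker's default bridge network; check your own host.
DEFAULT_SUBNET = ipaddress.ip_network("172.17.0.0/16")
DEFAULT_GATEWAY = ipaddress.ip_address("172.17.0.1")


def next_free_ip(in_use: Set[ipaddress.IPv4Address],
                 subnet: ipaddress.IPv4Network = DEFAULT_SUBNET,
                 gateway: ipaddress.IPv4Address = DEFAULT_GATEWAY) -> ipaddress.IPv4Address:
    """Return the lowest usable address that is neither the gateway nor taken."""
    for candidate in subnet.hosts():  # hosts() skips network and broadcast addresses
        if candidate != gateway and candidate not in in_use:
            return candidate
    raise RuntimeError(f"no free addresses left in {subnet}")
```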

Once you’ve successfully created a container, you can then run it in any environment without having to make changes.

Double-clicking on “containers”

Phew! That’s a lot of moving parts. One thing that always got me curious was how a container is actually implemented, especially since there isn’t any abstract infrastructure boundary around a container. After lots of reading, it all makes sense so here’s my attempt at explaining it to you! :)

The term “container” is really just an abstract concept to describe how a few different features work together to visualize a “container”. Let’s run through them real quick:

1) Namespaces

Namespaces provide containers with their own view of the underlying Linux system, limiting what the container can see and access. When you run a container, Docker creates namespaces that the specific container will use.

There are several different types of namespaces in a kernel that Docker makes use of, for example:

a. NET: Provides a container with its own view of the network stack of the system (e.g. its own network devices, IP addresses, IP routing tables, /proc/net directory, port numbers, etc.).

b. PID: PID stands for Process ID. If you’ve ever run ps aux in the command line to check what processes are running on your system, you’ll have seen a column named “PID”. The PID namespace gives containers their own scoped view of processes they can view and interact with, including an independent init (PID 1), which is the “ancestor of all processes”.

c. MNT: Gives a container its own view of the “mounts” on the system. So, processes in different mount namespaces have different views of the filesystem hierarchy.

d. UTS: UTS stands for UNIX Timesharing System. It allows a process to identify system identifiers (i.e. hostname, domainname, etc.). UTS allows containers to have their own hostname and NIS domain name that is independent of other containers and the host system.

e. IPC: IPC stands for InterProcess Communication. IPC namespace is responsible for isolating IPC resources between processes running inside each container.

f. USER: This namespace is used to isolate users within each container. It functions by allowing containers to have a different view of the uid (user ID) and gid (group ID) ranges, as compared with the host system. As a result, a process’s uid and gid can be different inside and outside a user namespace, which also allows a process to have an unprivileged user outside a container without sacrificing root privilege inside a container.
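The uid/gid mapping is visible in /proc/<pid>/uid_map and gid_map, where each line holds three numbers: the first ID inside the namespace, the corresponding first ID outside it, and the length of the range. The Python sketch below (hypothetical helper names) parses that format and translates an ID; the sample range in the usage note is illustrative, similar in spirit to the subordinate ranges that Docker's userns-remap daemon option draws from /etc/subuid.

```python
from typing import List, Optional, Tuple

IdRange = Tuple[int, int, int]  # (first ID inside, first ID outside, length)


def parse_id_map(text: str) -> List[IdRange]:
    """Parse the contents of /proc/<pid>/uid_map or gid_map."""
    ranges = []
    for line in text.splitlines():
        if line.strip():
            inside, outside, length = (int(field) for field in line.split())
            ranges.append((inside, outside, length))
    return ranges


def to_host_id(inside_id: int, id_map: List[IdRange]) -> Optional[int]:
    """Translate an ID seen inside the namespace into the host's ID."""
    for inside_start, outside_start, length in id_map:
        if inside_start <= inside_id < inside_start + length:
            return outside_start + (inside_id - inside_start)
    return None  # not mapped: no host ID corresponds to it
```

For example, with the single mapping 0 100000 65536, root (UID 0) inside the container corresponds to the unprivileged UID 100000 on the host, and UID 1000 corresponds to 101000.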

Docker uses these namespaces together in order to isolate and begin the creation of a container. The next feature is called control groups.

2) Control groups

Control groups (also called cgroups) are a Linux kernel feature that isolates, prioritizes, and accounts for the resource usage (CPU, memory, disk I/O, network, etc.) of a set of processes. In this sense, a cgroup ensures that Docker containers only use the resources they need and, if needed, sets limits on what resources a container can use. Cgroups also ensure that a single container doesn’t exhaust one of those resources and bring the entire system down.
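CPU limits follow the same pattern. On cgroup v2, a cgroup's cpu.max file holds a quota and a period in microseconds (or max for no limit), and Docker's documentation describes --cpus=1.5 as equivalent to a quota of 150000 with a period of 100000. The hypothetical helpers below convert between the two views.

```python
from typing import Optional


def parse_cpu_max(text: str) -> Optional[float]:
    """Parse cgroup v2 cpu.max ('QUOTA PERIOD'); return CPUs allowed, or None if unlimited."""
    quota, period = text.split()
    if quota == "max":
        return None
    return int(quota) / int(period)


def cpus_flag_to_cpu_max(cpus: float, period_us: int = 100_000) -> str:
    """Express a --cpus value as the 'QUOTA PERIOD' pair it corresponds to."""
    return f"{round(cpus * period_us)} {period_us}"
```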

Lastly, union file systems is another feature Docker uses:

3) Isolated Union file system:

Described above in the Docker Images section :)

This is really all there is to a Docker container (of course, the devil is in the implementation details — like how to manage the interactions between the various components).

The Future of Docker: Docker and VMs Will Co-exist

While Docker is certainly gaining a lot of steam, I don’t believe it will become a real threat to VMs. Containers will continue to gain ground, but there are many use cases where VMs are still better suited.

For instance, if you need to run multiple applications on multiple servers, it probably makes sense to use VMs. On the other hand, if you need to run many copies of a single application, Docker offers some compelling advantages.

Moreover, while containers allow you to break your application into more functional discrete parts to create a separation of concerns, it also means there’s a growing number of parts to manage, which can get unwieldy.

Security has also been an area of concern with Docker containers — since containers share the same kernel, the barrier between containers is thinner. While a full VM can only issue hypercalls to the host hypervisor, a Docker container can make syscalls to the host kernel, which creates a larger surface area for attack. When security is particularly important, developers are likely to pick VMs, which are isolated by abstracted hardware — making it much more difficult to interfere with each other.

Of course, issues like security and management are certain to evolve as containers get more exposure in production and further scrutiny from users. For now, the debate about containers vs. VMs is really best left to DevOps folks who live and breathe them every day!

Conclusion

I hope you’re now equipped with the knowledge you need to learn more about Docker and maybe even use it in a project one day.

Docker Tutorial for Beginners - A Full DevOps Course on How to Run Applications in Containers

Get started using Docker with this end-to-end beginners course with hands-on labs.

Docker is an open platform for developers and sysadmins to build, ship, and run distributed applications, whether on laptops, data center VMs, or the cloud.

In this course you will learn Docker through a series of lectures that use animation, illustration and some fun analogies that simplify complex concepts. We have demos that show how to install and get started with Docker, and most importantly we have hands-on labs that you can access right in your browser.

⭐️ Course Contents ⭐️

⌨️ (0:00:00) Introduction

⌨️ (0:02:35) Docker Overview

⌨️ (0:05:10) Getting Started

⌨️ (0:16:58) Install Docker

⌨️ (0:21:00) Commands

⌨️ (0:29:00) Labs

⌨️ (0:33:12) Run

⌨️ (0:42:19) Environment Variables

⌨️ (0:44:07) Images

⌨️ (0:51:38) CMD vs ENTRYPOINT

⌨️ (0:58:37) Networking

⌨️ (1:03:55) Storage

⌨️ (1:16:27) Compose

⌨️ (1:34:49) Registry

⌨️ (1:49:38) Engine

⌨️ (1:34:49) Docker on Windows

⌨️ (1:53:22) Docker on Mac

⌨️ (1:59:25) Docker Swarm

⌨️ (2:03:21) Kubernetes

⌨️ (2:09:30) Conclusion

Docker for Beginners: Full Course

Get started using Docker with this end-to-end beginner’s course with hands-on labs.

Docker is an open platform for developers and sysadmins to build, ship, and run distributed applications, whether on laptops, data center VMs, or the cloud.

In this course, you will learn Docker through a series of lectures that use animation, illustration and some fun analogies that simplify complex concepts. We have demos that show how to install and get started with Docker, and most importantly we have hands-on labs that you can access right in your browser.

But first, let’s look at the objectives of this course. In this course, we first try to understand what containers are, what Docker is, why you might need it and what it can do for you. We will see how to run a Docker container, how to build your own Docker image, how networking works in Docker, how to use docker-compose, what a Docker registry is, and how to deploy your own private registry. We then look at some of these concepts in depth and try to understand how Docker really works. We look at Docker for Windows and Mac, before finally getting a basic introduction to container orchestration tools like Docker Swarm and Kubernetes.

Here’s a quick note about hands-on labs. First of all, to complete this course you don’t have to set up your own labs. You may set them up if you wish to have your own environment, but as part of this course, we provide real labs that you can access right in your browser. The labs give you instant access to a terminal on a Docker host and an accompanying quiz portal. The quiz portal asks a set of questions, such as exploring the environment and gathering information, or asks you to perform an action such as running a Docker container. The quiz portal then validates your work and gives you feedback instantly. Every lecture in this course is accompanied by challenging interactive quizzes like these that make learning Docker a fun activity.

So I hope you are as thrilled as I am to get started. So let us begin.

This course is designed for beginners in DevOps.

- 0:00 Docker For Beginners

- 2:37 Docker Overview

- 16:55 Docker Installation

- 20:00 Docker Commands

- 42:06 Docker Environment variables

- 44:05 Docker Images

- 51:36 Docker CMD vs Entrypoint

- 58:30 Docker Networking

- 1:03:57 Docker Storage

- 1:16:19 Docker Compose

- 1:34:39 Docker Registry

- 1:39:30 Docker Engine

- 1:46:06 Docker on Windows

- 1:52:06 Docker on Mac

- 1:54:39 Container Orchestration

- 1:58:53 Docker Swarm

- 2:02:35 Kubernetes

- 2:08:40 Conclusion

Docker Full Course - Learn Docker in 5 Hours | Docker Tutorial For Beginners

This Edureka Docker Full Course video will help you understand and learn Docker in detail. This Docker tutorial is ideal for both beginners and professionals who want to master container concepts. Below are the topics covered in this Docker tutorial for beginners video:

1:33 Introduction to Docker

1:38 Problems before Docker

4:28 How Does Docker Solve the Problem?

5:48 What is Docker?

6:38 Docker in a Nutshell

8:03 Docker Examples

9:40 Docker Case Study Indiana University

13:00 Docker Registry

14:30 Docker Images & Containers

18:45 Docker Compose

19:05 Install & Setup Docker

19:10 Install Docker

22:15 Docker for Windows

22:25 Why use Docker for Windows?

25:30 Docker For Windows Demo

39:52 Dockerfile & Commands

39:57 Dockerfile Syntax

41:22 Dockerfile Commands

48:52 Creating an Image to Install Apache Web Server

56:57 Dockerfile for Installing Nginx

1:03:37 Docker Commands

1:03:42 Most Used Docker Commands

1:04:42 Basic Docker Commands

1:45:47 Advanced Docker Commands

1:58:02 Docker Compose & Swarm

1:58:07 Docker Compose

1:58:12 What is Docker Compose?

2:03:27 What is MEAN Stack Application?

2:04:12 Demo

2:21:02 Docker Swarm

2:21:07 What is Docker Swarm?

2:28:37 Demo

2:32:42 Docker Swarm Commands

3:03:47 Docker Networking

3:05:02 Goals of Docker Networking

3:06:42 Container Network Model

3:08:27 Container Network Model Objects

3:11:27 Network Drivers

3:11:42 Network Driver: Bridge

3:12:22 Network Driver: Host

3:13:02 Network Driver: None

3:13:17 Network Driver: Overlay

3:14:22 Network Driver: Macvlan

3:14:57 Docker Swarm

3:15:22 Docker Swarm Clusters

3:15:47 Docker Swarm: Managers & Clusters

3:16:32 Hands-On 1

3:19:02 Hands-On 2

3:28:42 Dockerizing Applications

3:29:42 What is Angular?

3:32:42 What is DevOps?

3:33:12 DevOps Tools & Techniques

3:34:12 Deploying an Angular Application

3:37:17 Demo

4:01:12 Docker Jenkins

4:02:17 What is Jenkins?

4:03:40 How Does Jenkins Work?

4:04:40 Containerization vs Virtualization

4:07:50 Docker Use-case

4:08:50 What are Microservices?

4:10:35 What are Microservices?

4:11:05 Advantages of Microservice Architecture

4:12:15 VMs vs Docker Containers for Microservices

4:13:35 Use Case

4:23:50 Node.js Docker

4:24:00 Why Use Node.js with Docker?

4:24:40 Demo: Node.js with Docker

4:38:00 Docker vs VM

4:38:30 Virtual Machines

4:38:35 What is a Virtual Machine?

4:39:25 Benefits of Virtual Machines

4:39:45 Popular Virtual Machine Providers

4:40:00 Docker Containers

4:40:05 What is a Docker Container?

4:41:30 Types of Containers

4:42:10 Benefits of Containers

4:42:55 Major Differences

4:46:30 Use-case

4:46:45 How Does PayPal Use Docker & VMs?

4:50:45 Docker Swarm vs Kubernetes

4:53:25 Installation & Cluster Configuration

4:55:00 GUI

4:56:30 Scalability

4:57:00 Auto-scaling

4:58:20 Load Balancing

4:59:25 Rolling Updates & Rollbacks

5:01:35 Data Volumes

5:02:10 Logging & Monitoring

5:03:35 Demo

5:14:55 Kubernetes vs Docker Swarm Mindshare

Docker Tutorial | Docker Tutorial for Beginners | What is Docker

In this Docker tutorial for beginners video, we cover Docker from scratch. You will start by learning what Docker is and why we need it, and then move on to understand Docker and its various components. Towards the end, we will also learn and implement Docker Swarm. This is an in-depth video on Docker containerization, with demos so that you understand the concepts well.

Following topics are covered in this docker tutorial for beginners video:

- 01:01 - problems before docker

- 07:47 - what is docker

- 13:41 - docker vs virtual machine

- 16:05 - docker installation

- 20:50 - docker container lifecycle

- 23:20 - common docker operations

- 59:14 - what is a dockerfile

- 01:16:30 - introduction to docker volumes

- 01:58:40 - container orchestration in docker using docker swarm
