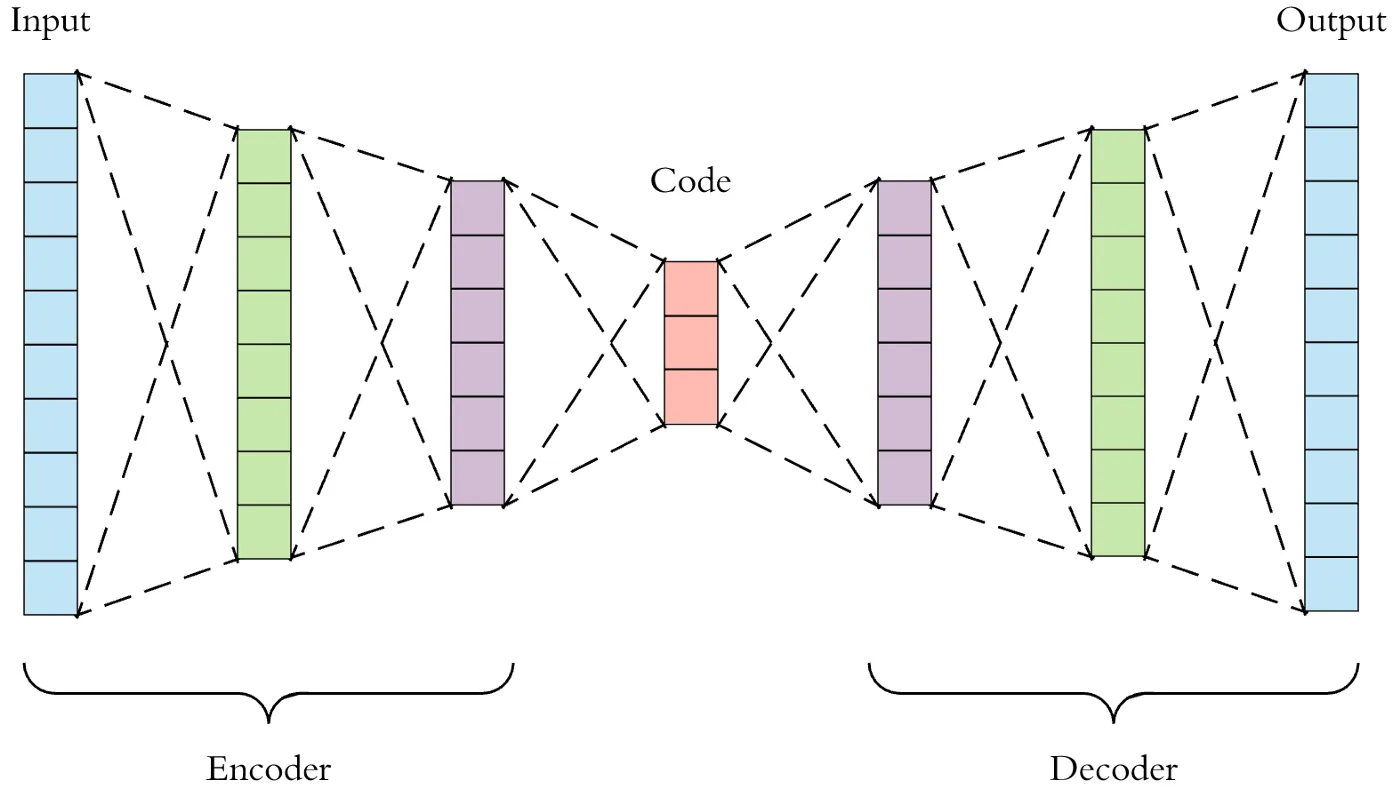

Since their introduction in 1986 [1], general Autoencoder Neural Networks have permeated research in most major divisions of modern Machine Learning. Having proven exceptionally effective at embedding complex data, Autoencoders offer a straightforward means of encoding complex non-linear dependencies into simple vector representations. But while their effectiveness has been demonstrated in many settings, they often fall short when reconstructing sparse data, especially when the columns are correlated, as in One Hot Encodings.
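To make "sparse" and "correlated columns" concrete, here is a minimal sketch of what a One Hot Encoded column looks like in PyTorch. The label values and the class count are made up for illustration. The all-zeros comparison at the end is my own illustrative assumption about why reconstruction can be hard, not a result from any experiment.

```python
import torch
import torch.nn.functional as F

# Hypothetical categorical feature: 4 samples, 5 possible classes.
labels = torch.tensor([0, 3, 3, 1])
ohe = F.one_hot(labels, num_classes=5).float()  # shape: (4, 5)

# Every row contains exactly one 1, so the columns are mutually exclusive:
# knowing one column is 1 tells you all the others are 0.
row_sums = ohe.sum(dim=1)

# Fraction of entries that are zero (grows with the number of classes).
sparsity = (ohe == 0).float().mean().item()

# Illustrative assumption: a "reconstruction" that predicts all zeros
# already gets a small mean squared error on this kind of data.
all_zeros_mse = F.mse_loss(torch.zeros_like(ohe), ohe).item()

print(ohe)
print(row_sums, sparsity, all_zeros_mse)
```

With more classes per feature, the share of zeros rises and the all-zeros baseline looks even better under an element-wise loss, which hints at why a different loss may be needed.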

In this article, I’ll briefly discuss One Hot Encoding (OHE) data and general autoencoders. Then I’ll cover the use cases that give rise to issues with Autoencoders trained on One Hot Encoded data. Lastly, I’ll discuss the problem of reconstructing sparse OHE data in depth, then cover 3 loss functions that I found to work well under these conditions:

- CosineEmbeddingLoss

- Sørensen-Dice Coefficient Loss

- Multi-Task Learning Losses of Individual OHE Components

These losses address the aforementioned challenges, and I include code to implement them in PyTorch.

#one-hot-encoding #pytorch #sparse-data #autoencoder #data-science