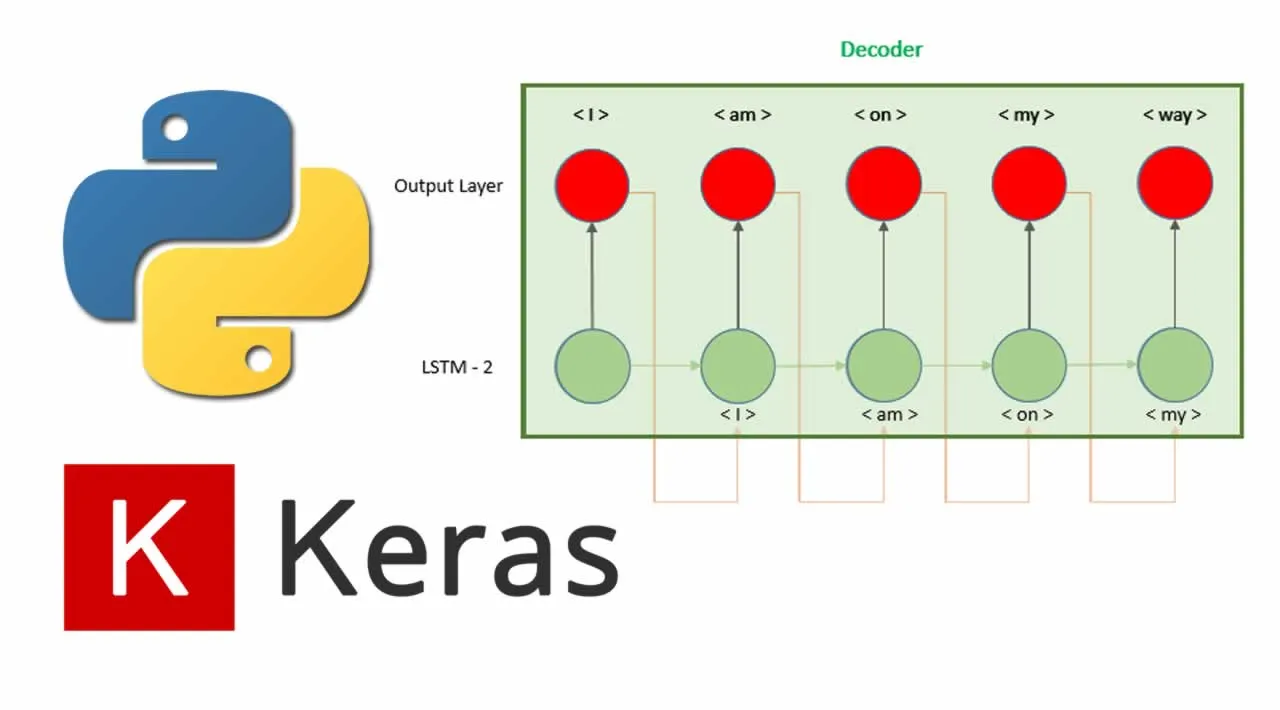

This article is a practical guide to developing an encoder-decoder model, more precisely a Sequence-to-Sequence (Seq2Seq) model, with Python and Keras. In the previous tutorial we developed a many-to-many translation model similar to the one in the image below:

This structure has one important limitation: the sequence length. As we can see in the image, the input sequence and the output sequence must have the same length. What if we need them to have different lengths? Examples of models whose input and output lengths differ are sentiment analysis, which receives a sequence of words and outputs a number, and image captioning, where the input is an image and the output is a sequence of words.
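To make the length constraint concrete, here is a minimal sketch, assuming TensorFlow 2.x with its bundled Keras, of a many-to-many model built from an LSTM with `return_sequences=True`. The vocabulary sizes and layer width are arbitrary placeholders, not values from the previous tutorial. Because the LSTM emits one output per input timestep, the output sequence always has exactly as many steps as the input sequence.

```python
import numpy as np
from tensorflow import keras

# Arbitrary placeholder sizes (assumptions for illustration only)
num_input_tokens = 20   # size of the one-hot input vocabulary
num_output_tokens = 30  # size of the one-hot output vocabulary
latent_dim = 16         # number of LSTM units

# Many-to-many model: one prediction per input timestep
many_to_many = keras.Sequential([
    keras.Input(shape=(None, num_input_tokens)),
    keras.layers.LSTM(latent_dim, return_sequences=True),
    # Dense is applied independently at every timestep
    keras.layers.Dense(num_output_tokens, activation="softmax"),
])

# A batch of 2 random placeholder sequences, each 7 timesteps long
x = np.random.rand(2, 7, num_input_tokens).astype("float32")
y = many_to_many.predict(x, verbose=0)
print(y.shape)  # (2, 7, 30): the output length is tied to the input length
```

Whatever length we feed in, the second dimension of the output matches it, which is exactly the restriction described above.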

If we want to develop models where the input and output lengths differ, we need an encoder-decoder model. In this tutorial we will see how to develop such a model and apply it to a translation exercise. The model can be represented as follows:
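As a rough preview, the sketch below shows one common way to wire a training-time encoder-decoder in Keras, assuming TensorFlow 2.x and one-hot encoded tokens; it follows the widely used LSTM Seq2Seq pattern and is not necessarily the exact model built later in this tutorial. The encoder reads the source sequence and keeps only its final hidden and cell states. Those states initialize the decoder, which reads the target sequence (shifted by one step, i.e. teacher forcing) and predicts a token at each step. All sizes and the random input data are placeholder assumptions.

```python
import numpy as np
from tensorflow import keras

# Placeholder sizes (assumptions for illustration only)
num_encoder_tokens = 20  # source vocabulary size (one-hot)
num_decoder_tokens = 30  # target vocabulary size (one-hot)
latent_dim = 16          # LSTM units shared by encoder and decoder

# Encoder: read the whole source sequence, keep only the final states
encoder_inputs = keras.Input(shape=(None, num_encoder_tokens))
_, state_h, state_c = keras.layers.LSTM(latent_dim, return_state=True)(encoder_inputs)
encoder_states = [state_h, state_c]

# Decoder: start from the encoder states and emit one output per target step
decoder_inputs = keras.Input(shape=(None, num_decoder_tokens))
decoder_lstm = keras.layers.LSTM(latent_dim, return_sequences=True, return_state=True)
decoder_seq, _, _ = decoder_lstm(decoder_inputs, initial_state=encoder_states)
decoder_outputs = keras.layers.Dense(num_decoder_tokens, activation="softmax")(decoder_seq)

seq2seq = keras.Model([encoder_inputs, decoder_inputs], decoder_outputs)
seq2seq.compile(optimizer="rmsprop", loss="categorical_crossentropy")

# Random placeholder data: sources of length 7, targets of length 4
src = np.random.rand(2, 7, num_encoder_tokens).astype("float32")
tgt = np.random.rand(2, 4, num_decoder_tokens).astype("float32")
pred = seq2seq.predict([src, tgt], verbose=0)
print(pred.shape)  # (2, 4, 30): output length follows the target, not the source
```

The only link between the two halves is the pair of encoder states, so the source and target lengths are free to differ. At inference time the decoder is typically run one step at a time, feeding back its own predictions; that loop is omitted from this sketch.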
