In regression tasks, we do not always want just a single point prediction. In fact, point predictions are never perfectly accurate, so instead of chasing exact precision, sometimes a prediction interval is what we need. In those cases we turn to quantile regression, which lets us predict an interval estimate of the target.

Loss Function

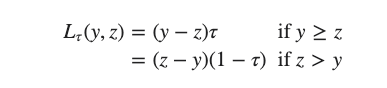

Fortunately, the powerful LightGBM supports quantile prediction. The major difference between quantile regression and ordinary regression lies in the loss function, which is called pinball loss or quantile loss. There is a good explanation of pinball loss here; it has the formula:

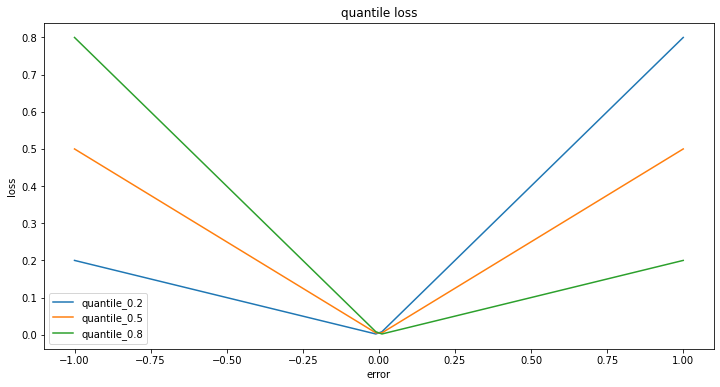
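In case the image does not render, here is the usual textbook form of the pinball loss, written with the same symbols as this post. This is a sketch of the standard definition and assumes the image above shows that same formula:

$$
L_{\tau}(y, z) =
\begin{cases}
\tau \,(y - z) & \text{if } y \ge z \\
(1 - \tau)\,(z - y) & \text{if } y < z
\end{cases}
$$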

Here, y is the actual value, z is the prediction, and 𝛕 is the target quantile. At first glance, we can see that the loss function is asymmetric unless the quantile equals 0.5. Let's visualize it:
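The asymmetry is also easy to check with a few numbers. Below is a minimal sketch in plain Python, assuming the standard pinball loss definition. The function name `pinball_loss` and the example values are my own illustration, not part of LightGBM's API:

```python
def pinball_loss(y, z, tau):
    """Pinball (quantile) loss for one actual value y and one prediction z.

    Hypothetical helper for illustration; assumes the standard definition.
    """
    if not 0 < tau < 1:
        raise ValueError("tau must be strictly between 0 and 1")
    if y >= z:
        # Under-prediction: the error is weighted by tau.
        return tau * (y - z)
    # Over-prediction: the error is weighted by (1 - tau).
    return (1 - tau) * (z - y)


y_true = 10.0  # made-up actual value
for tau in (0.1, 0.5, 0.9):
    under = pinball_loss(y_true, 8.0, tau)   # prediction 2 units too low
    over = pinball_loss(y_true, 12.0, tau)   # prediction 2 units too high
    print(f"tau={tau}: under-prediction loss={under:.2f}, "
          f"over-prediction loss={over:.2f}")
```

With 𝛕 = 0.5 both errors cost the same, while with 𝛕 = 0.9 predicting too low costs nine times as much as predicting too high by the same amount. That pushes the fitted value upward, toward the 0.9 quantile.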
