While it is well established that pre-training large natural language models such as Google’s BERT or XLNet can bring immense advantages in NLP tasks, these models are usually trained on a general collection of texts such as websites, documents, books and news. Experts, however, believe that pre-training models on domain-specific knowledge can provide substantial gains over models trained on general or mixed-domain knowledge.

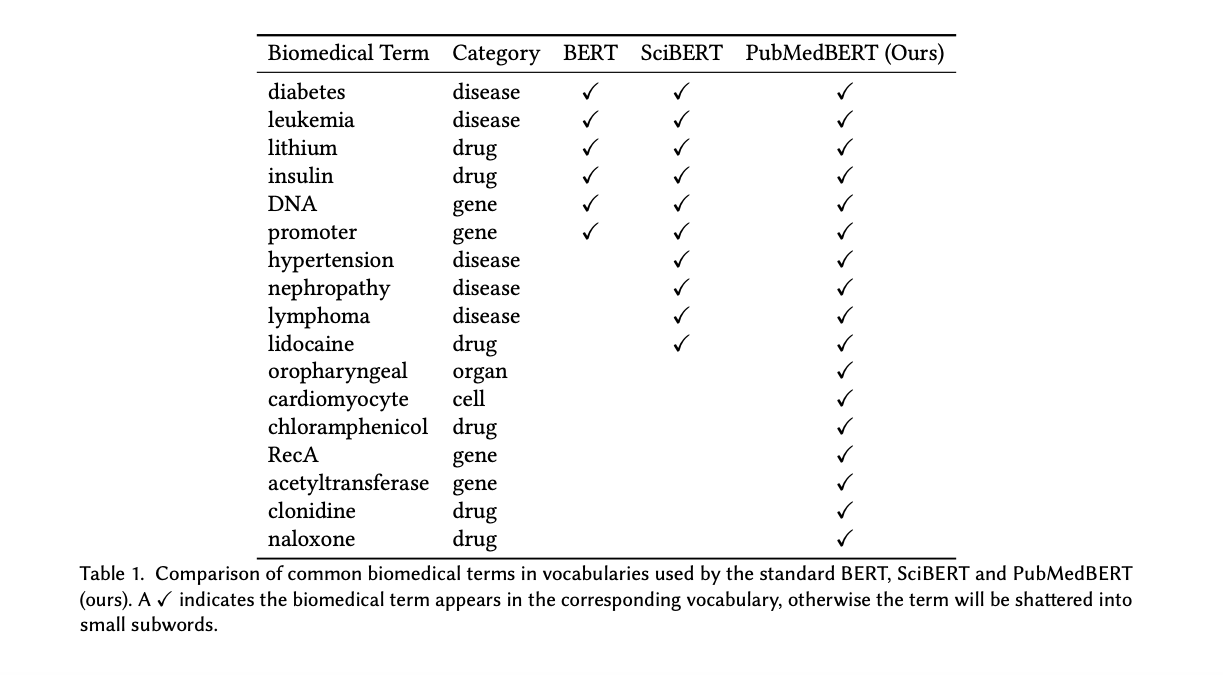

To facilitate this investigation, Microsoft researchers compiled a comprehensive biomedical NLP benchmark from publicly available datasets and used it to compare modelling choices for pre-training and their impact on domain-specific applications, with biomedicine as the case in point. The researchers noted that domain-specific pre-training from scratch could be enormously beneficial for a wide range of specialised NLP tasks. In addition, to accelerate research in biomedical natural language processing, Microsoft has released pre-trained task-specific models for the community.
