Everything we see around us is nothing but an image, and we capture these images with our mobile cameras. In signal processing terms, an image is a signal that conveys some information. First, I will explain what a signal is and how many types of signals there are. In the later part of this blog, I will tell you about images.

We say that an image is a signal. Signals carry some information, which may be useful information or random noise. In mathematics, a signal is a function of one or more independent variables: the variables whose change alters the value of the signal. Signals can be multidimensional. Here we will look at only the three types of signals that are used most in cutting-edge fields such as image processing, computer vision, machine learning and deep learning.

- 1D signal: A signal that depends on only one independent variable. Audio signals are the perfect example, because their value depends only on time. For instance, if you jump to a different time in an audio clip, you hear the sound at that particular instant.

- 2D signal: A signal that depends on two independent variables. An image is a 2D signal, as its information depends only on the position along its length and width.

- 3D signal: A signal that depends on three independent variables. Videos are the best example: a video is just a sequence of images changing over time. Here the image's length and width are two independent variables, and time is the third.
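To make the three cases concrete, here is a minimal sketch that represents each kind of signal as a NumPy array, where the number of array dimensions equals the number of independent variables. The sample rate, tone frequency, and gradient image are made-up values chosen only for illustration.

```python
import numpy as np

# 1D signal: one second of a 440 Hz tone sampled at an assumed 8000 samples/s.
# The only independent variable is time t.
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
audio = np.sin(2 * np.pi * 440 * t)

# 2D signal: a grayscale image whose value depends on (row, column).
# Here, a simple left-to-right gradient with 4 rows and 6 columns.
height, width = 4, 6
image = np.tile(np.linspace(0.0, 1.0, width), (height, 1))

# 3D signal: a video is a stack of frames over time, indexed (time, row, column).
# Each frame here is the gradient image shifted k columns to the right.
num_frames = 5
video = np.stack([np.roll(image, shift=k, axis=1) for k in range(num_frames)])

print(audio.ndim, audio.shape)   # 1 (8000,)
print(image.ndim, image.shape)   # 2 (4, 6)
print(video.ndim, video.shape)   # 3 (5, 4, 6)
```

Indexing `video[k]` gives back a single 2D frame, which matches the idea of a video as images moving through time.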

Types of Images:

- **Analog images:** These are natural images. All the images we see with our eyes, such as the physical objects around us, are analog images. They have continuous values, so their amplitude can take infinitely many values.

- **Digital images:** We can produce digital images by sampling and quantizing analog images. Nowadays, almost all cameras produce digital images directly. In a digital image, all values are discrete: each location has one of a finite set of amplitude values. Most of the time, we use digital images for processing.
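The step from analog to digital can be sketched in a few lines. In this minimal example, `analog_scene` is a hypothetical stand-in for a continuous scene (a real camera does this in its sensor). Sampling evaluates the scene only on a discrete grid of locations, and quantization rounds each real-valued amplitude to one of 256 integer levels (8 bits).

```python
import numpy as np

def analog_scene(x, y):
    """Hypothetical continuous 'analog' image: brightness varies smoothly
    with position and takes real values in the range [0, 1]."""
    return 0.5 + 0.5 * np.sin(2 * np.pi * x) * np.cos(2 * np.pi * y)

# Sampling: evaluate the continuous scene only on a discrete 8 x 8 grid.
rows, cols = 8, 8
xs = np.arange(cols) / cols
ys = np.arange(rows) / rows
X, Y = np.meshgrid(xs, ys)      # both have shape (rows, cols)
sampled = analog_scene(X, Y)    # discrete locations, but still real-valued amplitudes

# Quantization: map each amplitude in [0, 1] to one of 256 integer levels.
levels = 256
digital = np.round(sampled * (levels - 1)).astype(np.uint8)

print(digital.shape, digital.dtype)   # (8, 8) uint8
```

After both steps, the locations and the amplitudes are discrete, which is exactly what makes the result a digital image.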

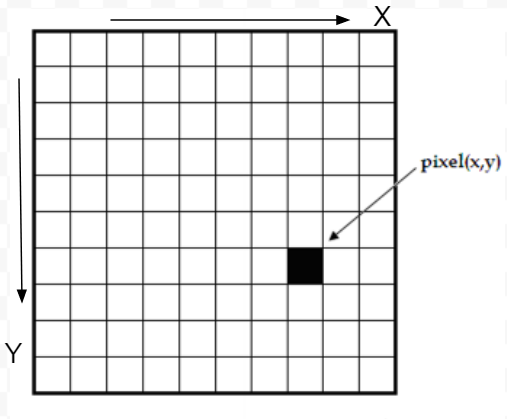

Every digital image is made up of a grid of pixels. Its coordinate system starts from the top-left corner: rows are counted downward and columns to the right.
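When an image is held as a NumPy array (a common convention in Python, assumed here), pixels are addressed as `img[row, column]` with the origin at the top-left. The tiny image below is made up purely to show this.

```python
import numpy as np

# A tiny grayscale image: 3 rows x 4 columns, all black (0).
img = np.zeros((3, 4), dtype=np.uint8)

# Index as img[row, column]: row 0 is the top, column 0 is the left edge.
img[0, 0] = 255   # top-left corner (the origin)
img[2, 3] = 128   # bottom-right corner (last row, last column)

height, width = img.shape   # shape is (rows, columns) = (height, width)
print(height, width)        # 3 4
print(img)
```

Note that the row index (the vertical position) comes first, so `img[y, x]` rather than `img[x, y]`.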

A digital image consists of a grid of small rectangles, and we call each rectangle a pixel. A pixel is the smallest unit of an image. Each pixel has a particular value, its intensity. In a color image, this intensity value is produced by a combination of colors. There are millions of colors, but our eyes perceive them through only three colors and their combinations. We call these the primary colors: red, green and blue.

Why only those three colors?

The reason is simple: the human eye has only three types of color receptors (cone cells). Different combinations of stimulation of these receptors enable the human eye to distinguish nearly 350,000 colors.

Let's get back to our image topic:

So far, we know that the intensity values of a color image are a combination of red, green and blue. Each pixel in a color image has these three color channels. Generally, we represent each color value with 8 bits, i.e., one byte.
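A color image can be sketched as an array of shape (height, width, 3), with one 8-bit unsigned integer (0 to 255) per channel. This minimal example assumes the channel order is R, G, B; be aware that some libraries (OpenCV, for example) store channels as B, G, R instead.

```python
import numpy as np

# A 2 x 2 color image: shape (height, width, 3), one uint8 (8-bit) value per channel.
img = np.zeros((2, 2, 3), dtype=np.uint8)

img[0, 0] = [255, 0, 0]     # pure red
img[0, 1] = [0, 255, 0]     # pure green
img[1, 0] = [0, 0, 255]     # pure blue
img[1, 1] = [255, 255, 0]   # red + green combine to yellow

# Split into three 2D channel arrays (assuming R, G, B order).
r, g, b = img[..., 0], img[..., 1], img[..., 2]

print(img.shape, img.dtype)   # (2, 2, 3) uint8
print(img.itemsize * 8)       # 8 (bits per channel value)
```

Each channel on its own is just a 2D grayscale-like array, which is why color images are often processed one channel at a time.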

Now you can work out how many bits each pixel requires. Each pixel has 3 color values, and each color value is stored in 8 bits, so each pixel needs 3 × 8 = 24 bits. A 24-bit color image can therefore display 2^24 (16,777,216) different colors.
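The same arithmetic, written out as a short sketch: 8 bits give 256 levels per channel, and three independent channels give 256 × 256 × 256 combinations, which equals 2^24.

```python
bits_per_channel = 8
channels = 3  # red, green, blue

bits_per_pixel = channels * bits_per_channel    # 3 * 8
values_per_channel = 2 ** bits_per_channel      # 256 levels: 0..255
total_colors = values_per_channel ** channels   # 256 ** 3

print(bits_per_pixel)                        # 24
print(total_colors)                          # 16777216
print(total_colors == 2 ** bits_per_pixel)   # True
```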

Now here is a question for you: how much memory does it take to store an RGB image of shape 256 × 256? I don't think an explanation is required, but if you want a clear explanation, please comment below.
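For anyone who wants to check their answer, here is a minimal sketch of the calculation. The helper `rgb_image_bytes` is hypothetical, and it counts only the raw, uncompressed pixel data; an actual file on disk (PNG, JPEG, etc.) adds headers and usually applies compression, so its size will differ.

```python
def rgb_image_bytes(height, width, channels=3, bits_per_channel=8):
    """Raw (uncompressed) storage for the pixel data alone, in bytes."""
    total_bits = height * width * channels * bits_per_channel
    return total_bits // 8

size = rgb_image_bytes(256, 256)
print(size)          # 196608  (bytes)
print(size / 1024)   # 192.0   (KiB)
```

In other words, 256 × 256 pixels × 24 bits per pixel = 1,572,864 bits, which is 196,608 bytes.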

#machine-learning #computer-vision #image-processing #deep-learning #image