What is batch normalization?

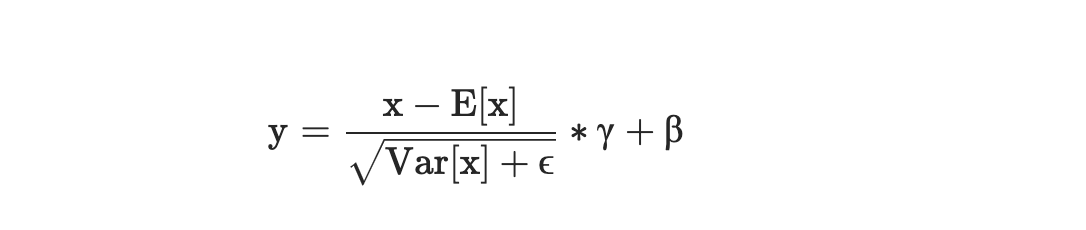

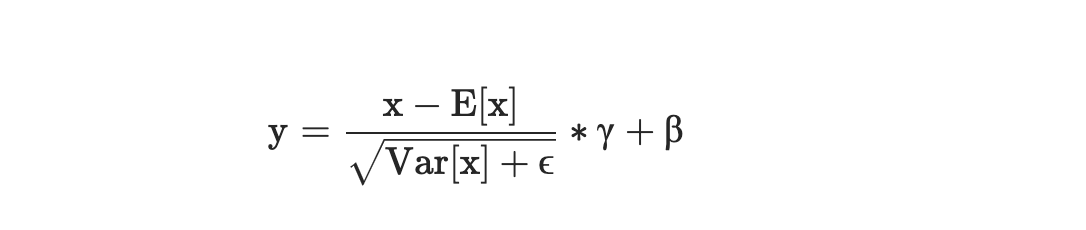

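Batch normalization normalizes the activations between layers using statistics computed over each mini-batch, so that the normalized activations of every feature (channel) have a mean of 0 and a variance of 1 across the batch. It is usually written as follows:

$$
y = \frac{x - \mathrm{E}[x]}{\sqrt{\mathrm{Var}[x] + \epsilon}} \cdot \gamma + \beta
$$

where ε is a small constant added to the variance for numerical stability.

https://pytorch.org/docs/stable/generated/torch.nn.BatchNorm2d.html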
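As a sanity check of the formula y = (x - E[x]) / sqrt(Var[x] + ε) · γ + β, here is a minimal sketch (assuming PyTorch is installed) that applies it by hand to a random input of shape (N, C, H, W) and compares the result with `nn.BatchNorm2d` in training mode. The helper `batch_norm_2d` and the input tensor are hypothetical, for illustration only. The statistics use the biased variance, which is what `BatchNorm2d` uses to normalize during training.

```python
import torch
import torch.nn as nn


def batch_norm_2d(x, gamma, beta, eps=1e-5):
    """Hypothetical manual batch normalization for an input of shape (N, C, H, W)."""
    # Per-channel statistics over the batch and spatial dimensions (N, H, W)
    mean = x.mean(dim=(0, 2, 3), keepdim=True)                  # shape (1, C, 1, 1)
    var = ((x - mean) ** 2).mean(dim=(0, 2, 3), keepdim=True)   # biased variance
    x_hat = (x - mean) / torch.sqrt(var + eps)                  # normalize
    # Learnable per-channel scale (gamma) and shift (beta)
    return x_hat * gamma.view(1, -1, 1, 1) + beta.view(1, -1, 1, 1)


torch.manual_seed(0)
x = torch.randn(8, 3, 4, 4) * 5 + 2   # batch of 8 samples, 3 channels, 4x4 maps

bn = nn.BatchNorm2d(num_features=3)   # gamma = 1, beta = 0 by default
bn.train()                            # training mode: normalize with batch statistics

with torch.no_grad():
    expected = bn(x)
    manual = batch_norm_2d(x, bn.weight, bn.bias, eps=bn.eps)

print(torch.allclose(manual, expected, atol=1e-5))  # True
print(manual.mean(dim=(0, 2, 3)))                    # close to 0 for every channel
```

In evaluation mode (`bn.eval()`), PyTorch instead normalizes with running estimates of the mean and variance collected during training, so this comparison only holds in training mode.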

> The mean and standard-deviation are calculated per-dimension over the mini-batches and γ and β are learnable parameter vectors of size C (where C is the input size). By default, the elements of γ are set to 1 and the elements of β are set to 0.
>
> (https://pytorch.org/docs/stable/generated/torch.nn.BatchNorm2d.html)

For `BatchNorm2d`, C is the number of channels of an input of shape (N, C, H, W).
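A quick way to see the size and default values of γ and β is to inspect the layer's parameters. This sketch assumes PyTorch is installed; the channel count `C = 16` is an arbitrary example. In PyTorch, γ is stored as `weight` and β as `bias`.

```python
import torch.nn as nn

C = 16                                  # example channel count of an (N, C, H, W) input
bn = nn.BatchNorm2d(num_features=C)

print(bn.weight.shape, bn.bias.shape)   # gamma and beta: one value per channel
print(bn.weight.requires_grad)          # gamma (and beta) are learnable parameters
print(bool((bn.weight == 1).all()), bool((bn.bias == 0).all()))  # default gamma = 1, beta = 0
print(bn.running_mean.shape)            # running statistics are also kept per channel

# With affine=False there is no gamma/beta at all: only the normalization is applied
bn_plain = nn.BatchNorm2d(num_features=C, affine=False)
print(bn_plain.weight, bn_plain.bias)
```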

In other words, the mean and standard deviation are computed separately for every mini-batch and for every channel (feature). γ and β are learnable parameters that scale and shift the normalized values, which lets the network control the distribution of the data going into the next layer (e.g., the proportion of positive and negative values that reach a ReLU).
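The ReLU example can be made concrete with a small sketch (assuming PyTorch is installed). The input is a hypothetical, mostly negative single-channel activation map; after normalization, setting β by hand (PyTorch's `bias`) changes how many values survive the ReLU. In a real network β would be learned, not set manually.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
# Hypothetical pre-activation values for one channel, mostly negative (mean -4, std 3)
x = torch.randn(64, 1, 8, 8) * 3 - 4
relu = nn.ReLU()
raw_fraction = (relu(x) > 0).float().mean().item()
print(f"without batch norm: {raw_fraction:.0%} of values pass the ReLU")

bn = nn.BatchNorm2d(num_features=1)
bn.train()                              # normalize with the statistics of this batch

fractions = {}
with torch.no_grad():
    for shift in (-1.0, 0.0, 1.0):
        bn.bias.fill_(shift)            # beta shifts the normalized values
        out = relu(bn(x))
        fractions[shift] = (out > 0).float().mean().item()
        print(f"beta = {shift:+.1f}: {fractions[shift]:.0%} of values pass the ReLU")
```

Because the normalized values are roughly standard normal here, β = -1, 0 and +1 let through roughly 16%, 50% and 84% of the values, compared with under 10% for the raw input.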

Ideally, we would normalize the activations using statistics computed over the entire dataset; however, this is usually not feasible because the dataset is too large. Instead, we compute the statistics for each mini-batch. This is why larger batch sizes are preferred: with a small batch, the estimated mean and standard deviation are noisy and very sensitive to outliers, whereas with large enough batches they are much more stable.
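The effect of batch size on the stability of the statistics can be illustrated with a minimal simulation using only the Python standard library. The "dataset" is synthetic (standard normal values with a few injected outliers) and the helper `spread_of_batch_means` is hypothetical; it measures how much the per-batch mean varies from one random mini-batch to the next.

```python
import random
import statistics

random.seed(0)
# Synthetic activations: 100,000 values from N(0, 1), with 100 extreme outliers
data = [random.gauss(0.0, 1.0) for _ in range(100_000)]
for i in random.sample(range(len(data)), 100):
    data[i] = 50.0


def spread_of_batch_means(batch_size, n_batches=500):
    """Standard deviation of the per-batch mean over randomly drawn mini-batches."""
    means = [statistics.fmean(random.sample(data, batch_size)) for _ in range(n_batches)]
    return statistics.stdev(means)


spreads = {bs: spread_of_batch_means(bs) for bs in (4, 32, 256)}
for bs, s in spreads.items():
    print(f"batch size {bs:>3}: std of batch means = {s:.3f}")
```

The spread shrinks roughly in proportion to 1/sqrt(batch size); with tiny batches, a single outlier can shift the whole batch mean. Only the mean is shown, but the variance estimate behaves similarly.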

#pytorch #batch-normalization #python #data-science #deep-learning