The classical way of doing POS tagging is to use some variant of the **Hidden Markov Model**. Here we’ll see how to do it with recurrent neural networks (RNNs) instead. The original RNN architecture has several variants. One of them, the **Bidirectional RNN**, reads a sequence in reverse order as well as forward. Because the tag of a word often depends on the words that follow it, this extra right-hand context has been shown to boost performance significantly.
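To make the "reverse order" idea concrete, here is a minimal sketch of a bidirectional RNN forward pass. It assumes a toy one-unit Elman RNN with scalar inputs and hand-picked weights (all hypothetical; a real tagger uses word-embedding vectors and learned weight matrices). The forward RNN summarizes the words up to position t, the backward RNN summarizes the words from position t to the end, and the two states are paired per position.

```python
import math
from typing import List, Tuple

# (input weight, recurrent weight, bias) for a hypothetical one-unit RNN
Params = Tuple[float, float, float]


def rnn_pass(xs: List[float], params: Params) -> List[float]:
    """Run a one-unit Elman RNN left to right and return every hidden state."""
    w_x, w_h, b = params
    h = 0.0
    states = []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


def birnn_pass(xs: List[float], fwd: Params, bwd: Params) -> List[Tuple[float, float]]:
    """Pair each position's forward state with its backward state."""
    forward = rnn_pass(xs, fwd)
    # The backward RNN reads the reversed sequence; flipping its outputs back
    # aligns backward[t] with xs[t], so it summarizes xs[t:] (the right context).
    backward = rnn_pass(xs[::-1], bwd)[::-1]
    return list(zip(forward, backward))


if __name__ == "__main__":
    xs = [0.5, -1.0, 2.0, 0.1]  # hypothetical stand-ins for word features
    for t, (h_f, h_b) in enumerate(birnn_pass(xs, (1.0, 0.5, 0.0), (0.8, -0.3, 0.1))):
        print(f"t={t}: forward={h_f:+.3f} backward={h_b:+.3f}")
```

Changing the last input leaves the forward state at position 0 untouched but changes the backward state there, which is exactly the extra context a bidirectional model gets. Deep learning frameworks package this pattern, for example via `bidirectional=True` on PyTorch's `nn.LSTM`/`nn.GRU` or Keras's `Bidirectional` wrapper.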

We’ll then move on to two important variants of the RNN that have made it possible to train large networks on real datasets. Although RNNs can solve a variety of sequence problems, their architecture is also their biggest weakness: during training, the gradient is multiplied through every time step, so it tends to either explode or vanish. Two popular gated RNN architectures, the **Long Short-Term Memory (LSTM)** and the **Gated Recurrent Unit (GRU)**, were designed to mitigate this problem, mainly the vanishing gradients (exploding gradients are typically handled with gradient clipping). We’ll look into all of these models with respect to POS tagging.
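The vanishing and exploding gradient problem can be seen in a toy setting. The sketch below assumes a hypothetical one-unit RNN, h_t = f(w · h_{t-1} + x_t), and computes d h_T / d h_0 with the chain rule: one local derivative per time step, all multiplied together. It illustrates why a vanilla RNN struggles; it does not show how LSTM or GRU fix it.

```python
import math
from typing import List


def bptt_gradient(w: float, xs: List[float], h0: float = 0.0, linear: bool = False) -> float:
    """d h_T / d h_0 for the scalar RNN h_t = f(w * h_{t-1} + x_t).

    f is tanh by default, or the identity when linear=True.
    """
    h, grad = h0, 1.0
    for x in xs:
        pre = w * h + x
        if linear:
            h, local = pre, w
        else:
            h = math.tanh(pre)
            local = w * (1.0 - h * h)  # derivative of tanh(w*h_{t-1} + x_t) w.r.t. h_{t-1}
        grad *= local  # chain rule: one factor per time step
    return grad


if __name__ == "__main__":
    zeros = [0.0] * 50
    print(bptt_gradient(0.5, zeros))               # |w| < 1: equals 0.5**50 here, vanishing
    print(bptt_gradient(1.5, zeros, linear=True))  # |w| > 1, no squashing: exploding
    print(bptt_gradient(1.5, [1.0] * 50))          # tanh saturates, so this vanishes too
```

Because the same recurrent weight and a tanh derivative of at most 1 appear in every factor, the product shrinks or grows geometrically with sequence length. Gated cells such as the LSTM add a mostly additive path through time, which helps gradients survive over many steps.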

POS Tagging — An Overview

The process of classifying words into their parts of speech and labeling them accordingly is known as part-of-speech tagging, or simply POS tagging. The NLTK library includes a number of corpora that contain words along with their POS tags. I will use three of these POS-tagged corpora, namely **treebank**, **conll2000**, and **brown**, to demonstrate the key concepts. If you would rather go straight to the code, an accompanying notebook is published on Kaggle.
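Before any RNN can consume these corpora, the tagged sentences have to become integer sequences of equal length. In NLTK, after `nltk.download("treebank")`, `nltk.corpus.treebank.tagged_sents()` returns a list of sentences, each a list of `(word, tag)` pairs. The sketch below assumes that shape but uses a tiny hypothetical corpus so it runs without NLTK; the lowercasing, the reserved ids `PAD = 0` and `UNK = 1`, and `max_len` are illustrative choices, not the notebook's exact preprocessing.

```python
from typing import Dict, Iterable, List, Tuple

TaggedSentence = List[Tuple[str, str]]

# Hypothetical toy corpus with the same shape as NLTK's tagged_sents():
# a list of sentences, each a list of (word, tag) pairs.
corpus: List[TaggedSentence] = [
    [("The", "DT"), ("cat", "NN"), ("sat", "VBD"), (".", ".")],
    [("Dogs", "NNS"), ("bark", "VBP"), (".", ".")],
]

PAD, UNK = 0, 1  # reserved ids: padding and out-of-vocabulary words


def build_vocab(tokens: Iterable[str], specials: List[str]) -> Dict[str, int]:
    """Map each distinct token to an integer id, after the special tokens."""
    vocab = {tok: i for i, tok in enumerate(specials)}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


def encode(sents: List[TaggedSentence], word2id: Dict[str, int],
           tag2id: Dict[str, int], max_len: int) -> Tuple[List[List[int]], List[List[int]]]:
    """Turn tagged sentences into padded word-id and tag-id sequences."""
    X, Y = [], []
    for sent in sents:
        words = [word2id.get(w.lower(), UNK) for w, _ in sent][:max_len]
        tags = [tag2id[t] for _, t in sent][:max_len]
        # Right-pad to max_len so sequences can be batched together
        X.append(words + [PAD] * (max_len - len(words)))
        Y.append(tags + [PAD] * (max_len - len(tags)))
    return X, Y


word2id = build_vocab((w.lower() for s in corpus for w, _ in s), ["<PAD>", "<UNK>"])
tag2id = build_vocab((t for s in corpus for _, t in s), ["<PAD>"])
X, Y = encode(corpus, word2id, tag2id, max_len=5)

if __name__ == "__main__":
    print(X)  # [[2, 3, 4, 5, 0], [6, 7, 5, 0, 0]]
    print(Y)  # [[1, 2, 3, 4, 0], [5, 6, 4, 0, 0]]
```

Since padded positions share tag id 0, a training loop would typically mask them out of the loss so the model is not rewarded for predicting padding.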

POS Tagging Using RNN


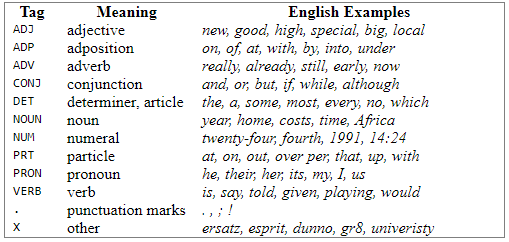

The following table provides information about some of the major tags:
