Fastai is an open-source deep learning library that adds higher-level functionality to PyTorch and makes it easier to achieve state-of-the-art results with little coding.

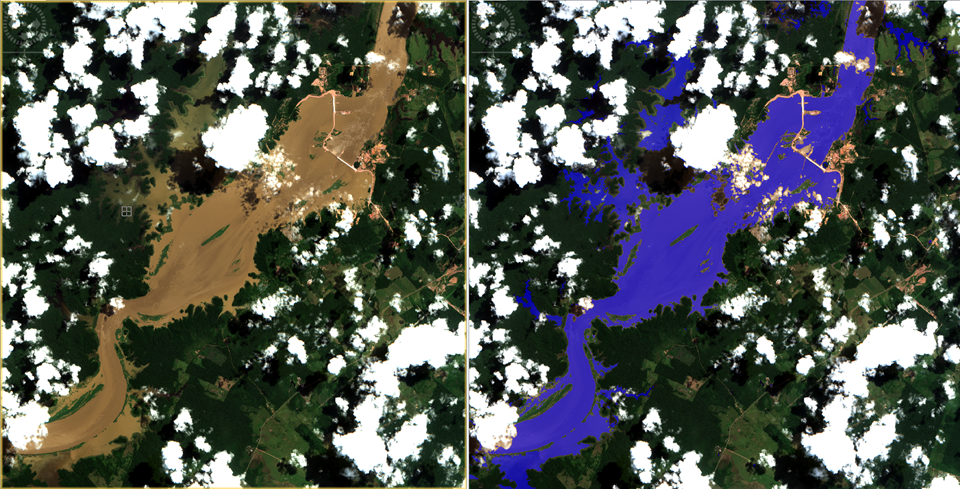

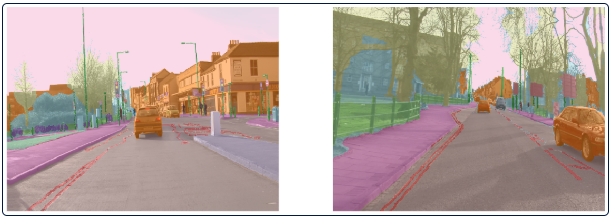

The vision module is really handy when we need to quickly create an image dataset, apply data augmentations, resize, crop or even overlay a segmentation mask (Figure 1). However, all this simplification comes at a cost. Most of these higher-level APIs for computer vision are optimized for RGB images, and these image libraries don’t support multispectral or multichannel images.

In recent years, thanks to increased data accessibility, Earth Observation researchers have been paying a lot of attention to deep learning techniques such as image recognition, image segmentation and object detection [1]. The problem is that satellite imagery, usually composed of many different spectral bands (wavelengths), doesn’t fit into most vision libraries used by the deep learning community. For that reason, I have been working directly with PyTorch to create the dataset (here) and train the “home-made” U-Net architecture (here).
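To make the mismatch concrete, a multispectral scene can be pictured as an array with more than three channels. The snippet below is only a minimal sketch: the six band names, the 64x64 size and the random values are illustrative assumptions, not data from this project.

```python
import numpy as np

# Hypothetical 6-band scene; band names and sizes are assumptions for illustration.
# Random values stand in for real reflectance data.
rng = np.random.default_rng(0)
band_names = ["blue", "green", "red", "nir", "swir1", "swir2"]
bands = [rng.random((64, 64), dtype=np.float32) for _ in band_names]

# Stack the bands into a channels-first array (C, H, W),
# the layout PyTorch convolution layers expect for a single image.
scene = np.stack(bands, axis=0)

# An RGB-only pipeline would keep just three of the six bands.
rgb = scene[[2, 1, 0]]  # red, green, blue

print(scene.shape)
print(rgb.shape)
```

Any loader that assumes exactly three channels would have to discard the remaining bands, which is the limitation described above.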

With the upcoming release of Fastai-v2 (promised within the next few weeks) [2], I wanted to test whether its Data Block structure could be used to create a multispectral image dataset to train a U-Net model. This is more advanced than my previous stories, as we have to create some custom subclasses, but I tried to keep it as simple as possible. The notebook with all the code is available in the GitHub project (notebook here).

#remote-sensing #deep-learning #multispectral #fastai