We cover basic mistakes that can lead to unnecessary copying of data and memory allocation in NumPy. We further cover NumPy internals, strides, reshaping, and transpose in detail.

In the first two parts of our series on NumPy optimization, we primarily covered how to speed up your code by replacing loops with vectorized code. We covered the basics of vectorization and broadcasting, and then used them to optimize an implementation of the K-Means algorithm, speeding it up by 70x compared to the loop-based implementation.

Following the format of Parts 1 and 2, Part 3 (this one) will introduce a set of NumPy features along with some theory, namely NumPy internals, strides, reshape, and transpose. Part 4 will cover the application of these tools to a practical problem.

In the earlier posts we covered how to deal with loops. In this post we will focus on yet another bottleneck that can often slow down NumPy code: unnecessary copying and memory allocation. The ability to minimize both issues not only speeds up the code, but may also reduce the memory a program takes up.

We will begin with some basic mistakes that can lead to unnecessary copying of data and memory allocation. Then we’ll take a deep dive into how NumPy internally stores its arrays, how operations like reshape and transpose are performed, and detail a visualization method to compute the results of such operations without typing a single line of code.

In Part 4, we’ll use the things we’ve learned in this part to optimize the output pipeline of an object detector. But let’s leave that for later.

So, let’s get started.

Preallocate, Preallocate, Preallocate!

A mistake that I made myself in the early days of moving to NumPy, and also something that I see many people make, is to use the loop-and-append paradigm. So, what exactly do I mean by this?

Consider the following piece of code. It adds an element to a list during each iteration of the loop.

import random

li = []

for i in range(10000):
    # Something important goes here
    x = random.randint(1, 10)
    li.append(x)

The script above merely creates a list of random integers from 1 to 10 (both inclusive). However, instead of a random number, the thing we are adding to the list could be the result of some involved operation happening every iteration of the loop.

append is an amortized O(1) operation in Python. In simple words, on average, and regardless of how large your list is, append will take a constant amount of time. This is the reason you’ll often spot this method being used to add to lists in Python. Heck, this method is so popular that you will even find it deployed in production-grade code. I call this the loop-and-append paradigm. While it works well in Python, the same can’t be said for NumPy.

When people switch to NumPy and they have to do something similar, this is what they sometimes do.

import numpy as np

# Do the operation for the first step to get an initial array to concatenate onto
arr = np.random.randn(1, 10)

# Loop
for i in range(10000 - 1):
    arr = np.concatenate((arr, np.random.rand(1, 10)))

Alternatively, you could use np.append (with axis=0) in place of np.concatenate. In fact, np.append internally uses np.concatenate, so it cannot be any faster than np.concatenate.
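To make the relationship between the two functions concrete, here is a small sketch. The array names `a` and `b` are purely illustrative. It shows that np.append with axis=0 gives the same result as np.concatenate, and that leaving out the axis argument flattens both inputs first.

```python
import numpy as np

a = np.arange(6).reshape(2, 3)   # illustrative 2 x 3 array
b = np.array([[6, 7, 8]])        # one extra row, shape (1, 3)

via_concat = np.concatenate((a, b))     # joins along axis 0 by default
via_append = np.append(a, b, axis=0)    # same result, returned as a new array

flat = np.append(a, b)                  # no axis given: inputs are flattened first

print(np.array_equal(via_concat, via_append))  # True
print(via_append.shape)                        # (3, 3)
print(flat.shape)                              # (9,)
```

Note that neither call modifies `a` in place; both return a freshly allocated array.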

Nevertheless, this is not a good way to go about such operations, because np.concatenate, unlike append, is not a constant-time function. It is a linear-time function: it creates a new array in memory and then copies the contents of the two arrays being concatenated into the newly allocated memory. Calling it on every iteration therefore makes the loop as a whole quadratic in the number of rows.

But why can’t NumPy implement a constant time concatenate, along the lines of how append works? The answer to this lies in how lists and NumPy arrays are stored.

The Difference Between How Lists And Arrays Are Stored

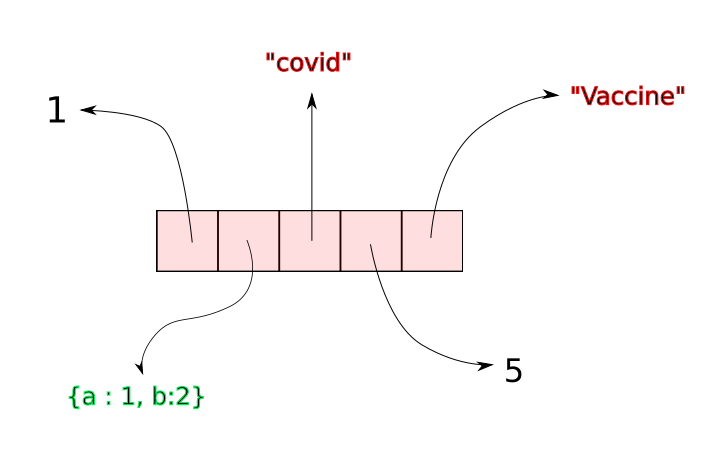

A Python list is made up of references that point to objects. While the references are stored in a contiguous manner, the objects they point to can be anywhere in memory.

A Python list is made of references to objects, which are stored elsewhere in memory.

Whenever we create a Python list, a certain amount of contiguous space is allocated for the references that make up the list. Suppose a list has n elements. When we call append on the list, Python simply inserts a reference to the object being appended at the (n+1)th slot in that contiguous space.

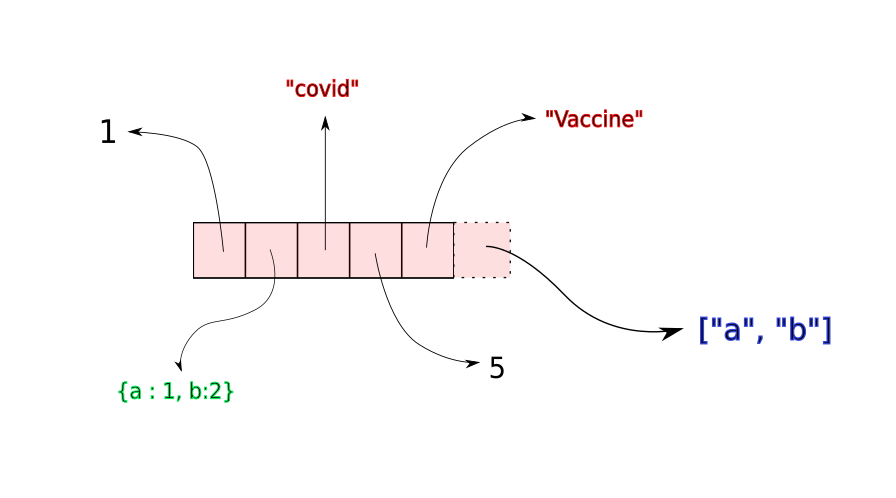

An append operation merely involves adding a reference to wherever the appended object is stored in the memory. No copying is involved.

Once this contiguous space fills up, a new, larger memory block is allocated to the list, with room for further insertions, and the existing references are copied to the new location. While the time for this copy is not constant (it grows with the size of the list), such reallocations are rare. Therefore, on average, append takes constant time, independent of the size of the list.
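The occasional reallocation described above can be observed directly. The sketch below assumes CPython, where sys.getsizeof on a list reports the size of the list object including its buffer of references. The exact growth pattern is an implementation detail and may differ between Python versions.

```python
import sys

li = []
sizes = [sys.getsizeof(li)]   # size in bytes of the list object itself (CPython)
n = 1000

for i in range(n):
    li.append(i)
    sizes.append(sys.getsizeof(li))

# Count how many appends changed the size of the underlying reference buffer
resizes = sum(1 for before, after in zip(sizes, sizes[1:]) if after != before)

print(f"{n} appends triggered {resizes} reallocations")
```

Only a small fraction of the appends should change the reported size, which is what makes append cheap on average.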

However, NumPy arrays are stored as contiguous blocks of the values that make up the array. Unlike Python lists, which merely hold references, NumPy arrays store the actual values.

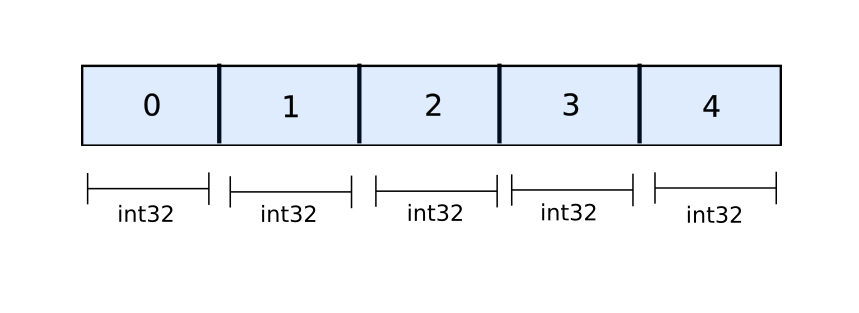

NumPy arrays are stored as values (32-bit integers here) lined up contiguously in memory.

All the space for a NumPy array is allocated beforehand, when the array is initialized.

a = np.zeros((10,20)) ## allocate space for 10 x 20 floats
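As a quick check of the allocation above, the following sketch inspects the array's memory footprint. It assumes the default dtype of np.zeros, which is float64 (8 bytes per element).

```python
import numpy as np

a = np.zeros((10, 20))           # float64 by default: 8 bytes per element

print(a.itemsize)                # 8
print(a.nbytes)                  # 1600, i.e. 10 * 20 * 8
print(a.flags['C_CONTIGUOUS'])   # True: the values sit in one contiguous block
```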

There is no dynamic resizing going on the way it happens for Python lists. When you call np.concatenate on two arrays, a completely new array is allocated, and the data of the two arrays is copied over to the new memory location. This makes np.concatenate slower than append even if it’s being executed in C.
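One way to convince yourself that concatenation produces a fresh copy is to check whether the result shares memory with its inputs. This is a minimal sketch with illustrative array names, using np.shares_memory.

```python
import numpy as np

a = np.zeros((2, 3))
b = np.ones((1, 3))

c = np.concatenate((a, b))      # new 3 x 3 array in newly allocated memory

print(np.shares_memory(c, a))   # False
print(np.shares_memory(c, b))   # False

c[0, 0] = 42.0                  # writing to the result...
print(a[0, 0])                  # 0.0 (...leaves the input untouched)
```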

To circumvent this issue, you should preallocate the memory for arrays whenever you can. Preallocate the array before the body of the loop and simply use slicing to set the values of the array during the loop. Below is such a variant of the above code.

arr = np.zeros((10000, 10))

for i in range(10000):
    arr[i] = np.random.rand(1, 10)

Here, we allocate the memory only once. The only copying involved is writing the random numbers into the allocated space; the array itself is not moved around in memory on every iteration.
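If you want to verify that the preallocated loop is a drop-in replacement, one option is to replay the same random stream through both versions and compare the results. The sketch below makes a few assumptions that are not in the original code: it uses a seeded np.random.default_rng generator, a smaller row count, and np.empty instead of np.zeros (which is safe here only because every row gets overwritten).

```python
import numpy as np

n_rows, n_cols = 1000, 10   # smaller than the 10000 rows above, to keep this quick

# Loop-and-concatenate version
rng = np.random.default_rng(0)
grown = rng.random((1, n_cols))
for i in range(n_rows - 1):
    grown = np.concatenate((grown, rng.random((1, n_cols))))

# Preallocated version, replaying the same random stream
rng = np.random.default_rng(0)
prealloc = np.empty((n_rows, n_cols))   # uninitialized; every row is filled below
for i in range(n_rows):
    prealloc[i] = rng.random((1, n_cols))

print(np.array_equal(grown, prealloc))  # True
```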

Timing the code

In order to see the speed benefits of preallocating arrays, we time the two snippets using timeit.

%%timeit -n 100
arr = np.random.randn(1, 10)
for i in range(10000 - 1):
    arr = np.concatenate((arr, np.random.rand(1, 10)))

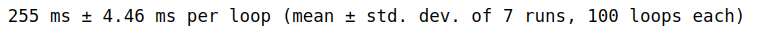

The output is

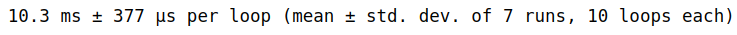

And for the code with preallocation:

%%timeit -n 10
arr = np.zeros((10000, 10))
for i in range(10000):
    arr[i] = np.random.rand(1, 10)

We get a speedup of about 25x.
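The %%timeit cell magic only works inside IPython or Jupyter. If you are running a plain Python script, a rough equivalent can be sketched with the standard timeit module. The function names and the reduced row count `N` below are hypothetical choices made for illustration, and the numbers you get will depend on your machine and NumPy version.

```python
import timeit
import numpy as np

N = 2000  # hypothetical, smaller row count so the script finishes quickly

def grow_by_concatenate(n=N):
    # Allocates a new array and copies all existing rows on every iteration
    arr = np.random.rand(1, 10)
    for i in range(n - 1):
        arr = np.concatenate((arr, np.random.rand(1, 10)))
    return arr

def fill_preallocated(n=N):
    # Allocates once, then writes each row into its slot
    arr = np.zeros((n, 10))
    for i in range(n):
        arr[i] = np.random.rand(1, 10)
    return arr

# Total time in seconds for 5 runs of each function
t_concat = timeit.timeit(grow_by_concatenate, number=5)
t_prealloc = timeit.timeit(fill_preallocated, number=5)

print(f"concatenate: {t_concat:.4f} s, preallocated: {t_prealloc:.4f} s")
```

Because the concatenate version copies the whole array on every iteration, the gap between the two should widen as the row count grows.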
