We are happy to open source TensorFlow Decision Forests (TF-DF). TF-DF is a collection of production-ready state-of-the-art algorithms for training, serving and interpreting decision forest models (including random forests and gradient boosted trees). You can now use these models for classification, regression and ranking tasks - with the flexibility and composability of TensorFlow and Keras.

## **About decision forests**

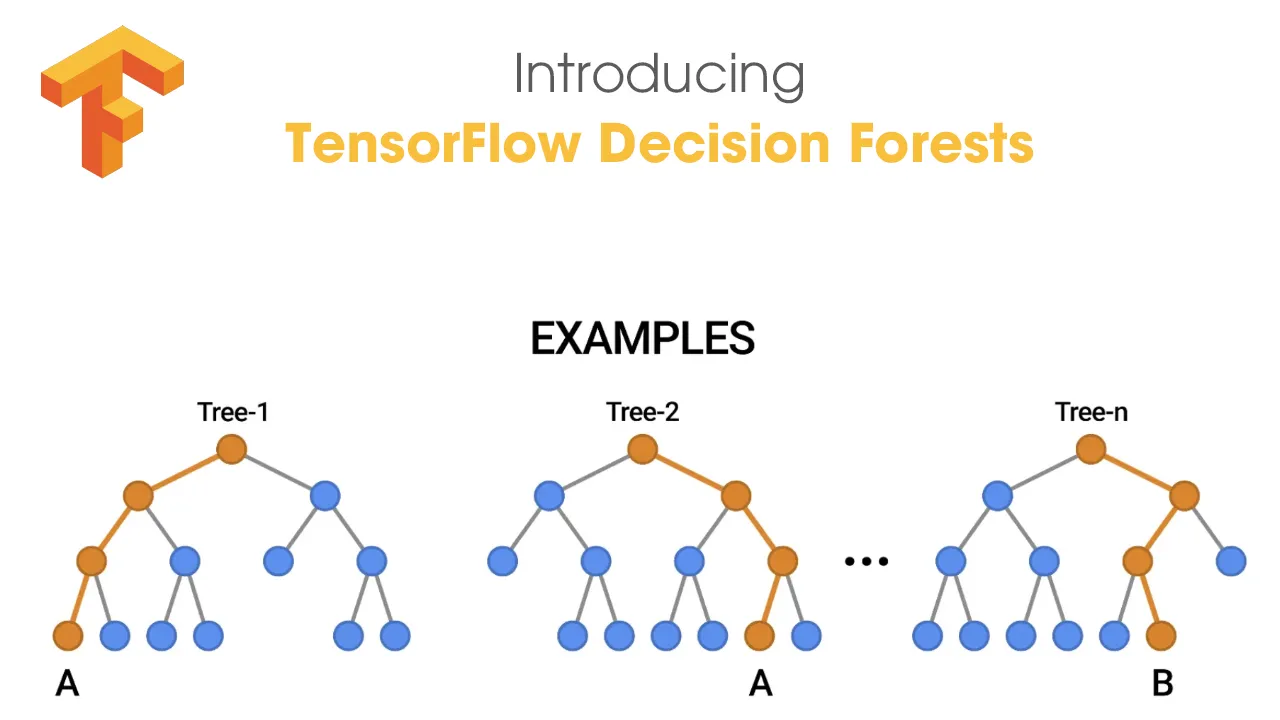

Decision forests are a family of machine learning algorithms with quality and speed competitive with (and often preferable to) neural networks, especially when you’re working with tabular data. They’re built from many [decision trees](https://developers.google.com/machine-learning/glossary#decision-tree), which makes them easy to use and understand - and you can take advantage of the many interpretability tools and techniques that already exist today.
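To make "built from many decision trees" concrete, here is a minimal pure-Python sketch of how a forest can classify an example by majority vote over its trees. It is illustrative only and is not how TF-DF is implemented: the dictionary-based tree layout, the helper names (`leaf`, `predict_tree`, `predict_forest`), the feature names and the three hand-written trees are all hypothetical, and a real library learns its trees from data.

```python
from collections import Counter


def leaf(label):
    """Build a leaf node that always predicts `label`."""
    return {"label": label}


def predict_tree(node, example):
    """Walk one decision tree from the root to a leaf and return the leaf label."""
    while "label" not in node:
        value = example[node["feature"]]
        if "threshold" in node:
            # Numeric condition: is the value at or above the threshold?
            passed = value >= node["threshold"]
        else:
            # Categorical condition: is the value in the allowed set?
            passed = value in node["values"]
        node = node["yes"] if passed else node["no"]
    return node["label"]


def predict_forest(trees, example):
    """Classify an example by majority vote over the individual trees."""
    votes = Counter(predict_tree(tree, example) for tree in trees)
    return votes.most_common(1)[0][0]


# Three hypothetical one-split trees mixing numeric and categorical features.
trees = [
    {"feature": "bill_length_mm", "threshold": 45.0,
     "yes": leaf("Gentoo"), "no": leaf("Adelie")},
    {"feature": "island", "values": {"Biscoe"},
     "yes": leaf("Gentoo"), "no": leaf("Adelie")},
    {"feature": "body_mass_g", "threshold": 4500,
     "yes": leaf("Gentoo"), "no": leaf("Adelie")},
]

example = {"bill_length_mm": 47.2, "island": "Dream", "body_mass_g": 5000}
prediction = predict_forest(trees, example)
print(prediction)
```

Because each tree is a readable chain of conditions, you can trace exactly which trees voted for which label, which is part of what makes these models easy to inspect.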

TF-DF brings this class of models along with a suite of tailored tools to TensorFlow users:

* Beginners will find it easier to develop and explain decision forest models. There is no need to explicitly list or pre-process input features (as decision forests can naturally handle numeric and categorical attributes), specify an architecture (for example, by trying different combinations of layers like you would in a neural network), or worry about models diverging. Once your model is trained, you can plot it directly or analyse it with easy-to-interpret statistics.

* Advanced users will benefit from models with very fast inference (under a microsecond per example in many cases). The library also offers a great deal of composability for model experimentation and research. In particular, it is easy to combine neural networks and decision forests.
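The beginner workflow described above can be sketched in a few lines. This is a minimal sketch, assuming the `tensorflow_decision_forests` package is installed and that you already have a pandas DataFrame; the function name `train_random_forest`, the argument `train_df` and the default label column `"species"` are hypothetical and only serve the illustration.

```python
def train_random_forest(train_df, label="species"):
    """Train a TF-DF random forest on a pandas DataFrame and return the model.

    `train_df` and the default `label` column name are hypothetical inputs.
    """
    # Imported here so the dependency is only required when training runs.
    import tensorflow_decision_forests as tfdf

    # Convert the DataFrame to a tf.data.Dataset. Numeric and string columns
    # are passed as-is; no manual feature list or encoding is specified.
    train_ds = tfdf.keras.pd_dataframe_to_tf_dataset(train_df, label=label)

    # No architecture to design: create the model with defaults and fit it.
    model = tfdf.keras.RandomForestModel()
    model.fit(train_ds)

    # Print a text summary of the trained model.
    model.summary()
    return model
```

You would call it as `model = train_random_forest(my_dataframe, label="my_label_column")`. In a notebook, `tfdf.model_plotter.plot_model_in_colab(model, tree_idx=0)` can then be used to plot one of the trees.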

If you’re already using decision forests outside of TensorFlow, here’s a little of what TF-DF offers:

* It provides a slew of state-of-the-art decision forest training and serving algorithms such as random forests, gradient-boosted trees, CART, (Lambda)MART, DART, Extra Trees, greedy global growth, oblique trees, one-side sampling, categorical-set learning, random categorical learning, out-of-bag evaluation and feature importance, and structural feature importance.

* This library can serve as a bridge to the rich TensorFlow ecosystem by making it easier for you to integrate tree-based models with various TensorFlow [tools](https://www.tensorflow.org/resources/tools), [libraries](https://www.tensorflow.org/resources/libraries-extensions), and platforms such as [TFX](https://www.tensorflow.org/tfx).

* And if you are new to neural networks, you can use decision forests as an easy way to get started with TensorFlow, and continue to explore neural networks from there.